tldr: Many high-stakes decisions (e.g., drug approval) rely on p-values, but people submitting evidence respond strategically even w/o p-hacking. Can we characterize this behavior & how policy shapes it?

1/n

Apply by *December 15* for full consideration.

- A 46% false fraud rate

- Anguish for families who were wrongly accused of fraud and had benefits stopped

- Months of additional work for government, setting up a hotline, correcting false fraud

www.theguardian.com/society/2025...

Come by if you’re around!

www.eventbrite.com/e/fall-2025-...

🕐 Wed 16 Jul 4:30 p.m. PDT — 7 p.m. PDT

📍East Exhibition Hall A-B #E-1104

🔗 arxiv.org/abs/2502.16380

But is there any evidence for that?

In our latest work w/ David Danks and @berkustun, we show that explanations fail to help people, even under optimal conditions.

PDF shorturl.at/yaRua

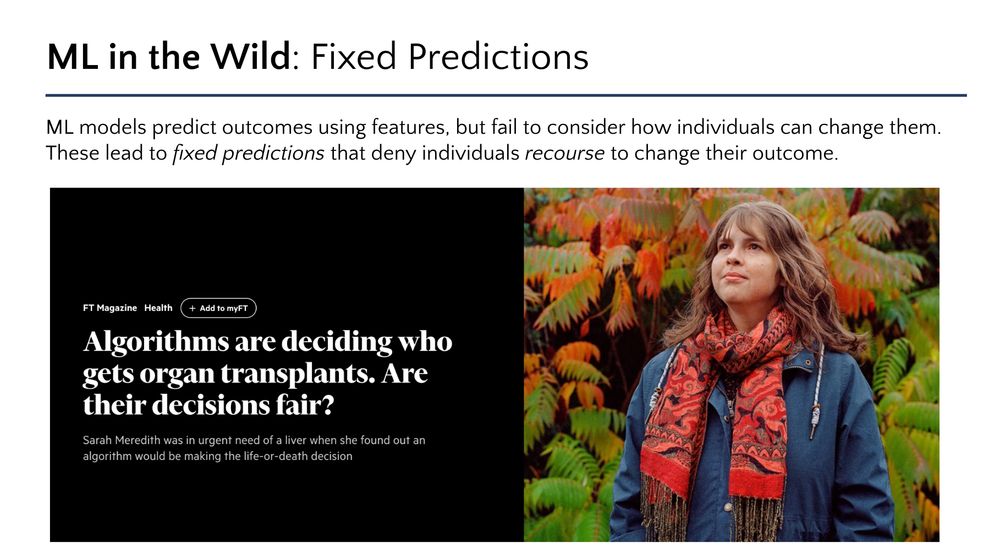

Plus - as we show - it also won't give recourse.

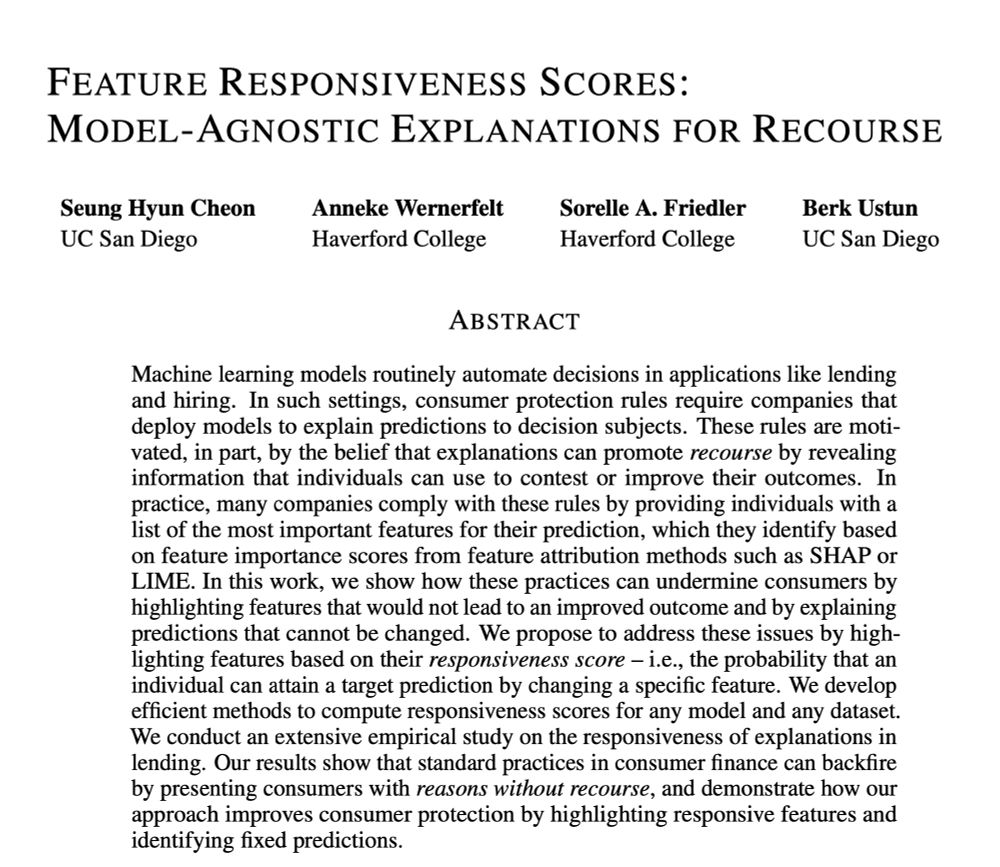

In a paper at #ICLR we introduce feature responsiveness scores... 1/

arxiv.org/pdf/2410.22598

In our latest w @anniewernerfelt.bsky.social @berkustun.bsky.social @friedler.net, we show how existing explanation frameworks fail and present an alternative for recourse

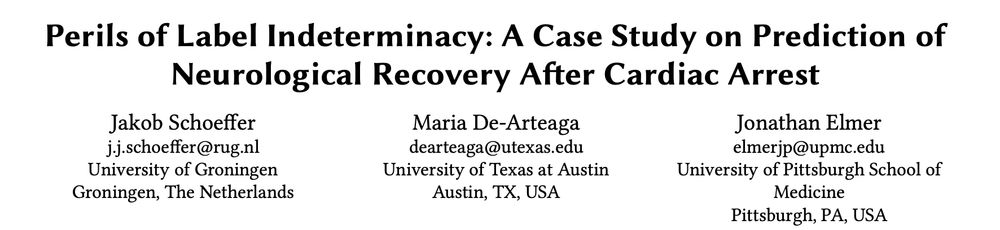

– Diagnoses from unreliable tests

– Outcomes from noisy electronic health records

In a new paper w/@berkustun, we study how this subjects individuals to a lottery of mistakes.

Paper: bit.ly/3Y673uZ

🧵👇

“Learning Under Temporal Label Noise”

We tackle a new challenge in time series ML: label noise that changes over time 🧵👇

arxiv.org/abs/2402.04398

Paper submissions: Feb 20

hcxai.jimdosite.com

Last week, in its supervisory highlights, the Bureau offered a range of impressive new details on how financial institutions should be searching for less discriminatory algorithms.

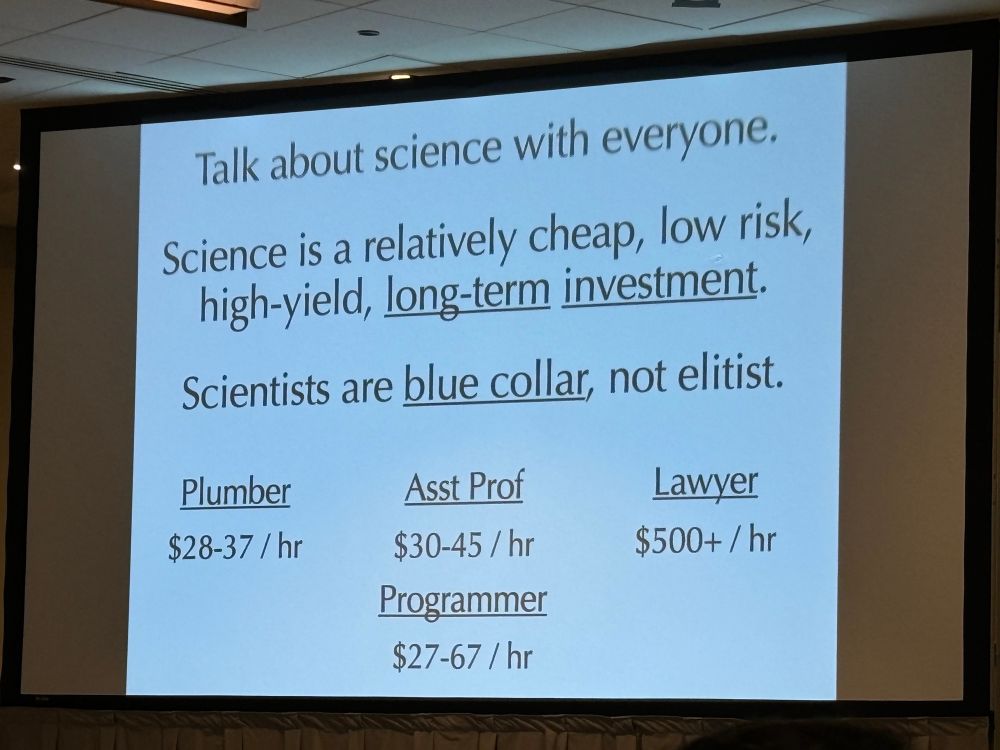

Engaging discussions on the future of #AI in #healthcare at this week's ICHPS, hosted by @amstatnews.bsky.social.

JCHI's @kdpsingh.bsky.social shared insights on the safety & equity of #MachineLearning algorithms and examined bias in large language models.

Perhaps most important to AI translation is the local silent trial. Ethically, and from an evidentiary perspective, this is essential!

url.au.m.mimecastprotect.com/s/pQSsClx14m...

In collaboration with @s010n.bsky.social and Manish Raghavan, we explore strategies and fundamental limits in searching for less discriminatory algorithms.
