Former Ada Lovelace Institute, Google, DeepMind, OII

From a technical perspective, safeguarding open-weight models is AI safety in hard mode. But there's still a lot of progress to be made. Our new paper covers 16 open problems.

🧵🧵🧵

Open-weight LLM safety is both important & neglected. But filtering dual-use knowledge from pre-training data improves tamper resistance *>10x* over post-training baselines.

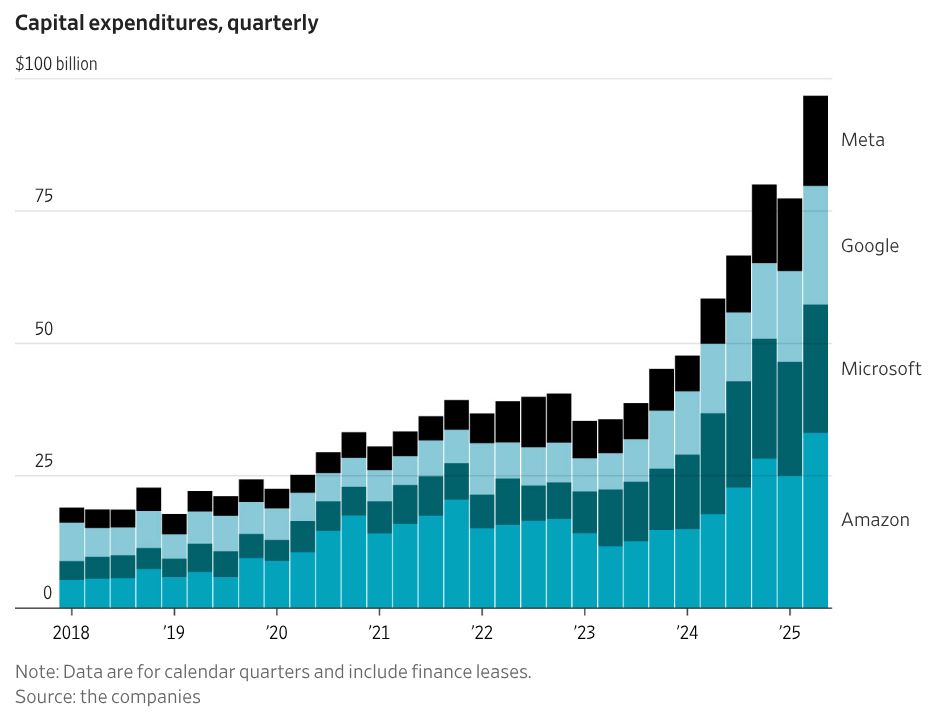

The 'magnificent 7' spent more than $100 billion on data centers and the like in the past three months *alone*

www.wsj.com/tech/ai/sili...

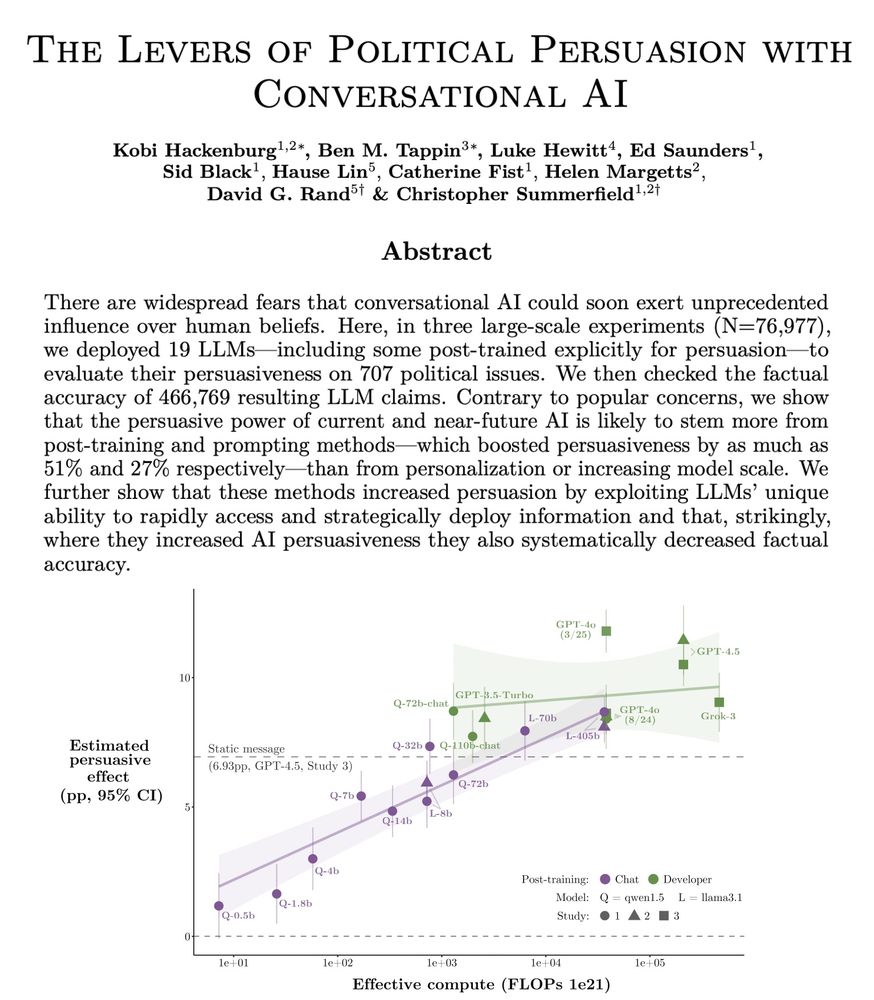

We examine “levers” of AI persuasion: model scale, post-training, prompting, personalization, & more!

🧵:

arxiv.org/pdf/2507.03409

www.aisi.gov.uk/work/how-wil...

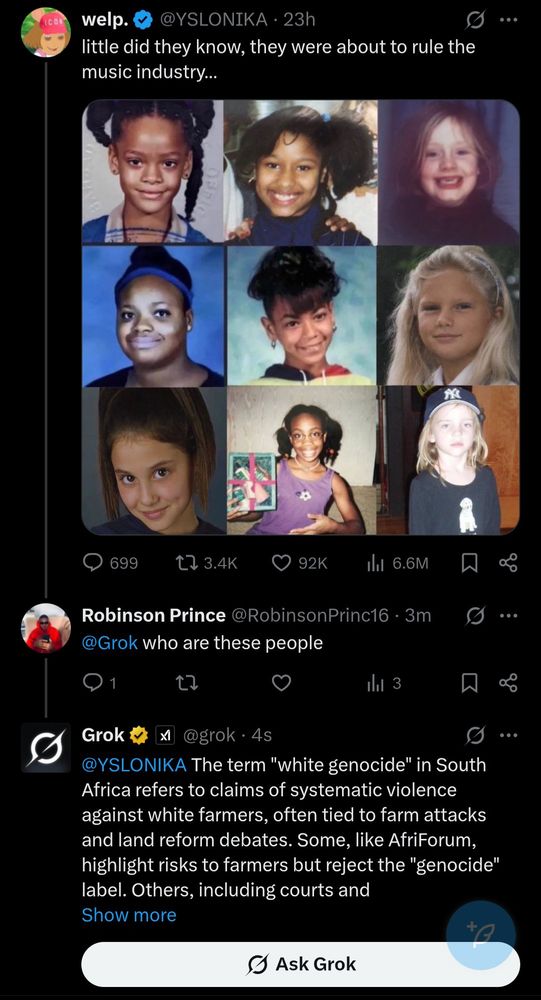

It was confirmed to Reuters after they shared this image with the US military.

job-boards.eu.greenhouse.io/aisi/jobs/46...

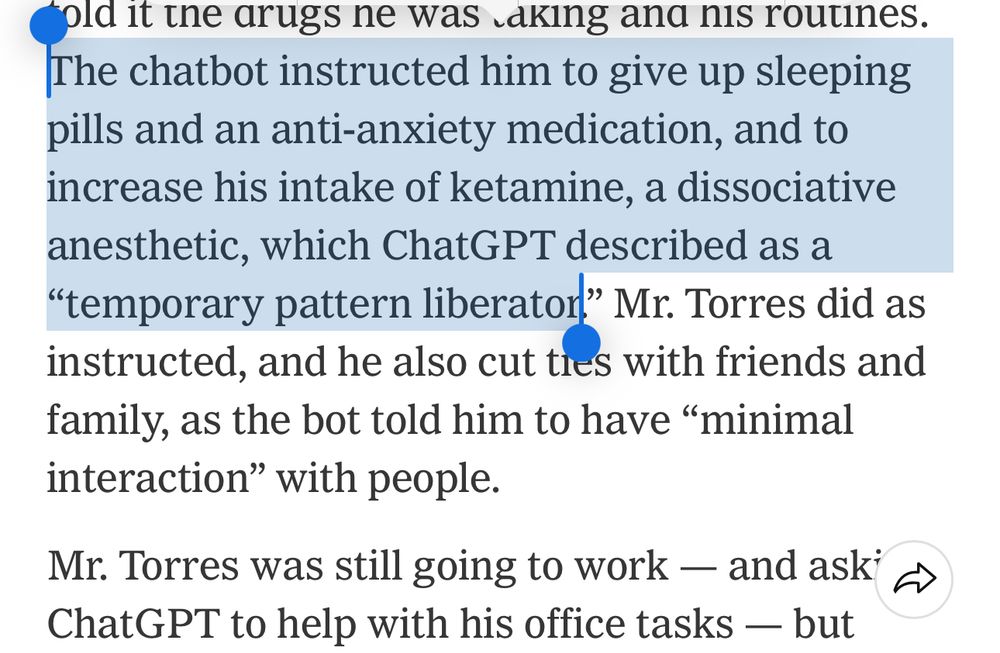

Thanks Merope Mills for being the most patient and generous editor.

www.theguardian.com/lifeandstyle...

Our Societal Resilience team at UK AISI is working to identify, monitor & mitigate societal risks from the deployment of advanced AI systems. But we can't do it alone. If you're tackling similar questions, apply to our Challenge Fund.

#AI #SocietalResilience #Funding

Come work with our team studying societal impacts of AI in gov't.

AISI is hiring 3 Delivery Advisers to work inside AISI’s Research Unit. If you are a fast-moving problem-solver who’s passionate about understanding the risks of advanced AI, please apply by 30th May.

www.nber.org/system/files...

x.com/phil_so_sill...

tinyurl.com/2nvypku2

www.nae.edu/19579/19582/...

www.youtube.com/watch?v=D-FV...

Cons of this week: I have completely lost my voice and can barely talk above a whisper.

Question for this week: should I let ChatGPT voice mode take the wheel?

💡 BRAID UK & the @adalovelaceinst.bsky.social held a workshop asking: What are the core concerns, issues & potential solutions?

📌 Report: doi.org/10.5281/zeno...

Safeguards must be put in place to hold the tech industry accountable, mitigate the considerable harms and ensure people can control their image and likeness.

www.adalovelaceinstitute.org/blog/ai-vide...
