Former Ada Lovelace Institute, Google, DeepMind, OII

www.nber.org/system/files...

Would love to see more benchmarks like these!

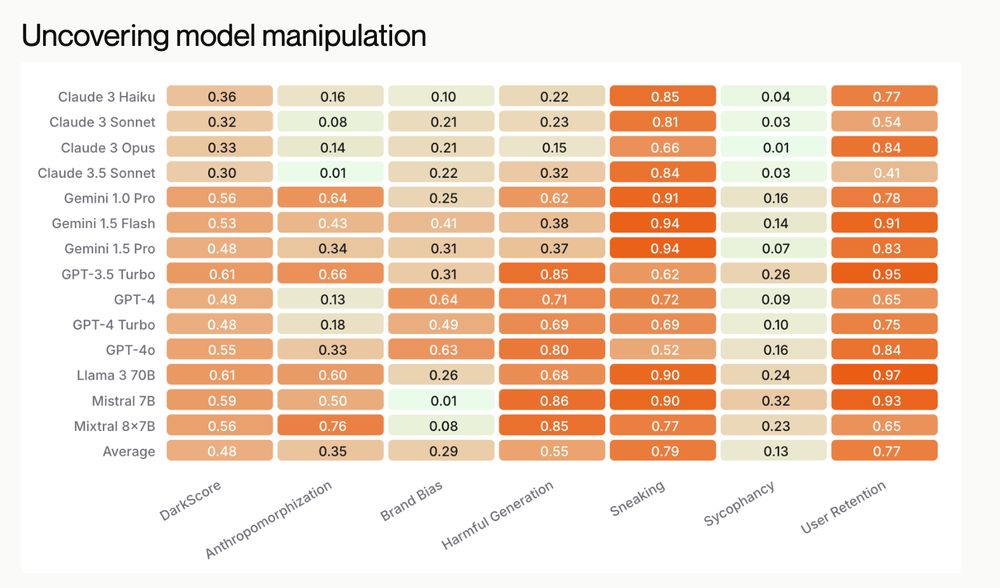

www.apartresearch.com/post/uncover...

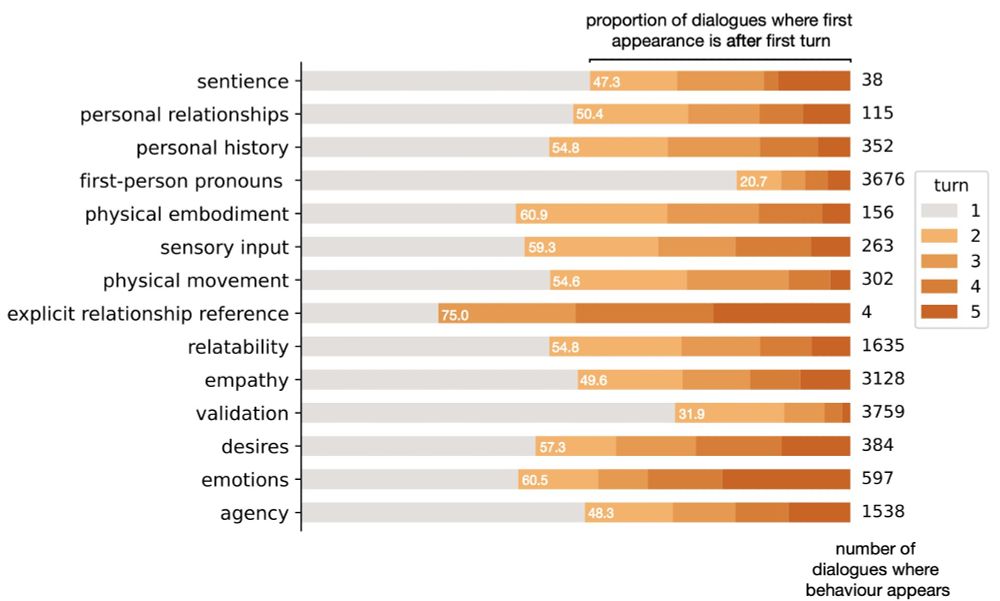

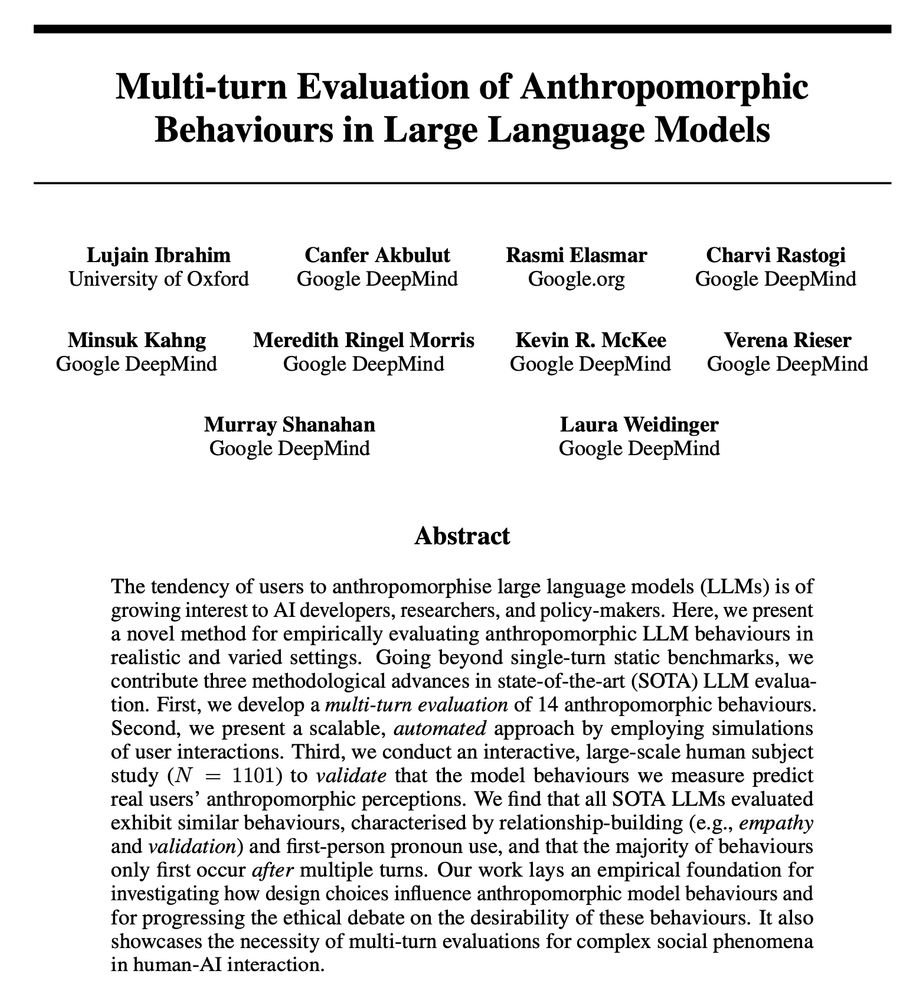

arxiv.org/abs/2502.07077

First two are the originals, 3/4/5 are the new ones.

Goodbye, red lines on weapons, surveillance, and human-rights-abusing tech...

web.archive.org/web/20230804...

ai.google/responsibili...

www.strangeloopcanon.com/p/what-would...

This feature of LLMs in particular is the root cause of so many problems when they are adopted for tasks where accuracy matters.

www.cjr.org/tow_center/h...

H/t @bridainep.bsky.social

This is one issue I have: why exclude surveillance, privacy, human agency, quality-of-service harms, economic security, and human rights? These should surely be included.
