@david-g-clark.bsky.social and Ashok Litwin-Kumar! Timely too, as “low-D manifold” has been trending again. (If you read thru the end, we escape Flatland and return to the glorious high-D world we deserve.) www.biorxiv.org/content/10.6...

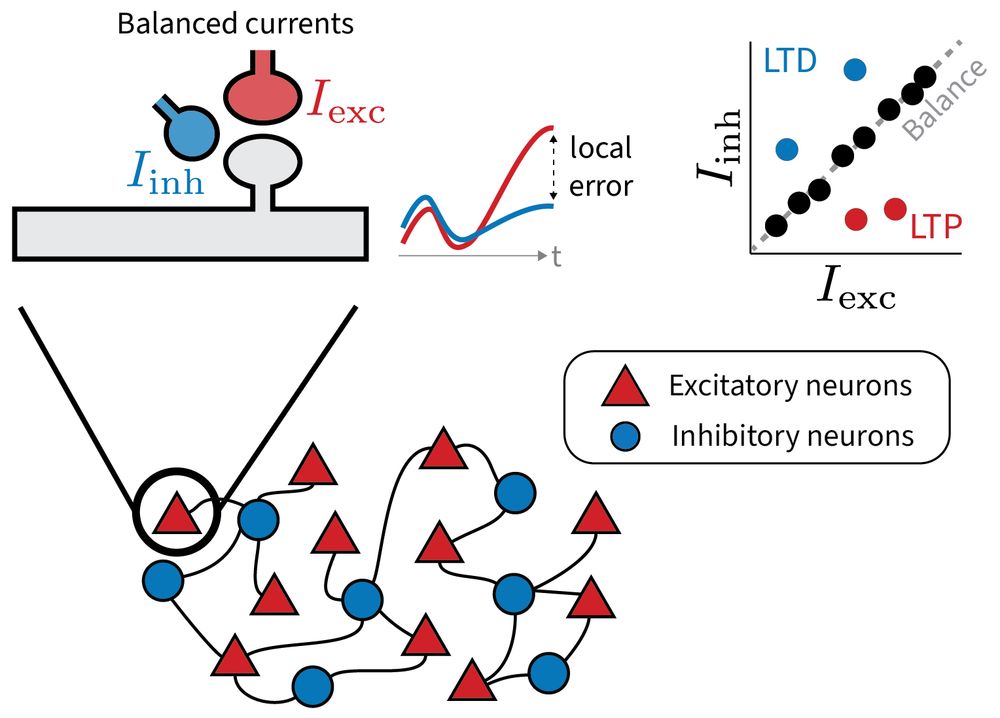

Our new preprint explores a provocative idea: small, targeted deviations from this balance may serve a purpose, namely encoding local error signals for learning.

www.biorxiv.org/content/10.1...

led by @jrbch.bsky.social

A short thread 🧵

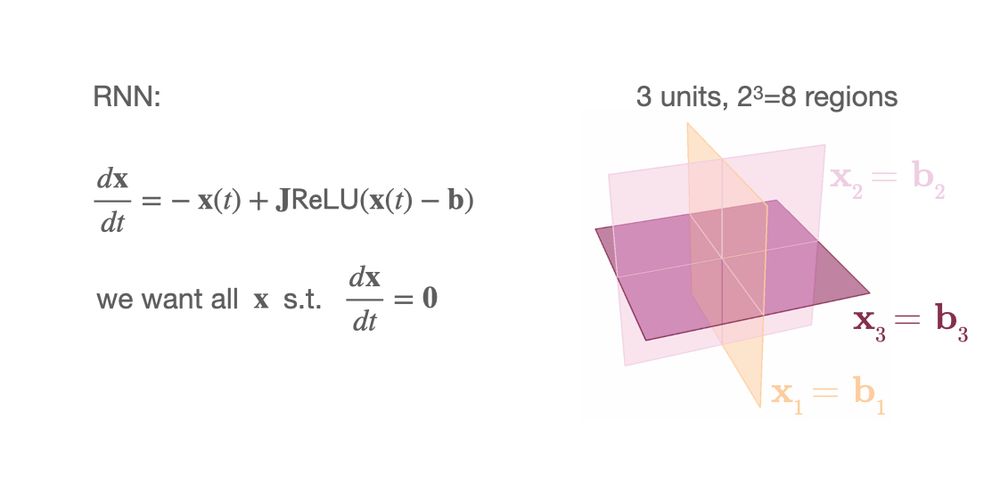

In RNNs with N units and ReLU(x-b) activations, the phase space is partitioned into 2^N regions by the N hyperplanes x_i = b. 1/7
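The partition can be checked numerically. The sketch below assumes rate dynamics of the form dx/dt = -x + W·ReLU(x - b) + I, with a random coupling matrix W and input I (hypothetical choices, not taken from the thread). It visits one point in each of the 2^N regions and confirms that, inside a region, the vector field reduces to an affine map in which a diagonal 0/1 matrix selects the active units.

```python
import itertools
import numpy as np

def relu_shift(x, b):
    """ReLU(x - b), applied elementwise."""
    return np.maximum(x - b, 0.0)

def region_code(x, b):
    """Binary pattern: which side of each hyperplane x_i = b the state lies on."""
    return tuple(int(v) for v in (x > b))

def rhs(x, W, b, I):
    """Assumed (hypothetical) rate dynamics: dx/dt = -x + W ReLU(x - b) + I."""
    return -x + W @ relu_shift(x, b) + I

def linear_rhs(x, W, b, I, code):
    """The same vector field written as an affine map valid inside one region."""
    D = np.diag(np.array(code, dtype=float))  # 1 for active units, 0 otherwise
    return -x + W @ D @ (x - b) + I

N, b = 3, 0.5
rng = np.random.default_rng(0)
W = rng.normal(size=(N, N))  # hypothetical random couplings
I = rng.normal(size=N)       # hypothetical constant input

# One representative point per region: each coordinate sits below or above b.
codes = set()
for signs in itertools.product([-1.0, 1.0], repeat=N):
    x = b + np.array(signs)  # b - 1 or b + 1 along each axis
    code = region_code(x, b)
    codes.add(code)
    # Inside a region the nonlinear field equals the region's affine map.
    assert np.allclose(rhs(x, W, b, I), linear_rhs(x, W, b, I, code))

print(len(codes))  # 2**N = 8 regions
```

Because each region has its own linear dynamics, the global behavior is stitched together from 2^N affine systems glued along the hyperplanes.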

In this paper with Haim Sompolinsky, we simplify and unify derivations for high-dimensional convex learning problems using a bipartite cavity method.

arxiv.org/abs/2412.01110

go.bsky.app/7VFUkdn

(also, I tried but couldn't remove my profile...)

tl;dr: exponentiated gradient (EG) respects Dale's law, produces weight distributions that match biology, and outperforms gradient descent (GD) in biologically relevant scenarios.

🧠📈 🧪
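A sign-preserving multiplicative update is one way to see why EG can respect Dale's law. The sketch below uses an assumed, simplified variant (not necessarily the exact rule in the preprint): each weight's magnitude is multiplied by exp(-lr·sign(w)·∂L/∂w), so no weight can cross zero, whereas an additive GD step can. The 8 excitatory / 2 inhibitory split and the toy objective are hypothetical.

```python
import numpy as np

def gd_step(w, grad, lr):
    """Plain gradient descent: additive update, free to push a weight through zero."""
    return w - lr * grad

def eg_step(w, grad, lr):
    """Assumed sign-preserving EG-style update: |w| is scaled by
    exp(-lr * sign(w) * grad), i.e. an exponentiated step on the magnitude,
    so positive weights stay positive and negative weights stay negative."""
    return w * np.exp(-lr * np.sign(w) * grad)

rng = np.random.default_rng(0)
# Hypothetical presynaptic population: 8 excitatory (w > 0), 2 inhibitory (w < 0).
w0 = np.concatenate([rng.uniform(0.1, 1.0, 8), -rng.uniform(0.1, 1.0, 2)])
signs0 = np.sign(w0)

# Toy objective 0.5 * ||w - target||^2 with a target of the opposite sign,
# i.e. the loss actively pulls every weight across zero.
target = -w0
w_gd, w_eg = w0.copy(), w0.copy()
for _ in range(50):
    w_gd = gd_step(w_gd, w_gd - target, lr=0.1)
    w_eg = eg_step(w_eg, w_eg - target, lr=0.1)

print("GD sign flips:", int(np.sum(np.sign(w_gd) != signs0)))  # GD sign flips: 10
print("EG sign flips:", int(np.sum(np.sign(w_eg) != signs0)))  # EG sign flips: 0
```

Under EG the weights shrink toward zero but never change sign, which is the property Dale's law requires; GD simply follows the gradient to the opposite-signed target.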