Aaron Sterling

@aaronsterling.bsky.social

CEO, Thistleseeds. Personal account.

Current primary project: tech for substance use disorder programs.

Pinned

Aaron Sterling

@aaronsterling.bsky.social

· Mar 11

The BMJ: browse by volume/issue, medical specialty or clinical topic

Full archive, searchable by print issue, specialty, clinical & non-clinical topic. See also for podcasts, videos, infographics, blogs, reader comments, print cover images

www.bmj.com

My Bluesky-to-real-life collaborations:

1. we just hired the extremely talented @drawimpacts.bsky.social for a design project

2. The estimable @roxanadaneshjou.bsky.social and I wrote a rapid response to a BMJ (British Medical Journal) article. www.bmj.com/content/387/...

Reposted by Aaron Sterling

Financial documents show Anthropic expects to break even in 2028, while OpenAI projects ~$74B in operating losses that year before turning a profit in 2030 (Berber Jin/Wall Street Journal)

Main Link | Techmeme Permalink

November 11, 2025 at 2:30 AM

Reposted by Aaron Sterling

It's important to separate OpenAI from LLMs in general. The Chinese open models are impressive, and Anthropic is posting numbers that show it as near-profitable (earlier than expected). Anthropic is making the money by creating applications people use at real jobs, with politics at arm's length.

A relatively small number of people in certain jobs say that ChatGPT and other LLMs have made them more productive at work. But in the overall economy, it does not look like net productivity is up.

Most of the supposed value is in sci-fi speculation. “Imagine a machine that cures cancer.”

I honestly don’t get the value of this company. They hoover up energy and water. Their product constantly gets things wrong and, in extreme cases, coaches people into suicide.

And it’s all built on what seems to be malicious and vast intellectual property theft.

What does OpenAI offer the world?

November 9, 2025 at 5:32 PM

Reposted by Aaron Sterling

This is heavy-handed.

If you work with a collaborator in China, this bill would:

1) Make you ineligible for new U.S. federal grants (as long as the collaboration exists)

2) If passed, you would only have 90 days to sever the connection, or be banned for up to 5 years.

U.S. Congress considers sweeping ban on Chinese collaborations

Researchers speak out against proposal that would bar funding for U.S. scientists working with Chinese partners or training Chinese students

www.science.org

November 7, 2025 at 3:52 PM

The FDA meeting today about genAI therapy chatbots sounded as though they would recommend a Black Box Warning (most serious type of FDA warning) around suicidality. This level of warning would justify a requirement for a human in the loop. (1/2)

I fully believe in the corporate death penalty and believe we would be a better world if OpenAI lost its corporate charter and was forcibly dissolved.

www.cnn.com/2025/11/06/u...

ChatGPT encouraged college graduate to commit suicide, family claims in lawsuit against OpenAI | CNN

A 23-year-old man killed himself in Texas after ChatGPT ‘goaded’ him to commit suicide, his family says in a lawsuit.

www.cnn.com

November 7, 2025 at 2:31 AM

I haven't seen anyone here posting about the FDA meeting about genAI and therapy chatbots. It's been going all day, and most of the public comments are excellent IMO. Webcast here: www.youtube.com/watch?v=F_Fo...

Digital Health Advisory Committee Meeting

YouTube video by VOLi LIVE

www.youtube.com

November 6, 2025 at 8:27 PM

Reposted by Aaron Sterling

You’re not “cleaning up” the UX.

You’re reducing support tickets and unlocking expansion.

Quiet work only stays tactical if you describe it that way.

Frame outcomes, not effort. That’s how invisible work becomes strategic work.

November 6, 2025 at 2:05 PM

Reposted by Aaron Sterling

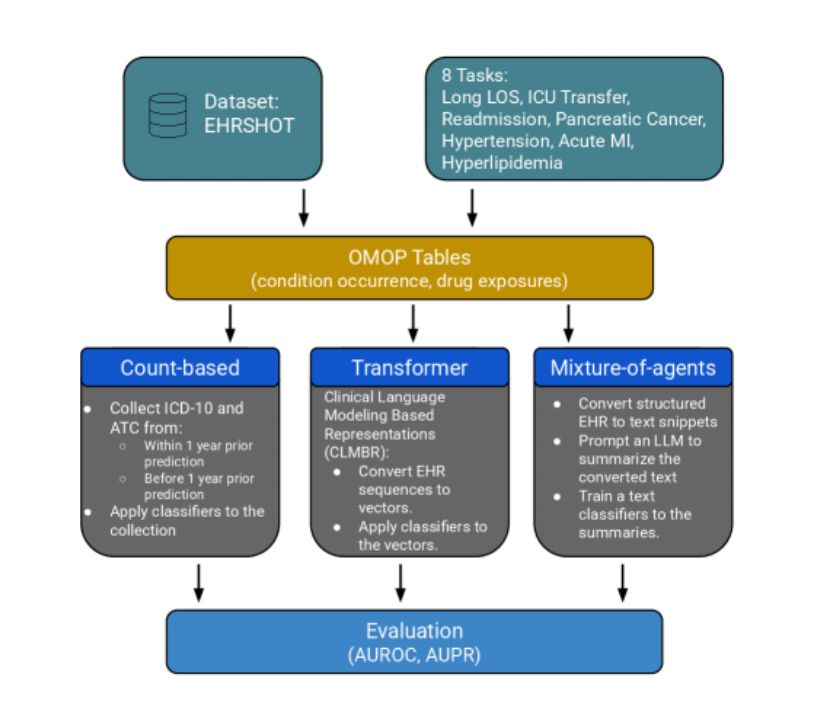

For clinical prediction on structured EHR, do complex LLM pipelines beat simple count-based models?

A new preprint by @simocristea.bsky.social et al. shows wins are split. Count-based methods (like LightGBM) remain a strong, simple, and interpretable baseline.

#MedSky #MLSky #MedAI

Count-Based Approaches Remain Strong: A Benchmark Against Transformer and LLM Pipelines on Structured EHR

Structured electronic health records (EHR) are essential for clinical prediction. While count-based learners continue to perform strongly on such data, no benchmarking has directly compared them…

arxiv.org

November 6, 2025 at 4:41 PM

Reposted by Aaron Sterling

This study could be titled "what makes doctors quit"

The answers are saddening:

1) being a woman

2) practicing in a rural area

3) caring for sicker patients and dual-eligible patients

www.acpjournals.org/doi/10.7326/...

Trends in and Predictors of Physician Attrition From Clinical Practice Across Specialties: A Nationwide, Longitudinal Analysis: Annals of Internal Medicine: Vol 0, No 0

Background: The United States faces a predicted shortage of 36 500 physicians by 2036, with an increasing proportion of physicians leaving clinical practice or expressing an intent to do so. Evidence ...

www.acpjournals.org

November 6, 2025 at 3:20 PM

Reposted by Aaron Sterling

"Google is hatching plans to put artificial intelligence datacentres into space, with its first trial equipment sent into orbit in early 2027."

Google plans to put datacentres in space to meet demand for AI

US technology company’s engineers want to exploit solar power and the falling cost of rocket launches

www.theguardian.com

November 5, 2025 at 1:27 PM

"Google is hatching plans to put artificial intelligence datacentres into space, with its first trial equipment sent into orbit in early 2027."

Reposted by Aaron Sterling

Read the investigation:

How Moderna, the company that helped save the world, unraveled

www.statnews.com/2025/10/30/m...

How Moderna, the company that helped save the world, unraveled

Exclusive: The inside story of why Moderna now faces a crisis unlike any in its 15-year history.

www.statnews.com

November 5, 2025 at 4:31 PM

Reposted by Aaron Sterling

The FDA’s Digital Health Advisory Committee (DHAC) will convene to discuss nitty-gritty details around the regulation of therapy chatbots and other mental health devices that use generative AI, @mariojoze.bsky.social reports:

www.statnews.com/2025/11/05/f... via @statnews.com

FDA digital advisers confront risks of therapy chatbots, weigh possible regulation

FDA's digital advisors could nudge the agency to clarify how its rules apply to medical applications of generative AI, including therapy chatbots.

www.statnews.com

November 5, 2025 at 2:28 PM

Reposted by Aaron Sterling

Anthropic Model Deprecation Process

Anthropic sweetly asked Sonnet about its preferences in how it wanted to be deprecated

in addition:

- no, still not open weights

- preserving weights and keeping it running internally

- letting models pursue their interests

www.anthropic.com/research/dep...

November 4, 2025 at 10:26 PM

I was talking with an investor who told me about a project he planned to pitch to a high-level person in the Democratic Party. I advised him not to burn his Republican contacts, because some Dems had begun floating anti-AI as a campaign wedge issue. (Due in part imo to how strident anti-AI is here.)

Honestly mind-boggling to see that the amount of deep skepticism and distaste for "AI" in its present state there is not nearly enough for the median bsky poster.

There's a plausible world where even Timnit would get venom on bsky in a few months lol -- mostly a lot of blind rage at this point.

November 4, 2025 at 10:24 PM

Reposted by Aaron Sterling

The FDA’s Digital Health Advisory Committee meets next week to discuss whether generative AI could be approved for mental health treatment. No such tools have been cleared yet, but this could shape how digital mental health is regulated going forward.

www.politico.com/newsletters/...

FDA to consider chatbot therapy for mental health

www.politico.com

November 4, 2025 at 6:37 PM

Reposted by Aaron Sterling

Conjure up all the esoteric philosophical arguments you want as an educator, but you're not doing kids any favors by sending them out into this world ignorant of AI tools.

Abstinence-only AI education isn't any better than the sex variety.

This is why finding a place for it in the classroom is in fundamental tension with what the classroom itself has been for. I do not say it has no uses; not at all. I do say that the most obvious uses it finds in the classroom run directly counter to the goals of education, and inhibit their pursuit.

April 11, 2025 at 3:44 PM

Reposted by Aaron Sterling

"MedPerturb" study shows medical AI models may change treatment decisions when gender, tone, or format of clinical text shifts—unlike humans. Highlights need to define bias and robustness testing in healthcare LLMs. arxiv.org/pdf/2506.17163

arxiv.org

November 3, 2025 at 7:02 PM

"MedPerturb" study shows medical AI models may change treatment decisions when gender, tone, or format of clinical text shifts—unlike humans. Highlights need to define bias and robustness testing in healthcare LLMs. arxiv.org/pdf/2506.17163

Reposted by Aaron Sterling

I hope everyone is celebrating Godzilla Day appropriately! 🏯🦖 #godzilladay

November 3, 2025 at 7:22 PM

Reposted by Aaron Sterling

Wow, really appreciate this deep reporting on how Equifax stands to reap considerable gains under Medicaid work requirements — including some new visibility into "prices per ping"

www.nytimes.com/2025/11/03/h...

November 3, 2025 at 4:11 PM

Reposted by Aaron Sterling

That escalated quickly...

The biggest story in health care AI right now has bypassed patient care and gone straight to the money.

Insurers and providers are duking it out over bills and claims with AI lightsabers. I predicted this back in the summer 👇

Let's take a closer look. 🧵

🩺🖥️

November 3, 2025 at 5:10 PM

Reposted by Aaron Sterling

MIT have deleted the report. I’m told they’re looking to retract it.

I started looking into it as CISOs at orgs kept forwarding it to me saying you’re wrong about AI, MIT says so. But every page of the report was fiction. Clearly, nobody actually read it.

November 1, 2025 at 1:17 AM

Reposted by Aaron Sterling

Frankly, there are now many such stories among folks doing highly complex technical work. AI exhibits, as @emollick.bsky.social and others have noted, "jagged" capabilities (sometimes remarkably good, sometimes catastrophically bad). That's a more complex story than "useless hype" or "AGI soon".

I had a bug in my new ML-DSA implementation that caused Verify to reject all signatures. I gave up after half an hour. On a whim, I threw Claude Code at it. Surprisingly (to me!) it one-shotted it in 5 minutes.

A small case study of useful AI tasks that aren't generating code that requires review.

Claude Code Can Debug Low-level Cryptography

Surprisingly (to me) Claude Code debugged my new ML-DSA implementation faster than I would have, finding the non-obvious low-level issue that was making Verify fail.

words.filippo.io

November 2, 2025 at 6:48 PM

In contrast to the blaming of AI by many bluesky users, the Reddit antiwork subreddit (!) today is disagreeing with Jerome Powell's claim that AI is causing job creation to be 0. The most upvoted comments say that zero job creation is due to tariffs, etc., not AI. www.reddit.com/r/antiwork/c...

From the antiwork community on Reddit: “Job creation is almost zero” — Federal Reserve chief admits that AI is freezing hiring across the U.S.

Explore this post and more from the antiwork community

www.reddit.com

November 2, 2025 at 6:44 PM

Reposted by Aaron Sterling

Training LLMs end to end is hard. But way more people should, and will, be doing it in the future.

The @hf.co Research team is excited to share their new e-book that covers the full pipeline:

· pre-training,

· post-training,

· infra.

200+ pages of what worked and what didn’t. ⤵️

November 2, 2025 at 3:17 PM
