✅ Robotics: human interaction simulation

✅ AV: building better pedestrian models for ADAS

✅ AI labs: scalable synthetic body data

✅ Healthcare: shape and pose tracking for remote monitoring

These are real production needs, not theory!

The author of SAM and SAM-2, Nikhila Ravi, is one of our amazing keynote speakers this morning at #WiCV

@cvprconference.bsky.social

During her talk, she shared what’s next! 🚀

#CVPR2025 #WomenInCV

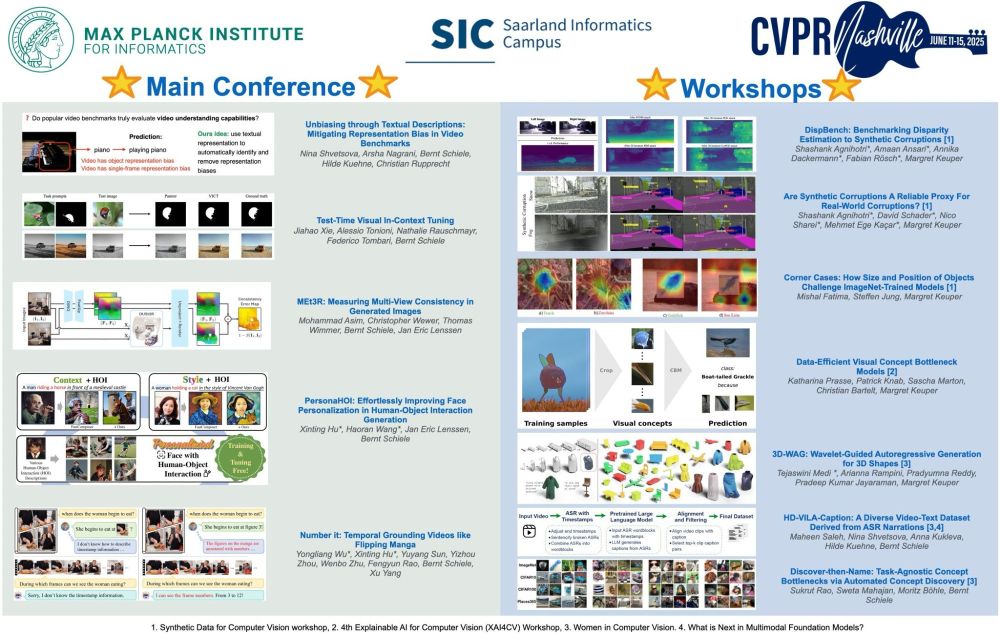

👏 Huge congrats to our members on these workshop paper acceptances! Excited to see their work at #CVPR2025 🌟

#MPI-INF #D2 #Workshop #AI #ComputerVision #PhD

@mpi-inf.mpg.de

👏 Big congrats to everyone! Keep an eye out at #CVPR2025 🌟

#MPI-INF #D2 #ComputerVision #AI #PhD #ML

@mpi-inf.mpg.de

🗓️ June 12 • 📍 Room 202B, Nashville

Meet our incredible lineup of speakers covering topics from agile robotics to safe physical AI at: opendrivelab.com/cvpr2025/tut...

#cvpr2025

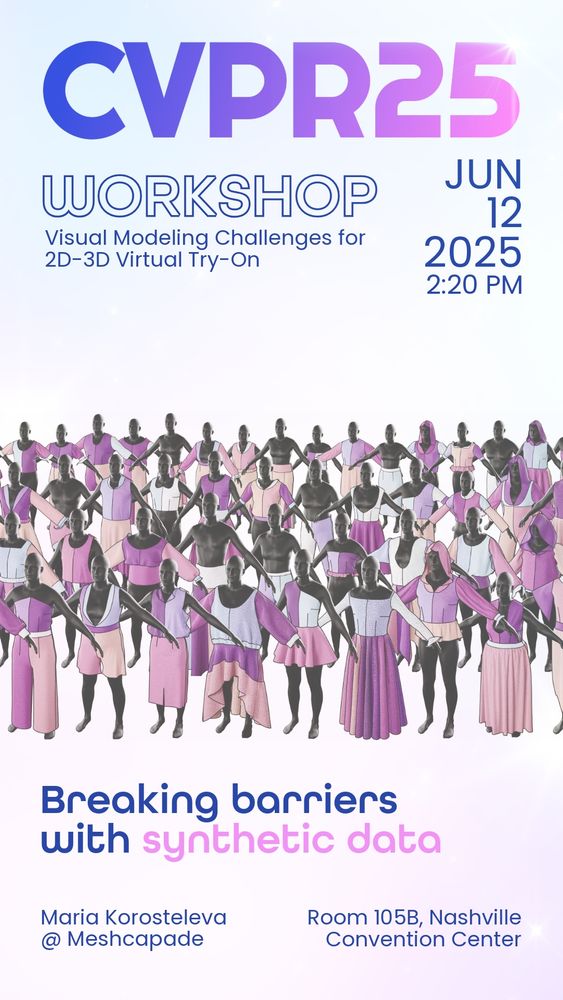

Want to create 3D garments faster and at scale?

Catch Maria Korosteleva at the Virtual Try-On Workshop on June 12, 2:20PM CDT (Room 105B) to learn how synthetic data is changing the game!

🔗 vto-at-cvpr25.github.io

#SMPL #AI #3DGarment #SyntheticData

We turn SAM2 into a semantic few-shot segmenter:

🧠 Unlocks latent semantics in frozen SAM2

✏️ Supports any prompt: fast and scalable annotation

📦 No extra encoders

📎 github.com/ClaudiaCutta...

We introduce a simple yet effective method for improved audio-visual learning.

🔗 Project: edsonroteia.github.io/cav-mae-sync/

🧵 (1/7)👇

We’re excited to announce that our paper "𝗕𝗲𝗻𝗰𝗵𝗺𝗮𝗿𝗸𝗶𝗻𝗴 𝗢𝗯𝗷𝗲𝗰𝘁 𝗗𝗲𝘁𝗲𝗰𝘁𝗼𝗿𝘀 𝘂𝗻𝗱𝗲𝗿 𝗥𝗲𝗮𝗹-𝗪𝗼𝗿𝗹𝗱 𝗗𝗶𝘀𝘁𝗿𝗶𝗯𝘂𝘁𝗶𝗼𝗻 𝗦𝗵𝗶𝗳𝘁𝘀 𝗶𝗻 𝗦𝗮𝘁𝗲𝗹𝗹𝗶𝘁𝗲 𝗜𝗺𝗮𝗴𝗲𝗿𝘆" has been 𝗮𝗰𝗰𝗲𝗽𝘁𝗲𝗱 𝗮𝘁 #CVPR2025! 🎉

A 🧵

5 papers accepted, 5 going live 🚀

Catch PromptHMR, DiffLocks, ChatHuman, ChatGarment & PICO at Booth 1333, June 11–15.

Details about the papers in the thread! 👇

#3DBody #SMPL #GenerativeAI #MachineLearning

We are working hard to bring you an amazing edition of this event, dedicated to increasing the visibility of female researchers in computer vision.

Stay tuned for interesting updates!💜

#WiCV #CVPR2025 #WomenInCV

Project: phermosilla.github.io/msm/

arXiv: arxiv.org/abs/2504.06719

GitHub: github.com/phermosilla/...

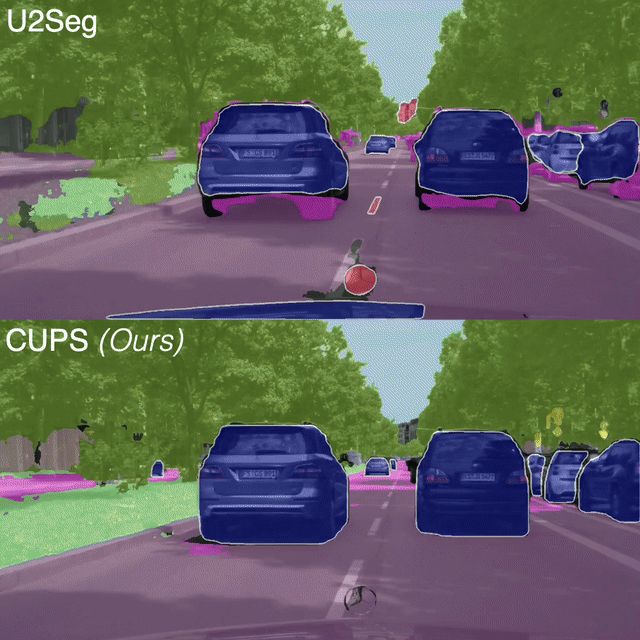

🌍 visinf.github.io/cups/

We present CUPS, the first unsupervised panoptic segmentation method trained directly on scene-centric imagery.

Using self-supervised features, depth & motion, we achieve SotA results!

🌎 visinf.github.io/cups

🎉Check out our #CVPR2025 paper: MOVIS (jason-aplp.github.io/MOVIS) 👇 (1/6)

Topics: 3D-VLA models, LLM agents for 3D scene understanding, Robotic control with language.

📢 Call for papers: Deadline – April 20, 2025

🌐 Details: 3d-llm-vla.github.io

#llm #3d #Robotics #ai

Check out DPRecon (dp-recon.github.io) at #CVPR2025 — it recovers all objects, achieves photorealistic mesh rendering, and supports text-based geometry & appearance editing. More details👇 (1/n)

🌐 cvpr25workshop.m-haris-khan.com

📝 Submission: cmt3.research.microsoft.com/DGEBF2025/ (incl. CVPR accepts)

📅 Deadline: 27 Mar 2025

@cvpr.bsky.social #CVPR2025 🚀! A breakdown of the 7 papers covering visual navigation, distillation, 3D reconstruction (remember #DUSt3R ☺️), localization & motion segmentation. europe.naverlabs.com/updates/cvpr/ 🧵 1/9 ⬇️

nianticlabs.github.io/morpheus/

Work by Jamie Wynn, Zawar Qureshi, Jakub Powierza, Jamie Watson, and myself.

🚀See you at #CVPR2025!🚀

(5/6)

Organized by #Embed2Scale, this challenge pushes the limits of lossy neural compression for geospatial analytics.

🔗 Join the challenge now: eval.ai/web/challeng...

euspa.bsky.social

🌐 sites.google.com/view/vlms4all

For more details check out cvg.cit.tum.de

Don’t miss her talk at the workshop. Looking forward to seeing you all there! #CVPR2025

Present your latest work on Open-World 3D Scene Understanding with Foundation Models🌍☀️

📆Paper Deadline: March 25

(4-page abstract or 8-page paper)

🔗OpenReview: openreview.net/group?id=thecvf.com/CVPR/2025/Workshop/OpenSUN3D

🏡Details: opensun3d.github.io
