15/🧵

14/🧵

13/🧵

11/🧵

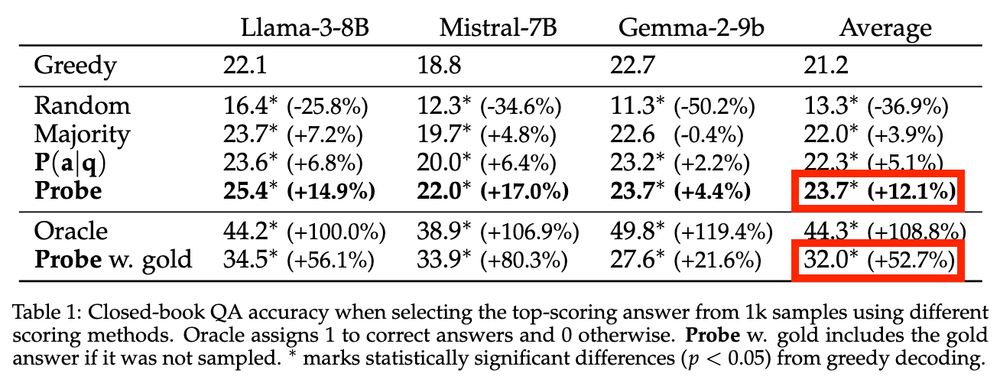

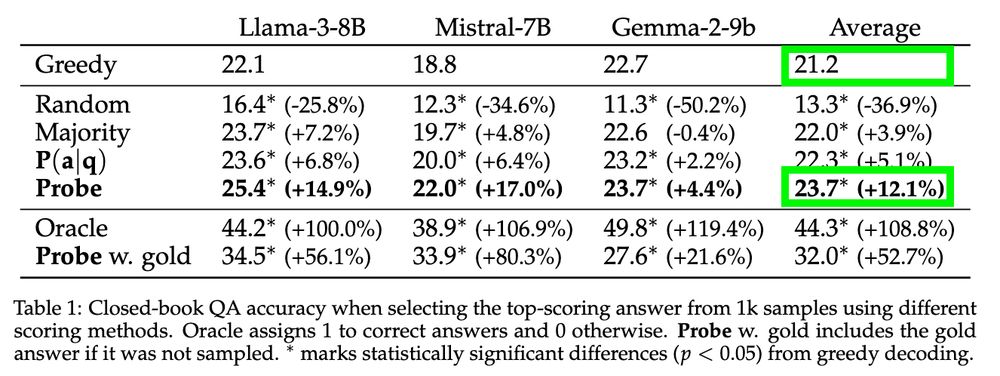

This highlights the need to understand these differences and build models that better use their knowledge, for which our framework serves as a foundation.

10/🧵

8/🧵

7/🧵

5/🧵

In our new paper, we clearly define this concept and design controlled experiments to test it.

1/🧵
