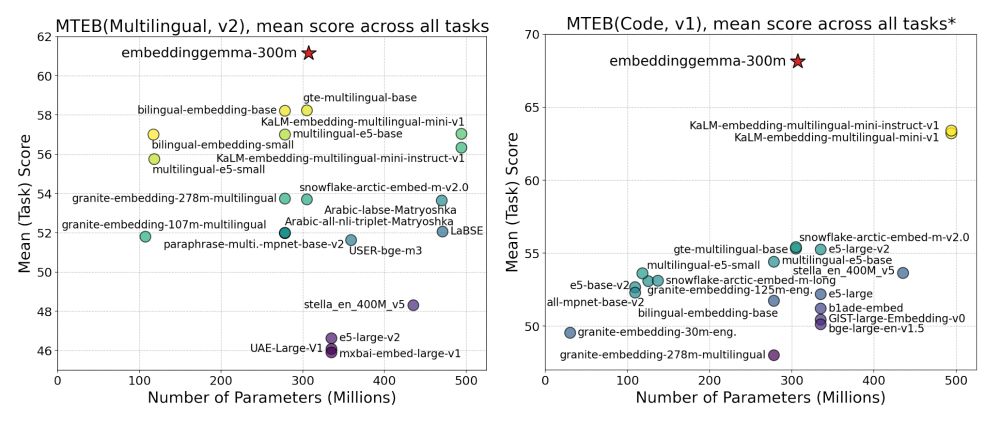

✦ SOTA on MTEB multilingual/English/code

✦ Minimal int8/int4 quantization degradation

✦ Graceful drop from 768d to 128d, still leading its class

✦ Cross-lingual: beats many larger open & API models
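The 768d to 128d reduction mentioned above is usually consumed by keeping a prefix of each vector and renormalizing it, which is how Matryoshka-style (MRL) embeddings are typically used. A minimal sketch, assuming the embeddings are MRL-trained so that the leading dimensions carry most of the signal. The toy 8-d vectors and the helper names `truncate_embedding` and `cosine` are illustrative and not part of any library.

```python
import math


def truncate_embedding(vec, dim):
    """Keep the first `dim` components and L2-normalize the result."""
    if not 0 < dim <= len(vec):
        raise ValueError("dim must be between 1 and len(vec)")
    head = list(vec[:dim])
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        return head  # nothing to normalize for an all-zero prefix
    return [x / norm for x in head]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 8-d vectors standing in for 768-d model outputs.
query = [0.9, 0.1, 0.3, 0.0, 0.05, -0.02, 0.01, 0.03]
doc = [0.8, 0.2, 0.25, 0.1, -0.04, 0.02, 0.0, 0.01]

full_score = cosine(query, doc)
small_score = cosine(truncate_embedding(query, 4), truncate_embedding(doc, 4))
print(f"full: {full_score:.4f}  truncated: {small_score:.4f}")
```

Renormalizing after truncation keeps dot product and cosine similarity interchangeable for the shorter vectors.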

✦ "Spread-out" regularizer for quantization robustness

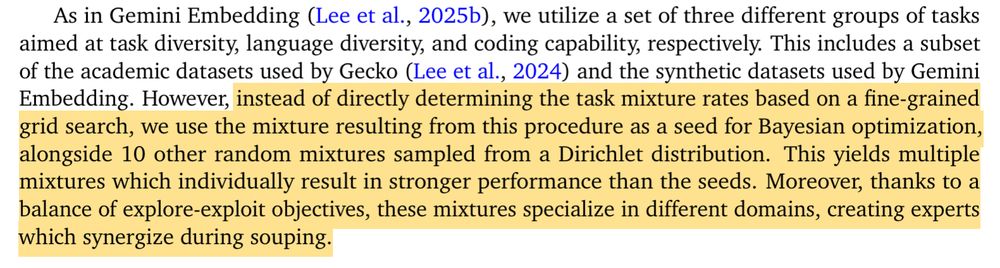

✦ Model souping across diverse data mixtures

✦ Quantization-aware training & MRL during finetuning
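Model souping, in its simplest form, means averaging the weights of several fine-tuned checkpoints that share one architecture. A minimal sketch of a uniform soup, assuming each checkpoint is a plain dict mapping parameter names to flat lists of floats. The post does not say which souping variant or weighting was used, so `uniform_soup` and the toy checkpoints are purely illustrative.

```python
def uniform_soup(checkpoints):
    """Average parameters element-wise across checkpoints with identical layouts."""
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    reference = checkpoints[0]
    for ckpt in checkpoints[1:]:
        if ckpt.keys() != reference.keys():
            raise ValueError("checkpoints must share the same parameter names")
        for name in reference:
            if len(ckpt[name]) != len(reference[name]):
                raise ValueError(f"shape mismatch for parameter {name!r}")
    count = len(checkpoints)
    souped = {}
    for name in reference:
        # zip(*...) groups the i-th value of this parameter from every checkpoint.
        columns = zip(*(ckpt[name] for ckpt in checkpoints))
        souped[name] = [sum(values) / count for values in columns]
    return souped


# Two toy checkpoints, e.g. fine-tuned on different data mixtures (made-up values).
mix_a = {"w": [1.0, 2.0], "b": [0.0]}
mix_b = {"w": [3.0, 4.0], "b": [1.0]}
soup = uniform_soup([mix_a, mix_b])
print(soup)
```

With real models the same loop would run over framework tensors instead of Python lists.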

✦ Turn Gemma 3 into an encoder-decoder LLM using the UL2 objective (like GemmaT5)

✦ Keep the encoder, pre-finetune it on a large unsupervised dataset, then finetune on retrieval datasets with hard negatives

✦ Embedding distillation from Gemini Embedding
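Finetuning on retrieval data with hard negatives is commonly done with a contrastive (InfoNCE-style) loss, where the query must score its positive passage above the negatives. A minimal sketch under that assumption. The post does not specify the loss, so the `info_nce` helper, the temperature of 0.05, and the toy unit vectors are illustrative choices rather than the authors' recipe.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def info_nce(query, positive, negatives, temperature=0.05):
    """-log softmax probability of the positive among positive + negatives."""
    logits = [dot(query, positive) / temperature]
    logits += [dot(query, neg) / temperature for neg in negatives]
    # Log-sum-exp with the max subtracted for numerical stability.
    peak = max(logits)
    log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_denominator - logits[0]


# Toy unit vectors (assumed already L2-normalized).
query = [1.0, 0.0]
positive = [0.8, 0.6]
hard_negative = [0.6, 0.8]   # close to the query, but not a match
easy_negative = [-1.0, 0.0]  # far from the query

loss_hard = info_nce(query, positive, [hard_negative])
loss_easy = info_nce(query, positive, [easy_negative])
print(f"hard negative loss: {loss_hard:.4f}")
print(f"easy negative loss: {loss_easy:.6f}")
```

The hard negative yields a larger loss than the easy one, which is why mined hard negatives give a stronger training signal than random ones.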

✦ 300M parameter text embedding model

✦ derived from Gemma 3

✦ SOTA on multilingual, English & code retrieval tasks

✦ matching performance of models 2x its size

✦ supports dimensionality reduction

✦ comes with quantization-aware checkpoints

We've prepared a quick guide where we bootstrap a minimal MCP-powered chat agent with just a few lines of code:

www.zeta-alpha.com/post/build-m...

Register to receive your Zoom webinar invitation, or watch our live stream on YouTube and LinkedIn: lu.ma/trends-in-ai...

Register on Luma to receive your webinar invitation, or watch our live stream on YouTube & LinkedIn:

lu.ma/trends-in-ai...

Don't miss out on our two talks today!

• RAG from Text & Images Using OpenSearch and Vision Language Models @ 14:55 - sched.co/1uxsf

• How to Search 1 Billion Vectors in OpenSearch Without Losing Your Mind or Wallet @ 16:35 - sched.co/1uxsu

Scaling vector search to a billion vectors is a high-stakes balancing act. This talk shares lessons learnt from building such a system in OpenSearch, covering model selection and hardware essentials.
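To see why model selection and hardware are coupled at this scale, it helps to estimate the raw storage for the vectors alone. A back-of-the-envelope sketch: the dimensions and value widths below are assumptions chosen for illustration (the talk's actual configuration is not stated here), and index structures, replicas, and metadata add overhead on top.

```python
GIB = 1024 ** 3


def raw_vector_bytes(num_vectors, dim, bytes_per_value):
    """Bytes needed to store the vector values only, with no index overhead."""
    return num_vectors * dim * bytes_per_value


# Hypothetical configurations: (dimension, bytes per value).
configs = {
    "768d float32": (768, 4),
    "768d int8": (768, 1),
    "128d int8": (128, 1),
}

for name, (dim, width) in configs.items():
    size = raw_vector_bytes(1_000_000_000, dim, width)
    print(f"{name}: {size / GIB:,.0f} GiB")
```

Under these assumptions, smaller dimensions and narrower value types cut the footprint by more than an order of magnitude, which changes what hardware is needed.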

Learn how to incorporate Vision Language Models in a multimodal RAG pipeline with approaches like ColPali, enabling unprecedented accuracy for RAG on visually complex documents and presentations.
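ColPali scores a query against a page with ColBERT-style late interaction: every query token vector is matched to its best page patch vector, and those maxima are summed (often called MaxSim). A minimal sketch of that scoring step only, assuming the multi-vector embeddings have already been produced by the model. The `maxsim_score` helper and the toy 2-d vectors are illustrative.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def maxsim_score(query_vectors, page_vectors):
    """Sum over query tokens of the best dot product with any page patch."""
    if not page_vectors:
        raise ValueError("page must have at least one patch vector")
    return sum(
        max(dot(q, patch) for patch in page_vectors)
        for q in query_vectors
    )


# Toy multi-vector embeddings (made-up values).
query_tokens = [[1.0, 0.0], [0.0, 1.0]]
page_patches = [[1.0, 0.0], [0.5, 0.5]]

score = maxsim_score(query_tokens, page_patches)
print(score)
```

Pages are then ranked by this score, so a page only needs one well-matching patch per query token to rank highly.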

- A global [CLS] embedding for the entire document.

- Individual chunk embeddings through mean-pooling.

- A unified embedding from averaging all token embeddings across chunks.
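The three embedding types listed above can be sketched from one set of token embeddings. This is a minimal illustration that assumes the [CLS] vector sits at token position 0 and that chunks are given as half-open token index ranges. The `document_embeddings` helper, the span format, and the toy vectors are hypothetical and not taken from the original work.

```python
def mean_pool(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    if not vectors:
        raise ValueError("cannot pool an empty list of vectors")
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def document_embeddings(token_vectors, chunk_spans):
    """Return (global CLS embedding, per-chunk embeddings, unified embedding)."""
    cls_embedding = token_vectors[0]  # assumption: [CLS] is the first token
    chunk_embeddings = [
        mean_pool(token_vectors[start:end]) for start, end in chunk_spans
    ]
    # Unified embedding: average every token in every chunk, not the chunk means.
    all_chunk_tokens = [
        vec for start, end in chunk_spans for vec in token_vectors[start:end]
    ]
    unified_embedding = mean_pool(all_chunk_tokens)
    return cls_embedding, chunk_embeddings, unified_embedding


# Toy 2-d token embeddings: position 0 is [CLS], followed by three content tokens.
tokens = [[9.0, 9.0], [1.0, 0.0], [3.0, 0.0], [0.0, 2.0]]
spans = [(1, 3), (3, 4)]  # two chunks of unequal length

cls_emb, chunk_embs, unified_emb = document_embeddings(tokens, spans)
print(cls_emb, chunk_embs, unified_emb)
```

When chunks differ in length, the unified embedding is not the same as the mean of the chunk embeddings, because longer chunks contribute more tokens to the average.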

4. Filtering retrieval datasets improves multilingual retrieval.

5. Including hard negatives is beneficial, yet adding more beyond a certain point offers diminishing returns.

1. English-only training already exhibits both strong multilingual and cross-lingual retrieval performance.

2. Multilingual fine-tuning improves performance on long-tail languages in XTREME-UP.
