www.zeta-alpha.com/post/zeta-al...

Enjoy Discovery!

👉 lu.ma/trends-in-ai...

🔒 Secure AI infrastructure & data sovereignty

🧱 Guardrails & moderation for LLM outputs

💥 Jailbreaks & prompt injections in the wild

📜 Regulation such as SB 53, and ISO/IEC 42001 compliance

🧠 Information integrity & the “AI workslop” productivity killer

search.zeta-alpha.com/documents/4b...

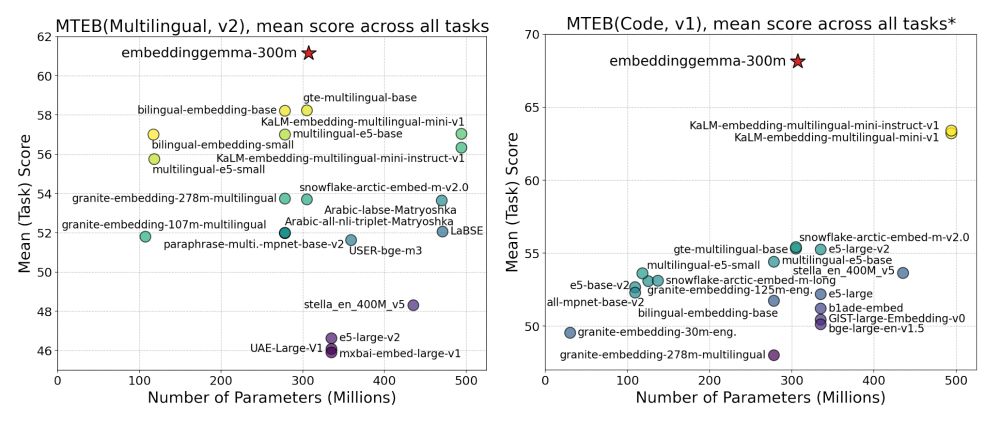

✦ SOTA on MTEB multilingual/English/code

✦ Minimal quality loss under int8/int4 quantization

✦ Degrades gracefully when truncated from 768 to 128 dimensions, still leading its size class

✦ Cross-lingual: beats many larger open & API models
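The 768d → 128d result relies on Matryoshka-style (MRL) training: the leading coordinates carry most of the signal, so a full vector can be cut to its first k coordinates and renormalized before computing cosine similarity. A minimal sketch in plain Python, assuming the model hands you a list of floats; the helper names are hypothetical and not part of any EmbeddingGemma API.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def truncate_embedding(vec, dim):
    """Keep the first `dim` coordinates and renormalize (MRL-style truncation)."""
    if not 0 < dim <= len(vec):
        raise ValueError(f"dim must be in 1..{len(vec)}, got {dim}")
    return l2_normalize(vec[:dim])


def cosine(a, b):
    """For unit-length vectors, cosine similarity is just the dot product."""
    return sum(x * y for x, y in zip(a, b))


# Stand-in for a real 768d embedding (synthetic values, not model output).
full = l2_normalize([float((i * 7) % 11 - 5) for i in range(768)])
small = truncate_embedding(full, 128)
print(len(small))  # 128
```

Renormalizing after the cut matters: the truncated prefix no longer has unit norm, and dot-product search assumes it does.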

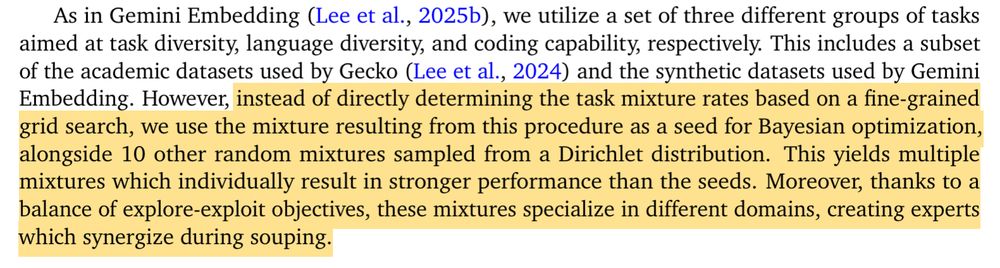

✦ "Spread-out" regularizer for quantization robustness

✦ Model souping across diverse data mixtures

✦ Quantization-aware training & Matryoshka Representation Learning (MRL) during finetuning
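Two of these tricks are easy to sketch. For the "spread-out" regularizer, the form below is an assumption: the global orthogonal regularizer from the descriptor-learning literature. It pushes the mean pairwise dot product of unit embeddings toward 0 and its second moment toward 1/d, the values for points spread uniformly on the sphere. The soup is a plain uniform average of checkpoints; how the actual recipe weights its data-mixture models is not stated in the post. Both functions are hypothetical illustrations in plain Python.

```python
def spread_out_penalty(embeddings):
    """Global-orthogonal-style penalty over all distinct pairs in a batch.

    Assumes every embedding is unit length and has the same dimension d.
    Returns mean(dot)^2 + max(0, mean(dot^2) - 1/d).
    """
    if len(embeddings) < 2:
        raise ValueError("need at least two embeddings")
    d = len(embeddings[0])
    n = len(embeddings)
    dots = [
        sum(a * b for a, b in zip(embeddings[i], embeddings[j]))
        for i in range(n)
        for j in range(i + 1, n)
    ]
    m1 = sum(dots) / len(dots)
    m2 = sum(s * s for s in dots) / len(dots)
    return m1 * m1 + max(0.0, m2 - 1.0 / d)


def uniform_soup(checkpoints):
    """Average parameters key by key across checkpoints of identical shape.

    Each checkpoint is a dict mapping parameter names to flat lists of floats.
    """
    keys = checkpoints[0].keys()
    if any(c.keys() != keys for c in checkpoints):
        raise ValueError("checkpoints must share the same parameter names")
    return {
        k: [sum(vals) / len(checkpoints) for vals in zip(*(c[k] for c in checkpoints))]
        for k in keys
    }


print(spread_out_penalty([[1.0, 0.0], [0.0, 1.0]]))  # 0.0 for orthogonal vectors
print(uniform_soup([{"w": [1.0, 3.0]}, {"w": [3.0, 5.0]}]))  # {'w': [2.0, 4.0]}
```

Collapsed embeddings (all pointing the same way) get the largest penalty, which is why spreading them out tends to leave more room for coarse int8/int4 grids.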

✦ Turn Gemma 3 into an encoder-decoder LLM using the UL2 objective (as in T5Gemma)

✦ Keep the encoder, pre-finetune it on a large unsupervised dataset, then finetune on retrieval datasets with hard negatives

✦ Embedding distillation from Gemini Embedding
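The finetuning stage above can be sketched as two losses: an InfoNCE contrastive loss where each query sees every in-batch positive plus its own mined hard negative, and an embedding-distillation term pulling student vectors toward the teacher's. The temperature of 0.05, the (1 - cosine) distillation form, and the assumption that teacher vectors are already at the student's dimensionality are all illustrative choices, not details from the post; the function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def info_nce_with_hard_negatives(queries, positives, hard_negatives, temperature=0.05):
    """Mean contrastive loss over the batch (all vectors assumed unit length).

    For query i the candidates are every positive in the batch (in-batch
    negatives) plus hard_negatives[i]; the correct candidate is positives[i].
    """
    total = 0.0
    for i, q in enumerate(queries):
        logits = [dot(q, p) / temperature for p in positives]
        logits.append(dot(q, hard_negatives[i]) / temperature)
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[i]
    return total / len(queries)


def embedding_distillation_loss(student, teacher):
    """Mean (1 - cosine) between paired unit-length student/teacher embeddings."""
    return sum(1.0 - dot(s, t) for s, t in zip(student, teacher)) / len(student)


qs = [[1.0, 0.0], [0.0, 1.0]]
print(info_nce_with_hard_negatives(qs, qs, [[0.0, 1.0], [1.0, 0.0]]))  # close to 0
```

Hard negatives sit next to the in-batch ones in the same softmax, so a negative that scores close to the positive dominates the gradient.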

✦ 300M parameter text embedding model

✦ Derived from Gemma 3

✦ SOTA on multilingual, English & code retrieval tasks

✦ Matches the performance of models twice its size

✦ Supports dimensionality reduction

✦ Comes with quantization-aware checkpoints
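"Quantization-aware" means the model saw low-precision weights during training, usually by fake-quantizing them (quantize, then immediately dequantize) in the forward pass. A minimal sketch of symmetric per-tensor fake quantization in plain Python; the actual scheme behind the EmbeddingGemma checkpoints is not given in the post, and real QAT typically uses per-channel or per-block scales plus a straight-through estimator for gradients, both omitted here. Function names are hypothetical.

```python
def quantize_symmetric(weights, bits):
    """Map floats to signed ints in [-(2**(bits-1) - 1), 2**(bits-1) - 1] with one scale."""
    if bits < 2:
        raise ValueError("bits must be at least 2")
    qmax = 2 ** (bits - 1) - 1  # 127 for int8, 7 for int4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [v * scale for v in q]


def fake_quantize(weights, bits):
    """Quantize then dequantize: the rounding error QAT trains the model to tolerate."""
    q, scale = quantize_symmetric(weights, bits)
    return dequantize(q, scale)


w = [-1.0, 0.5, 1.0]
print(quantize_symmetric(w, 4)[0])  # integer codes in [-7, 7]
```

Each weight moves by at most half a quantization step (scale / 2), and int4 steps are about 18x wider than int8 ones, which is why training against the rounding helps most at 4 bits.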
