We show how to identify training samples most vulnerable to membership inference attacks - FOR FREE, using artifacts naturally available during training! No shadow models needed.

Learn more: computationalprivacy.github.io/loss_traces/

Thread below 🧵
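A minimal sketch of the general idea, assuming per-sample training losses are logged once per epoch (the "artifact" here is each sample's loss trace). The scoring rule is an illustrative assumption: the interquartile range of a sample's loss trace, with larger spread treated as higher risk. It is not necessarily the exact method in the paper. The names `loss_trace_iqr` and `rank_by_vulnerability` are hypothetical.

```python
import statistics
from typing import Dict, List


def loss_trace_iqr(trace: List[float]) -> float:
    """Interquartile range of one sample's per-epoch losses (hypothetical score)."""
    q1, _, q3 = statistics.quantiles(trace, n=4, method="inclusive")
    return q3 - q1


def rank_by_vulnerability(traces: Dict[str, List[float]]) -> List[str]:
    """Return sample ids sorted from highest to lowest score.

    Assumption for illustration: a sample whose loss swings widely during
    training is flagged as more exposed to membership inference.
    """
    scores = {sid: loss_trace_iqr(t) for sid, t in traces.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Toy loss traces (one loss value per epoch), made up for illustration.
traces = {
    "a": [2.3, 1.1, 0.2, 0.05],  # starts hard, then gets fit very tightly
    "b": [0.4, 0.35, 0.3, 0.3],  # easy sample, loss barely moves
    "c": [1.0, 0.9, 0.8, 0.7],
}
print(rank_by_vulnerability(traces))  # ['a', 'c', 'b']
```

The appeal of this family of approaches is that the losses are already computed during training, so ranking samples costs almost nothing compared with training shadow models.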

A thread 🧵

Plz RT 🔄

Paper: arxiv.org/pdf/2312.051...

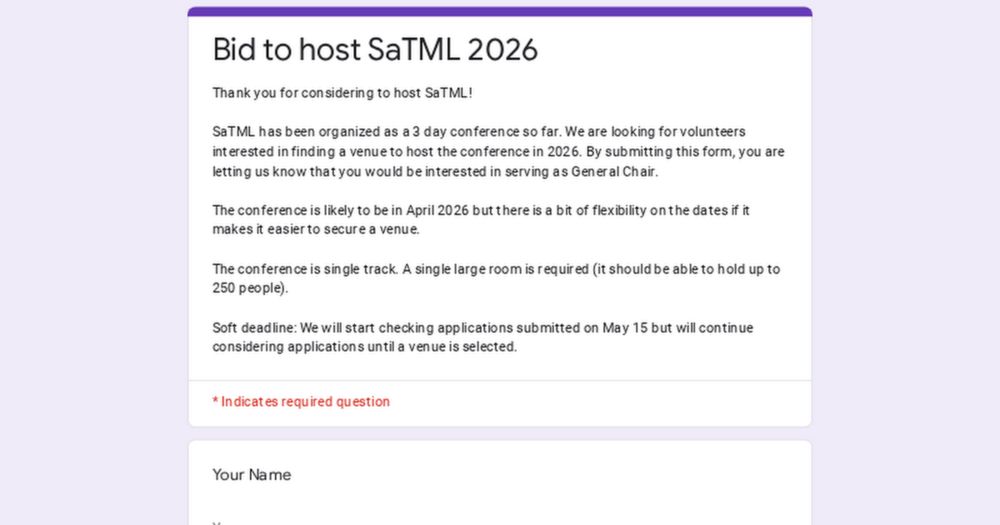

We are on the hunt for a 2026 host city - and you could lead the way. Submit a bid to become General Chair of the conference:

forms.gle/vozsaXjCoPzc...

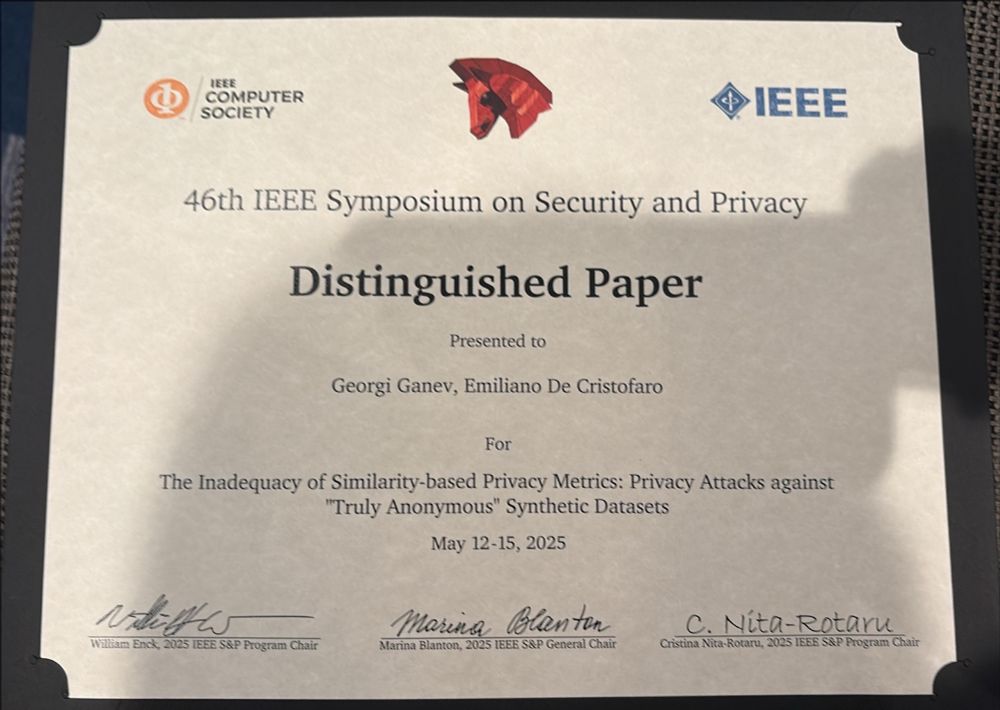

If your answer is “we checked Distance to Closest Record (DCR),” then… we might have bad news for you.

Our latest work shows DCR and other proxy metrics to be inadequate measures of the privacy risk of synthetic data.
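For readers unfamiliar with the metric, here is a minimal sketch of how DCR is commonly computed: for each synthetic record, the distance to its nearest record in the real training data. Plain Euclidean distance on already-numeric rows is an assumption here; real pipelines typically scale or encode features first. The function name `dcr` is illustrative.

```python
import math
from typing import List, Sequence


def dcr(synthetic: Sequence[Sequence[float]],
        real: Sequence[Sequence[float]]) -> List[float]:
    """Distance to Closest Record: for each synthetic row, the Euclidean
    distance to its nearest real (training) row. Brute force, O(n * m)."""
    return [min(math.dist(s, r) for r in real) for s in synthetic]


real = [(0.0, 0.0), (3.0, 4.0)]
synthetic = [(0.0, 0.0), (1.0, 1.0), (3.0, 5.0)]
# The first synthetic row is an exact copy of a real row, so its DCR is 0.0.
print(dcr(synthetic, real))
```

A DCR of 0 flags a verbatim copy, which is what the metric is designed to catch. The point of the work above is that a reassuring DCR value does not by itself show that synthetic data is private.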

I definitely used to think this, until we started looking into it two years ago.

A thread 🧵

On Tuesday April 8th, we have awesome privacy researcher @yvesalexandre.bsky.social visiting the group. Yves is a bold and creative scientist, and a former advisor to Margrethe Vestager.

Yves will give a talk at SODAS at 3pm that's open to the public (details below)

A thread 🧵:

🗓️ Date: Feb 4 @ 6pm

📍 Imperial College London

📅 February 4th, 6pm, Imperial College London

We are happy to announce the new date for the first Privacy in ML Meetup @ Imperial, bringing together researchers from across academia and industry.

RSVP: www.imperial.ac.uk/events/18318...

@yvesalexandre.bsky.social’s entire body of work is instructive here

We show how in the #NeurIPS2024 paper:

arxiv.org/abs/2407.02191

Short summary👇
