Paul Hagemann

@yungbayesian.bsky.social

PhD student at TU Berlin, working on generative models and inverse problems

he/him


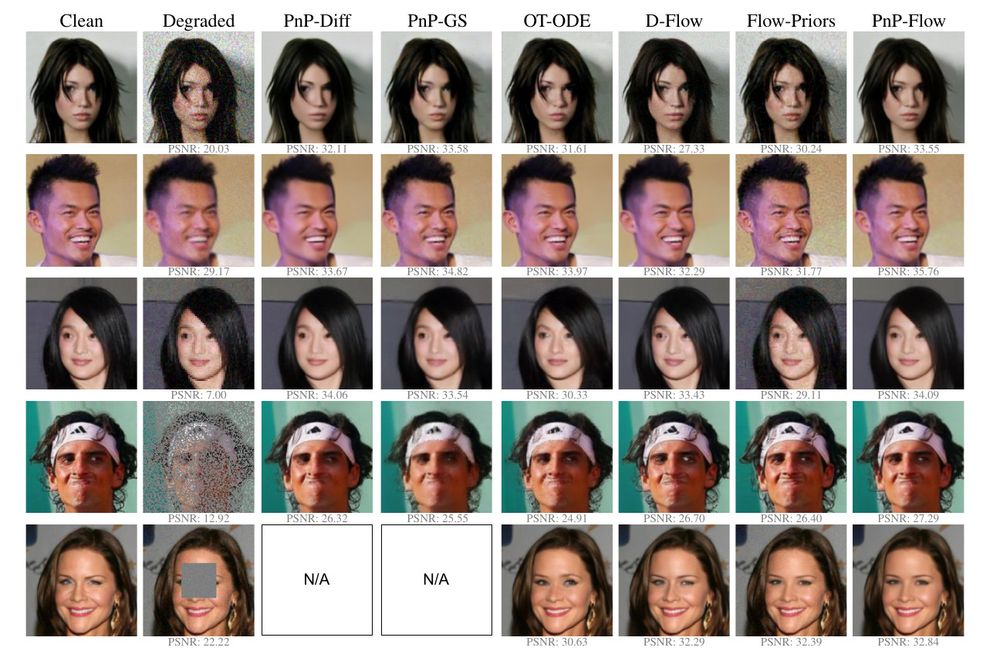

Compared to diffusion methods, we can handle arbitrary latent distributions and also get (theoretically) straighter paths! We evaluate on multiple image datasets against flow matching, diffusion, and standard PnP-based restoration methods!

January 23, 2025 at 11:00 AM


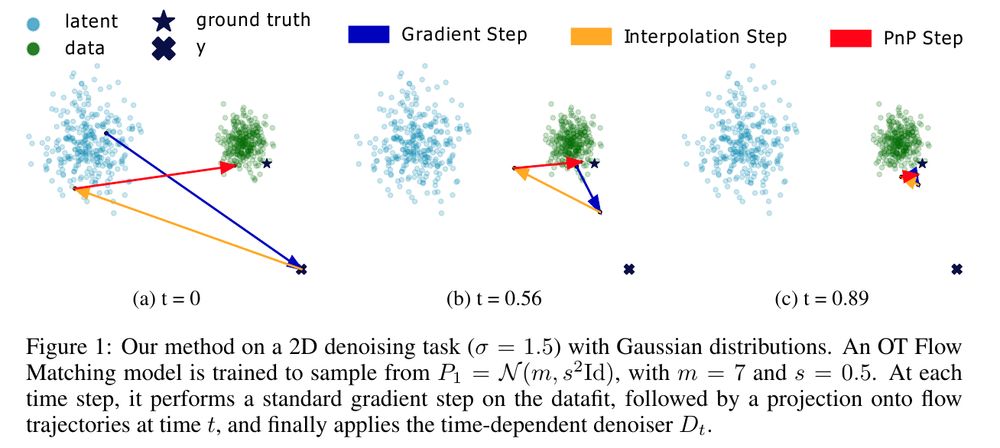

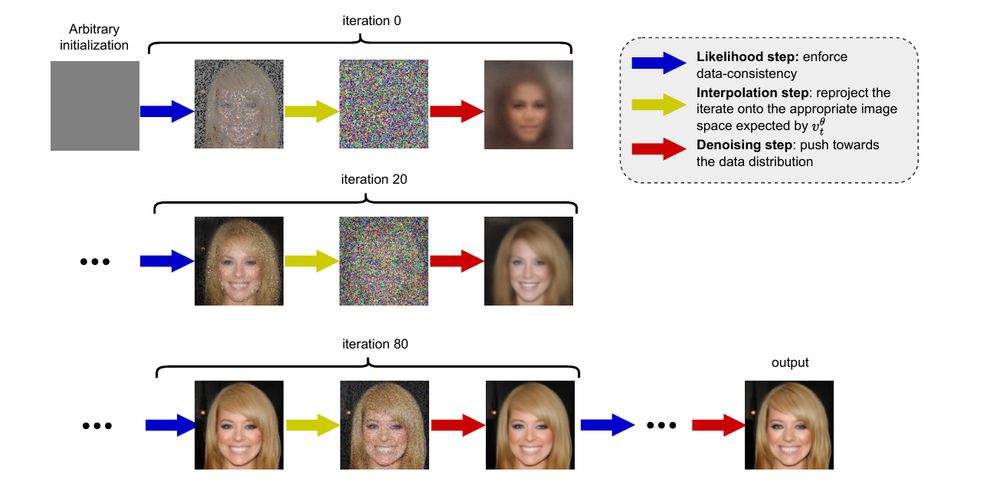

Therefore, we use the plug-and-play (PnP) framework and rewrite our velocity field (which predicts a direction) as a denoiser of the image x_t (i.e., it predicts the MMSE estimate of the clean image x_1). We then obtain a "time"-conditional PnP method, where we do the forward-backward PnP step at the current time and reproject.
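A minimal sketch of such a loop, under loud assumptions: straight-line flow matching paths x_t = (1 - t) x_0 + t x_1 (for which E[x_1 | x_t] = x_t + (1 - t) v(t, x_t) holds exactly), a toy Gaussian data model whose velocity is known in closed form standing in for a trained network, an inpainting operator, and a made-up step-size schedule. The names `velocity`, `denoiser`, `pnp_flow` and the step ordering (gradient step, reprojection, denoising) are illustrative choices, not the paper's reference code.

```python
import numpy as np

# Toy setting (assumption, for illustration only): data x_1 ~ N(M, S^2 I),
# latent x_0 ~ N(0, I), independent coupling, straight path x_t = (1 - t) x_0 + t x_1.
# In this Gaussian case E[x_1 | x_t] has a closed form, so no network is needed.
M, S = 2.0, 0.5


def mmse_x1(t, x):
    """Exact E[x_1 | x_t = x] in the toy Gaussian model."""
    var_t = (1 - t) ** 2 + (t * S) ** 2
    return M + (t * S**2 / var_t) * (x - t * M)


def velocity(t, x):
    """Stand-in for a pretrained field: v(t, x) = E[x_1 - x_0 | x_t = x], valid for t < 1."""
    return (mmse_x1(t, x) - x) / (1 - t)


def denoiser(t, x):
    """Velocity rewritten as a denoiser: D_t(x) = x + (1 - t) v(t, x) = E[x_1 | x_t = x]."""
    return x + (1 - t) * velocity(t, x)


def pnp_flow(y, mask, n_steps=100, seed=0):
    """Hypothetical time-conditional forward-backward loop for inpainting y = mask * x."""
    rng = np.random.default_rng(seed)
    x = np.zeros_like(y)
    for t in np.linspace(0.0, 1.0, n_steps, endpoint=False):
        gamma = 1.0 - t                          # assumed step-size schedule
        z = x - gamma * mask * (mask * x - y)    # forward: gradient step on 0.5 * ||mask * x - y||^2
        eps = rng.standard_normal(y.shape)
        z_t = (1 - t) * eps + t * z              # reproject onto the path at time t
        x = denoiser(t, z_t)                     # backward: denoise with D_t
    return x


# Usage: recover a toy signal from roughly 50% of its entries.
rng = np.random.default_rng(1)
x_true = M + S * rng.standard_normal(1000)
mask = (rng.random(1000) < 0.5).astype(float)
y = mask * x_true
x_hat = pnp_flow(y, mask)
```

The reprojection draws fresh latent noise at every step, so the output depends on `seed`; with a real model, `velocity` would be the pretrained flow matching network and the gradient step would use the actual forward operator.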

January 23, 2025 at 10:57 AM


Our paper "PnP-Flow: Plug-and-Play Image Restoration with Flow Matching" has been accepted to ICLR 2025. Here is a short explainer: we want to restore images (i.e., solve inverse problems) using pretrained velocity fields from flow matching. However, going through the change-of-variables formula is super costly.
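For context, a standard fact about continuous flows (not a formula taken from the paper): if samples are transported by dx_t/dt = v(t, x_t) from a latent x_0 ~ p_0 at t = 0 to the data side at t = 1, the model log-density requires the divergence of v integrated along the whole trajectory. Evaluating it, and differentiating through it inside a restoration objective, therefore means solving and backpropagating through an ODE with a trace term at every step:

```latex
\log p_1(x_1) = \log p_0(x_0) - \int_0^1 \nabla \cdot v(t, x_t)\,\mathrm{d}t,
\qquad \frac{\mathrm{d}x_t}{\mathrm{d}t} = v(t, x_t).
```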

January 23, 2025 at 10:53 AM


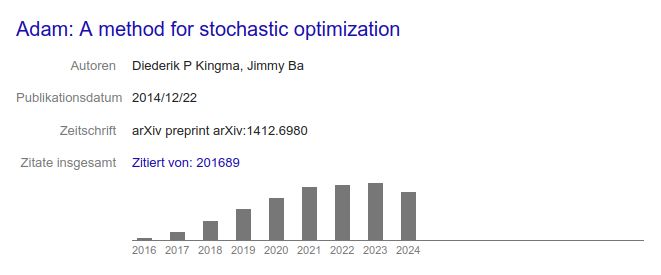

i guess the Adam paper is a pretty good indicator of how many ML papers are being published. looks like we have been saturating since 2021

November 28, 2024 at 10:16 AM


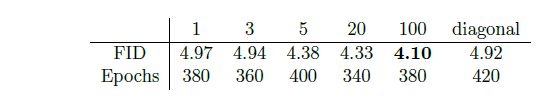

Here, one can see FID results for different values of beta! Indeed, it seems to be fruitful to restrict mass movement in Y for class-conditional CIFAR! We apply this to other interesting inverse problems as well; the article can be found at arxiv.org/abs/2403.18705

November 20, 2024 at 9:14 AM


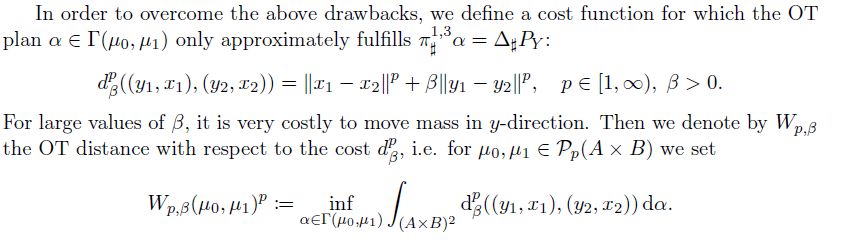

We want to approximate this distance with standard OT solvers, and therefore introduce a twisted cost function. With this at hand, we can now do OT flow matching for inverse problems! The factor beta controls how much mass leakage we allow in Y.
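A minimal sketch of how a twisted cost could be plugged into a standard discrete OT solver, assuming a cost of the form beta * ||y - y'||^2 + ||x - x'||^2 (our assumption; the paper's exact cost may differ) and equal-size minibatches with uniform weights, for which exact OT reduces to a linear assignment problem solved by `scipy.optimize.linear_sum_assignment`. The helpers `twisted_cost`, `ot_pairs` and `fm_batch` are hypothetical names.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def twisted_cost(y0, z0, y1, x1, beta):
    """Assumed twisted cost between source points (y0, z0) and target points (y1, x1):
    beta * ||y0 - y1||^2 + ||z0 - x1||^2. A large beta makes moving mass in Y expensive."""
    dy = ((y0[:, None, :] - y1[None, :, :]) ** 2).sum(axis=-1)
    dx = ((z0[:, None, :] - x1[None, :, :]) ** 2).sum(axis=-1)
    return beta * dy + dx


def ot_pairs(y0, z0, y1, x1, beta):
    """Exact OT plan between two uniform empirical measures of equal size.
    For such measures an optimal plan is a permutation, found here by linear assignment."""
    rows, cols = linear_sum_assignment(twisted_cost(y0, z0, y1, x1, beta))
    return rows, cols


def fm_batch(z0, x1, rows, cols, rng):
    """Straight-line flow matching inputs and targets on the OT-paired samples."""
    t = rng.random((len(rows), 1))
    x_t = (1 - t) * z0[rows] + t * x1[cols]
    return t, x_t, x1[cols] - z0[rows]       # velocity regression target


# Usage on a toy two-class problem (one-hot y, class-dependent 2-D data).
rng = np.random.default_rng(0)
n = 64
labels = np.repeat(np.arange(2), n // 2)
y1 = np.eye(2)[labels]                               # target conditions
x1 = rng.standard_normal((n, 2)) + 3.0 * labels[:, None]
y0 = np.eye(2)[rng.permutation(labels)]              # source conditions, same label counts
z0 = rng.standard_normal((n, 2))                     # latent samples
rows, cols = ot_pairs(y0, z0, y1, x1, beta=1e6)
t, x_t, v_target = fm_batch(z0, x1, rows, cols, rng)
```

With a large beta, the assignment keeps every source point in its own class, i.e., no mass leaks in Y; lowering beta lets the solver trade class mismatches for shorter moves in X. A conditional velocity network would then be regressed on `v_target` from `(t, x_t)` together with the condition.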

November 20, 2024 at 9:12 AM


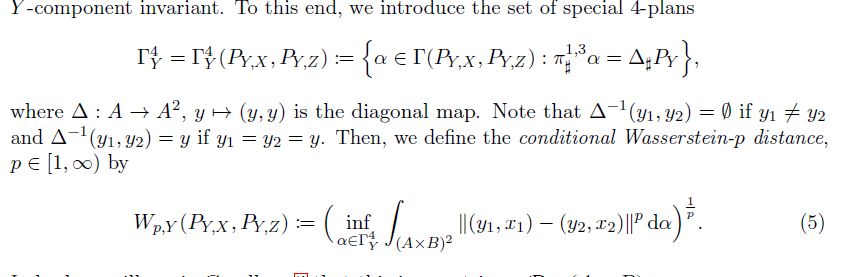

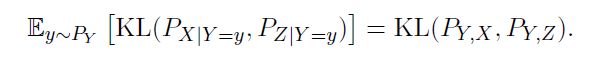

This object has already attracted some interest, e.g., it pops up in the theory of gradient flows. It generalizes the chain-rule property of KL quite nicely and unifies some ideas present in conditional generative modelling. For instance, its dual is the loss usually used in conditional Wasserstein GANs.
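A sketch of why the dual looks like a conditional WGAN objective, for p = 1, assuming both joints share the Y-marginal P_Y and mild measurability conditions (notation ours; see arxiv.org/abs/2403.18705 for the precise statement): applying Kantorovich-Rubinstein duality to each posterior separately yields a critic f(y, ·) that only needs to be 1-Lipschitz in x for every fixed y, with no Lipschitz constraint in y.

```latex
\mathbb{E}_{y \sim P_Y}\big[ W_1(P_{X|Y=y}, P_{Z|Y=y}) \big]
  = \sup_{\substack{f:\ f(y,\cdot)\ \text{1-Lipschitz} \\ \text{for every } y}}
    \mathbb{E}_{(y,x) \sim P_{Y,X}}\big[f(y,x)\big] - \mathbb{E}_{(y,z) \sim P_{Y,Z}}\big[f(y,z)\big].
```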

November 20, 2024 at 9:10 AM


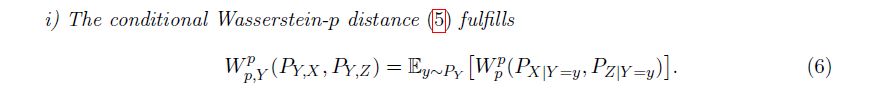

Now, does the same hold for the Wasserstein distance? Unfortunately not, since moving mass in the Y-direction can be more efficient for some measures. However, we can fix that by restricting the admissible couplings to ones that do not move mass in the Y-direction.
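One way to write this restriction down (notation chosen here for illustration): assume the data joint P_{Y,X} and the model joint P_{Y,Z} share the Y-marginal P_Y, and only allow couplings that keep y fixed.

```latex
\Gamma_Y = \big\{ \pi \in \Gamma(P_{Y,X}, P_{Y,Z}) :\ y_1 = y_2 \ \text{for } \pi\text{-a.e. } (y_1, x_1, y_2, x_2) \big\},
\qquad
W_{p,Y}^p(P_{Y,X}, P_{Y,Z}) = \inf_{\pi \in \Gamma_Y} \int \|x_1 - x_2\|^p \,\mathrm{d}\pi
  = \mathbb{E}_{y \sim P_Y}\big[ W_p^p(P_{X|Y=y}, P_{Z|Y=y}) \big].
```

Since \Gamma_Y is a subset of all couplings and restricted couplings pay nothing in Y, W_p(P_{Y,X}, P_{Y,Z}) \le W_{p,Y}(P_{Y,X}, P_{Y,Z}); any gap comes from couplings that move mass in the Y-direction.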

November 20, 2024 at 9:08 AM


In a somewhat recent paper, we introduced conditional Wasserstein distances. They generalize a property that basically explains why KL works well for generative modelling: the chain rule of KL!

It says that if one wants to approximate the posterior, one can also minimize the KL between the joint distributions (as long as their Y-marginals agree).
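Written out, this is the standard chain rule of KL, here for a model joint P_{Y,Z} that shares the Y-marginal P_Y with the data (notation ours). Driving the joint KL to zero therefore drives the posterior KL to zero for P_Y-almost every y:

```latex
\mathrm{KL}(P_{Y,X} \,\|\, P_{Y,Z})
  = \mathbb{E}_{y \sim P_Y}\big[ \mathrm{KL}(P_{X|Y=y} \,\|\, P_{Z|Y=y}) \big].
```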


November 20, 2024 at 9:07 AM
