EiCs Gautam Kamath (@gautamkamath.com) and Nihar Shah will be there -- if you are an AE or an Expert Reviewer, or have a Featured or Outstanding Certification, you can get a free TMLR laptop sticker! Locations ⬇️

But, what you really want to hear about is stats .... right? -> 🧵

This addition lets OpenAI scale RL by making more data available to train on, but it introduces new downstream problems to solve.

Looks about as simple as we would expect it to be, with lots of details to uncover.

Liu et al. Visual-RFT: Visual Reinforcement Fine-Tuning

buff.ly/DbGuYve

(posted a week ago, oops)

Promoting Obfuscation cdn.openai.com/pdf/34f2ada6...

Reinforcement Learning on the Base Model

github.com/Open-Reasone...

Nature is healing.

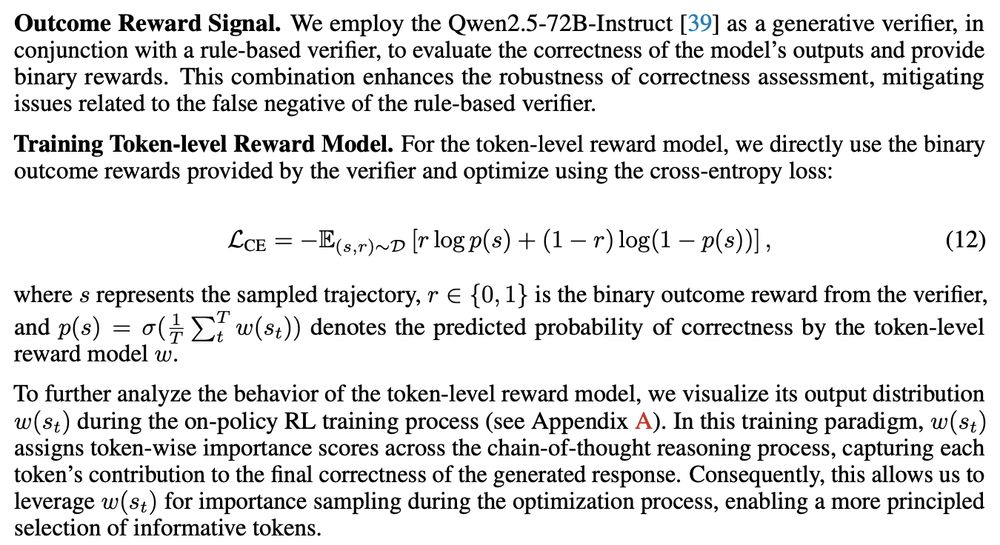

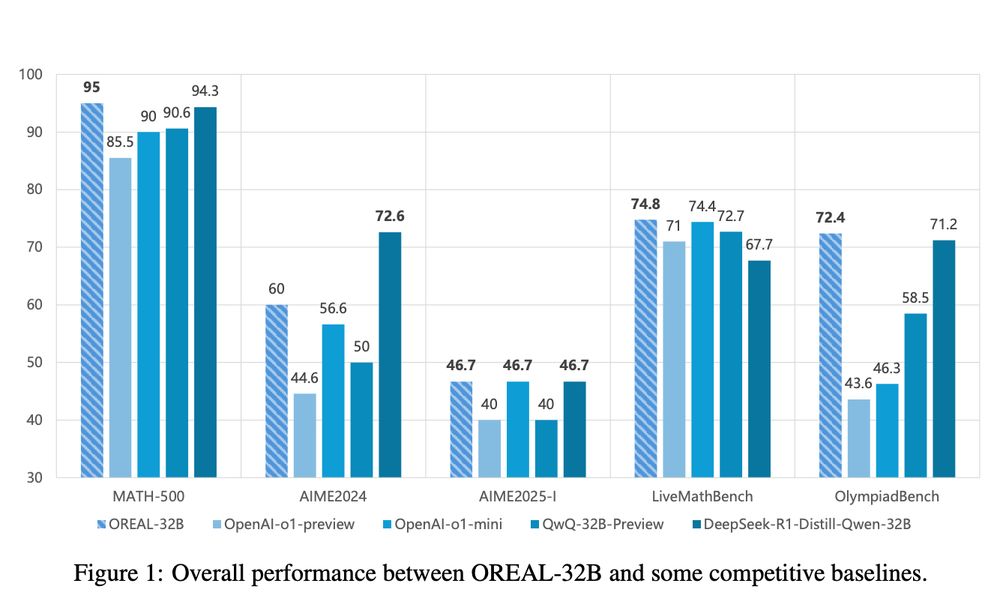

Exploring the Limit of Outcome Reward for Learning Mathematical Reasoning

Lyu et al.

arxiv.org/abs/2502.06781

There May Not be Aha Moment in R1-Zero-like Training — A Pilot Study

#Papers

Regardless, it is a great plot.

arxiv.org/abs/2501.18585

Only 50% of papers have discussion; let’s bring this number up!

50% of papers have discussion - let’s bring this number up!