Check it out: yiqingxu.org/packages/fect/

Special thanks to Ziyi, Rivka, and Tianzhu for their incredible work and dedication. More features are on the way!

Comments and suggestions are more than welcome!

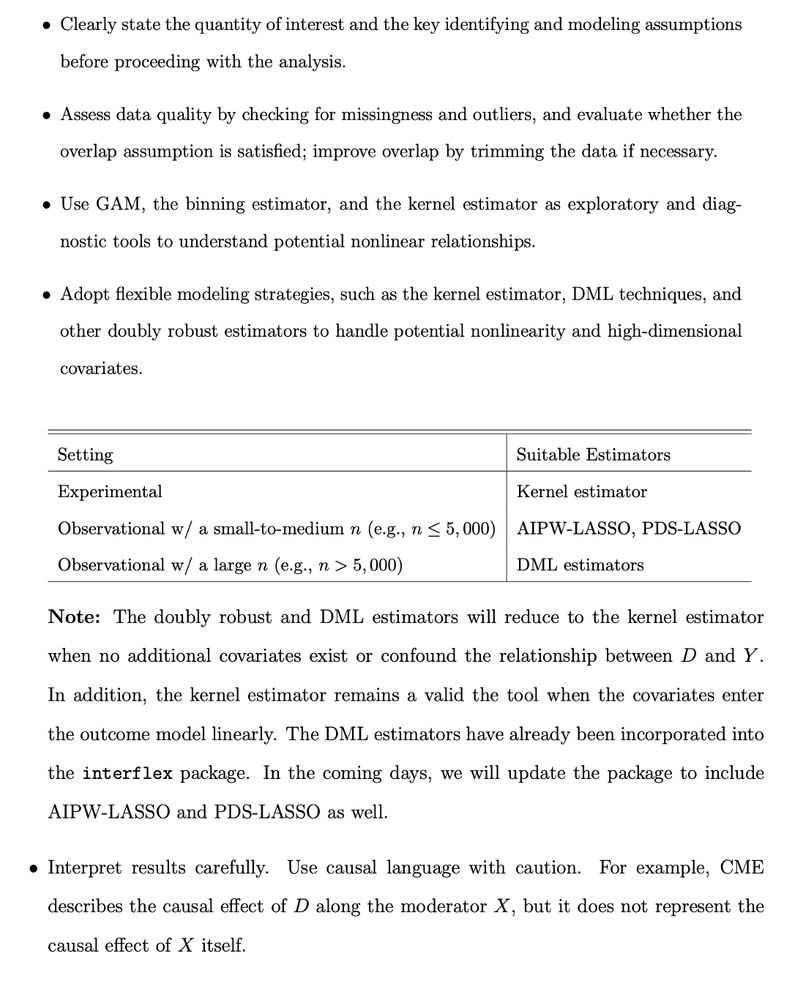

First, we adapt AIPW-Lasso & partialing-out Lasso (PO-Lasso), both w/ basis expansion & Lasso double selection.

We walk through signal construction step by step to aid intuition. For smoothing, we support both kernel and B-spline regressions.

We then review & improve the semiparametric kernel estimator by introducing fully moderated models, adaptive kernels, and uniform confidence intervals.
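
For intuition, here is a minimal sketch of the basic kernel idea: at each moderator value x0, fit a kernel-weighted local-linear regression and read off the coefficient on the treatment. It assumes a binary treatment, a single moderator, a Gaussian kernel, and a fixed bandwidth. The function name `kernel_marginal_effect` and the simulated data are hypothetical; this is not the package's implementation, and it leaves out covariates, full moderation, adaptive kernels, and uniform confidence intervals.

```python
import numpy as np

def kernel_marginal_effect(y, d, x, x0, bandwidth):
    """Local-linear estimate of the effect of d on y at moderator value x0."""
    u = (x - x0) / bandwidth
    w = np.exp(-0.5 * u**2)  # Gaussian kernel weights centered at x0
    dx = x - x0
    # Columns: intercept, treatment, centered moderator, interaction
    design = np.column_stack([np.ones_like(x), d, dx, d * dx])
    sw = np.sqrt(w)  # weighted least squares via sqrt-weight rescaling
    coef, *_ = np.linalg.lstsq(design * sw[:, None], y * sw, rcond=None)
    return coef[1]  # coefficient on d = estimated effect at x0

# Simulated data (hypothetical): the true effect of d at moderator x is 1 + 2x
rng = np.random.default_rng(0)
n = 2000
x = rng.uniform(-1, 1, n)
d = rng.integers(0, 2, n).astype(float)
y = 0.5 * x + d * (1 + 2 * x) + rng.normal(0, 0.1, n)

grid = np.array([-0.5, 0.0, 0.5])
estimates = np.array([kernel_marginal_effect(y, d, x, x0, 0.3) for x0 in grid])
```

With this setup, `estimates` should track `1 + 2 * grid` closely, since a local-linear fit has no smoothing bias when the true effect is linear in the moderator.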

Scholars are often interested in how treatment effects vary with a moderating variable (example below). We hope this Element will serve as a useful reference for such tasks down the road.

w/ Jens Hainmueller, Jiehan Liu, Ziyi Liu & Jonathan Mummolo

We provide software support for various estimation strategies: yiqingxu.org/packages/int...
