Check it out: yiqingxu.org/packages/fect/

Special thanks to Ziyi, Rivka, and Tianzhu for their incredible work and dedication. More features are on the way!

polmeth.org/statistical-...

To celebrate, we've just released fect v2.0.5 on CRAN & GitHub 🎉

Comments and suggestions are more than welcome!

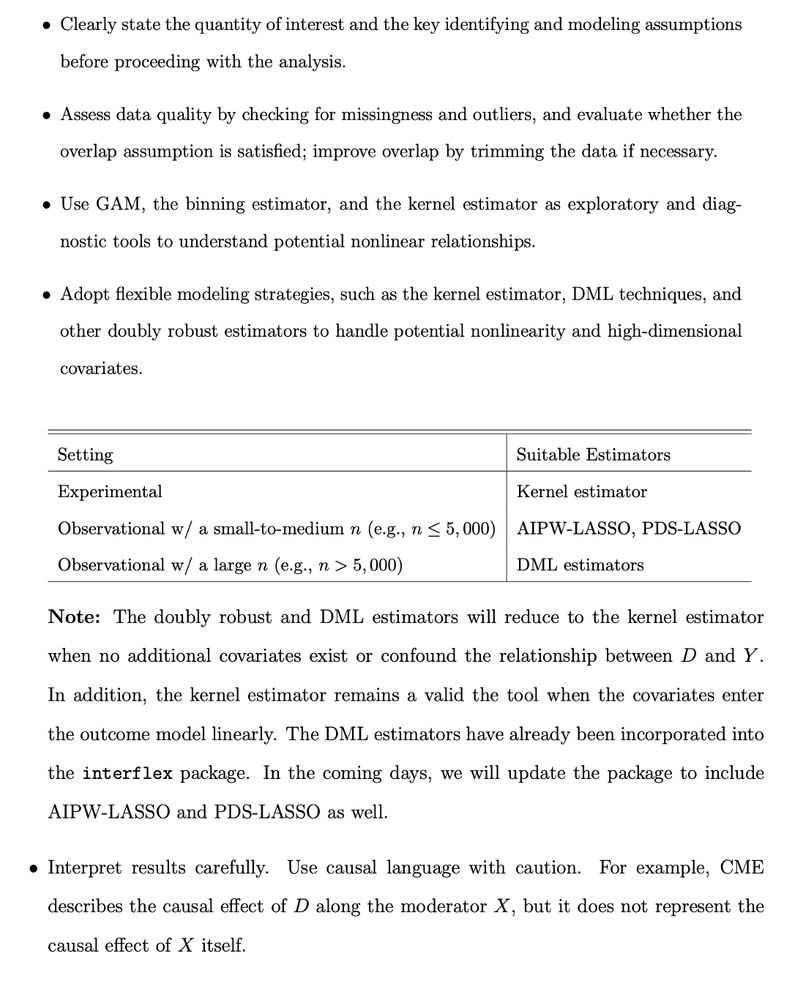

First, we adapt AIPW-Lasso & Partialing-Out Lasso, both w/ basis expansion & Lasso double selection.

We walk through signal construction step-by-step to aid intuition. For smoothing, we support both kernel and B-spline regressions.

We then review & improve the semiparametric kernel estimator. The improvements include fully moderated models, adaptive kernels, and uniform confidence intervals.
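
For intuition, here is a minimal sketch of a kernel-weighted local linear regression that estimates the effect of a binary treatment at chosen moderator values. It is not the package's implementation: the function name `kernel_cme`, the Gaussian kernel, the fixed bandwidth `h`, and the simulated data are all assumptions made for illustration, and it leaves out the fully moderated model, adaptive kernels, and uniform confidence intervals mentioned above.

```python
import numpy as np

def kernel_cme(y, d, x, x0, h):
    """Local linear estimate of the effect of d on y at moderator value x0.

    Hypothetical helper for illustration only (not a package API).
    """
    u = (x - x0) / h
    w = np.exp(-0.5 * u**2)  # Gaussian kernel weights centered at x0
    # Local design: intercept, treatment, centered moderator, interaction
    Z = np.column_stack([np.ones_like(x), d, x - x0, d * (x - x0)])
    sw = np.sqrt(w)  # weighted least squares via sqrt-weight rescaling
    coef, *_ = np.linalg.lstsq(Z * sw[:, None], y * sw, rcond=None)
    return coef[1]  # coefficient on d = estimated effect at x0

# Simulated data (assumption): the true effect of d is 1 + x^2
rng = np.random.default_rng(0)
n = 5000
x = rng.uniform(-2, 2, n)
d = rng.integers(0, 2, n).astype(float)
y = 1.0 + (1.0 + x**2) * d + 0.5 * x + rng.normal(0, 0.5, n)

grid = np.array([-1.0, 0.0, 1.0])
est = np.array([kernel_cme(y, d, x, g, h=0.3) for g in grid])
truth = 1.0 + grid**2
```

Comparing `est` with `truth` shows the estimator tracking a nonlinear effect that a single linear interaction term would miss. In practice the bandwidth would be chosen by cross-validation rather than fixed by hand.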

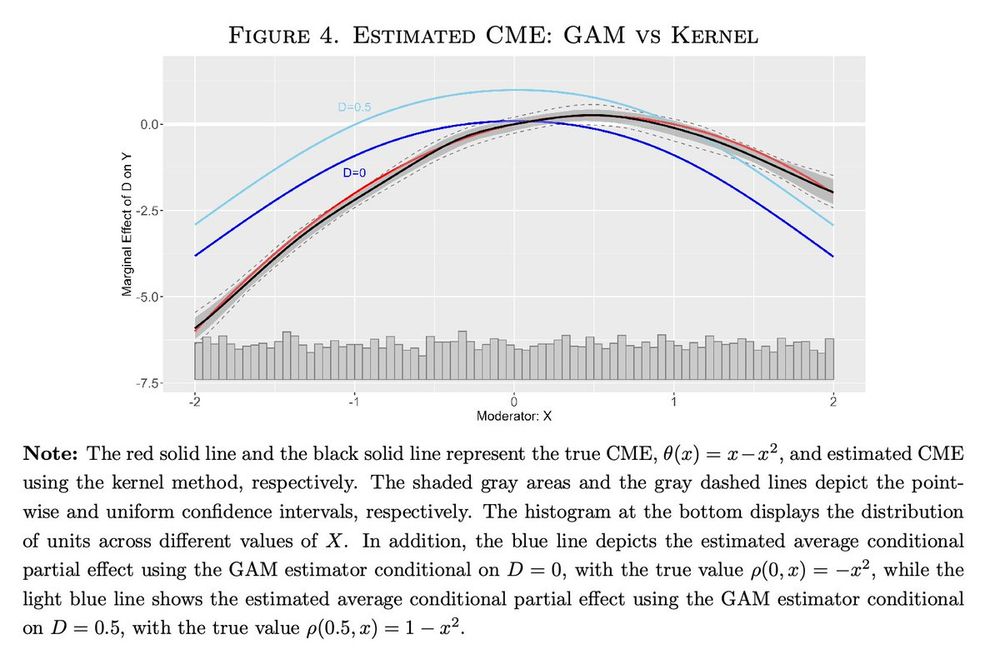

Scholars are often interested in how treatment effects vary with a moderating variable (example below).

We hope this will serve as a useful reference for this common task down the road.

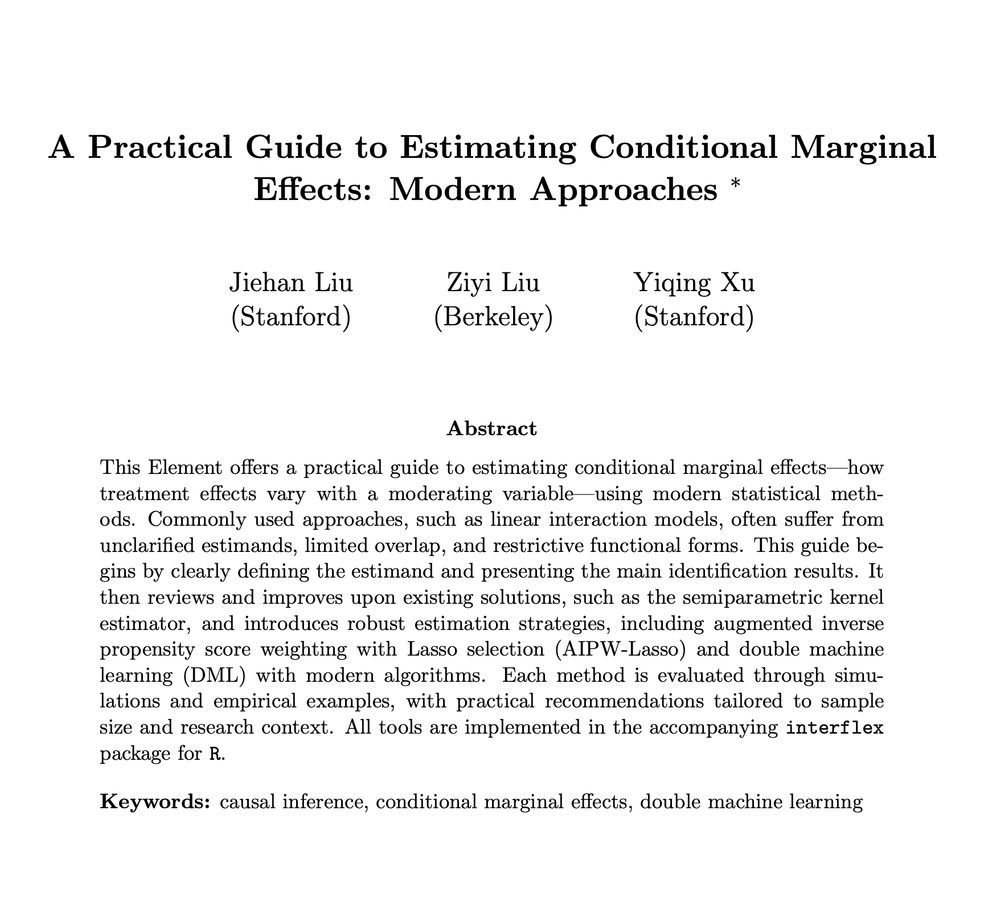

w/ two amazing grad students, Jiehan Liu & Ziyi Liu 🧵

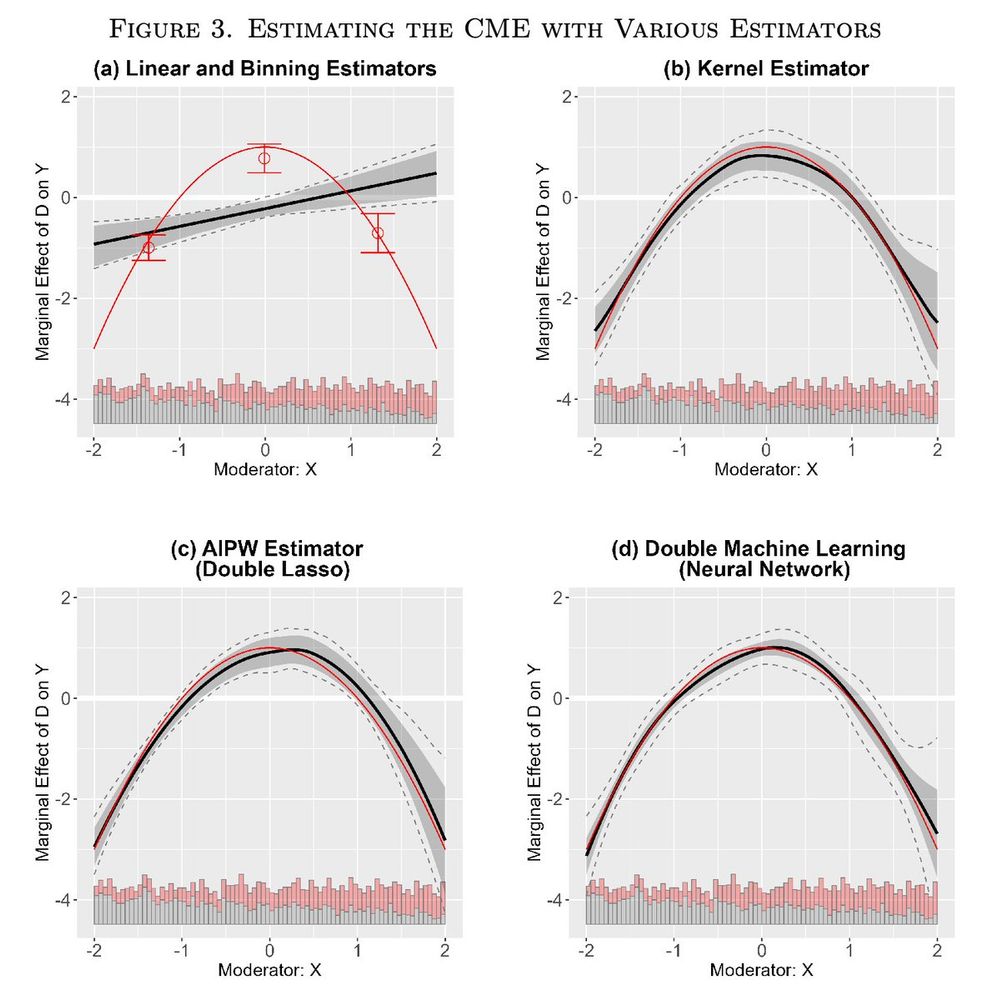

First, we adapt AIPW-Lasso & partialing-out Lasso (PO-Lasso), both w/ basis expansion & Lasso double selection.

We walk through signal construction step by step to aid intuition. For smoothing, we support both kernel and B-spline regressions.

We then review & improve the semiparametric kernel estimator by introducing fully moderated models, adaptive kernels, and uniform confidence intervals.

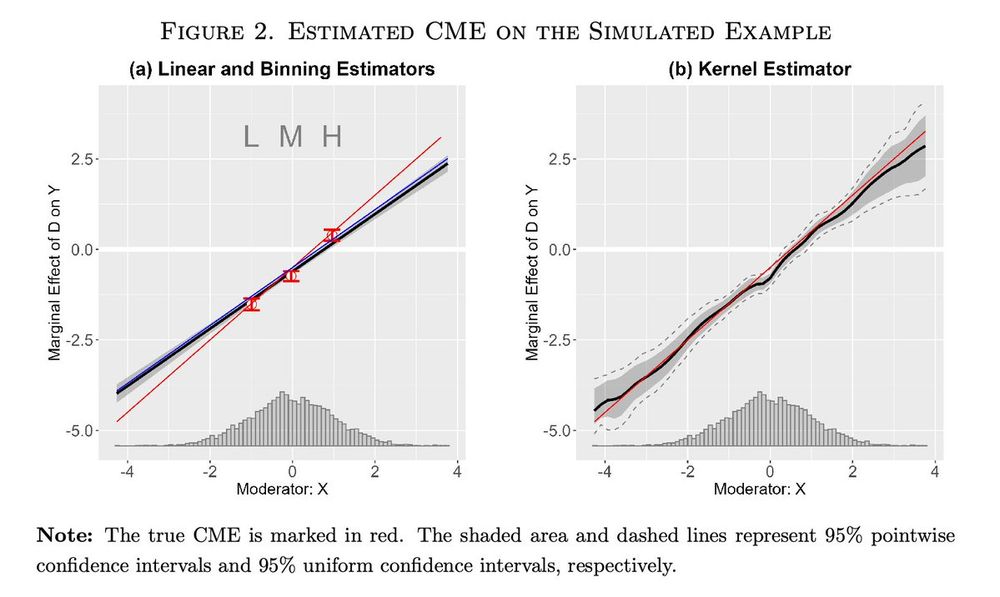

Scholars are often interested in how treatment effects vary with a moderating variable (example below). We hope this Element will serve as a useful reference for such tasks down the road.

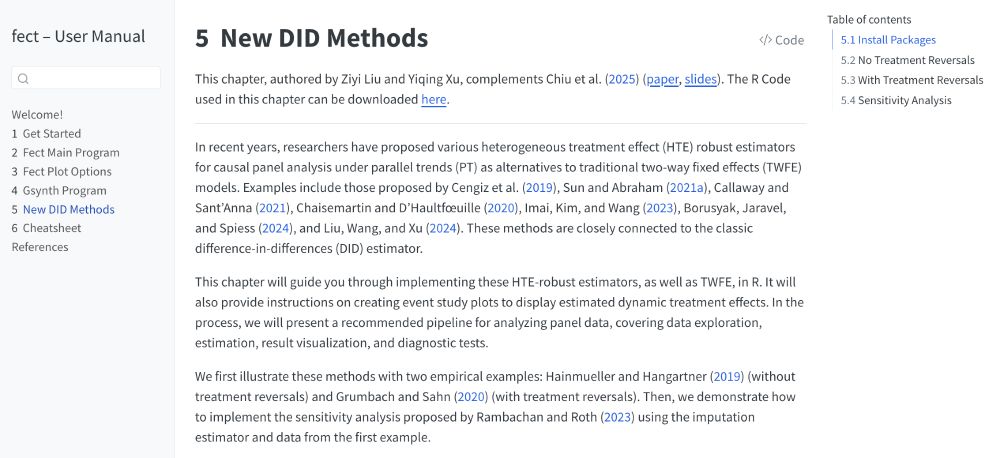

yiqingxu.org/packages/fec...

Hope you no longer need to spend months figuring out what these estimators are and how to use them.

We provide software support for various estimation strategies: yiqingxu.org/packages/int...

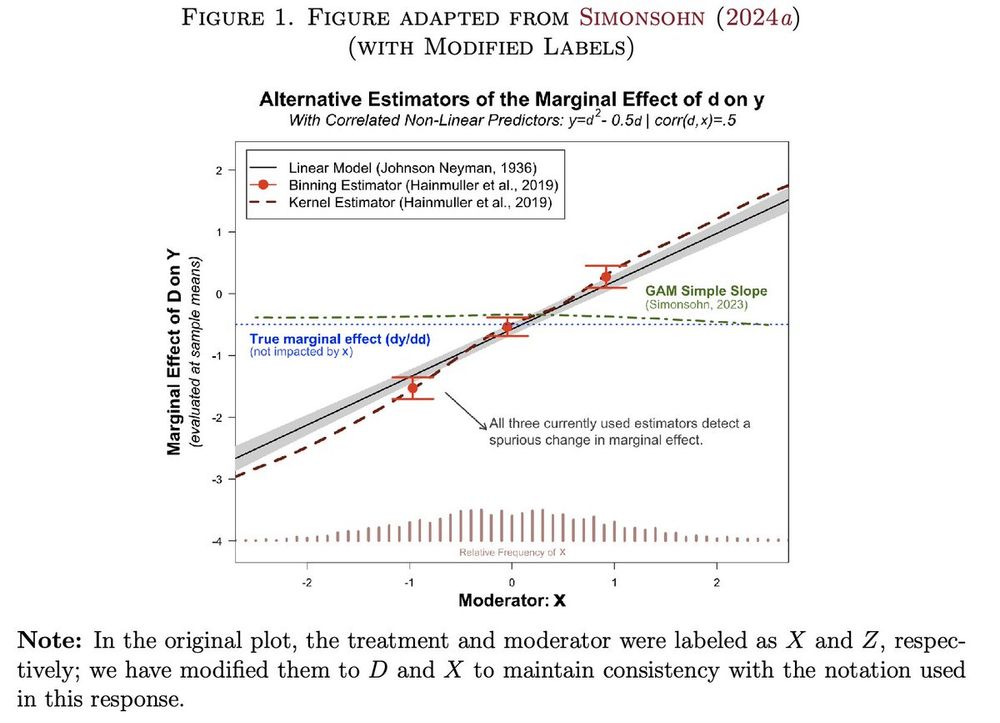

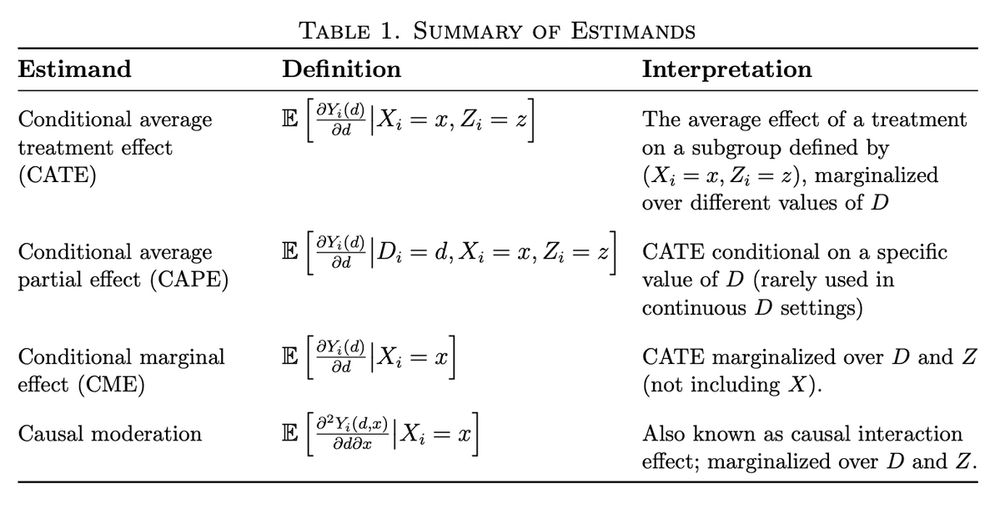

Most applied research focuses on estimating the CME, whereas the critique centers on CAPE, an estimand rarely used in applied work.

1/ Recently, Professor Uri Simonsohn critiqued Hainmueller, Mummolo & Xu (2019), arguing that the proposed methods fail to recover the conditional marginal effect (CME): datacolada.org/121

We appreciate the critique and offer this response: arxiv.org/pdf/2502.05717 🧵
