Sharing our new paper @iclr_conf led by Yedi Zhang with Peter Latham

arxiv.org/abs/2512.20607

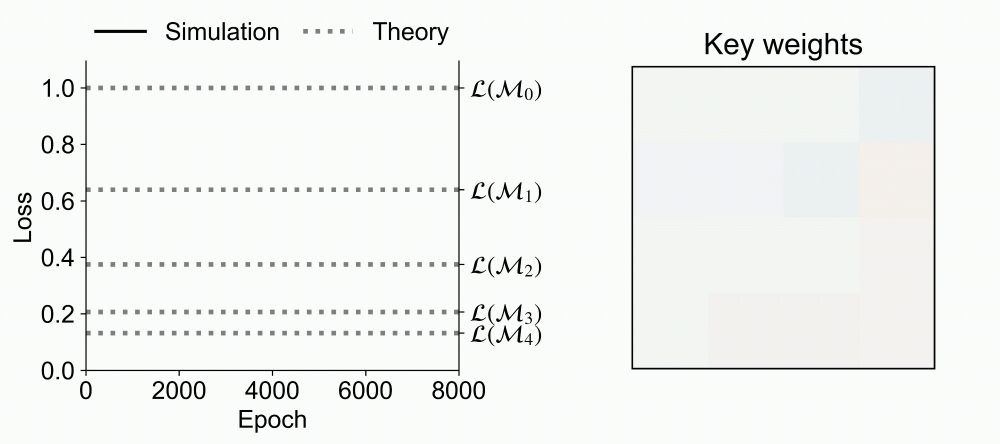

Sharing our new Spotlight paper @icmlconf.bsky.social: Training Dynamics of In-Context Learning in Linear Attention

arxiv.org/abs/2501.16265

Led by Yedi Zhang with @aaditya6284.bsky.social and Peter Latham
