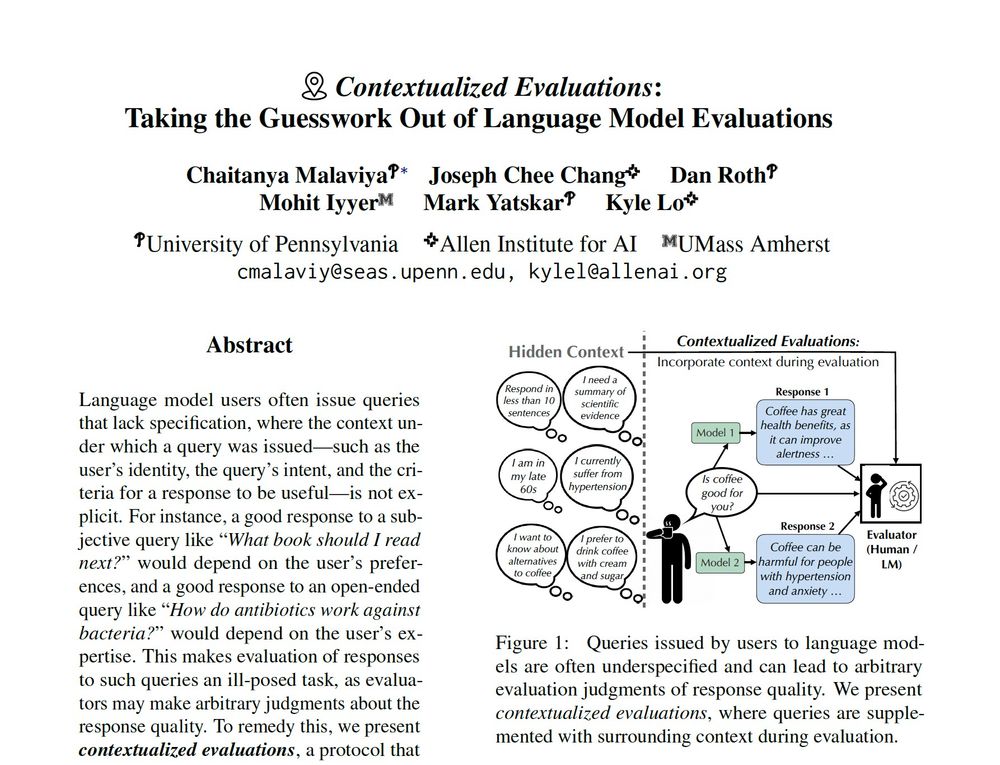

Benchmarks like Chatbot Arena contain underspecified queries, which can lead to arbitrary eval judgments. What happens if we provide evaluators with context (e.g., who's the user, what's their intent) when judging LM outputs? 🧵↓

Submit to the LM4Sci Workshop @ COLM 2025 in Montreal 🇨🇦

🧠 Large Language Modeling for Scientific Discovery (LM4Sci)

📅 New Deadline: June 30

📢 Notification: July 24

📍 Workshop: Oct 10, 2025

📝 Non-archival short (2–4p) & full (up to 8p) papers welcome!

Excited to announce the Large Language Modeling for Scientific Discovery (LM4Sci) workshop at COLM 2025 in Montreal, Canada!

Submission Deadline: June 23

Notification: July 24

Workshop: October 10, 2025

Our new paper investigates these and other idiosyncratic biases in preference models, and presents a simple post-training recipe to mitigate them! Thread below 🧵↓
