chaitanyamalaviya.github.io

Our new paper investigates these and other idiosyncratic biases in preference models, and presents a simple post-training recipe to mitigate them! Thread below 🧵↓

Jul 30, 11:00-12:30 at Hall 4X, board 424.

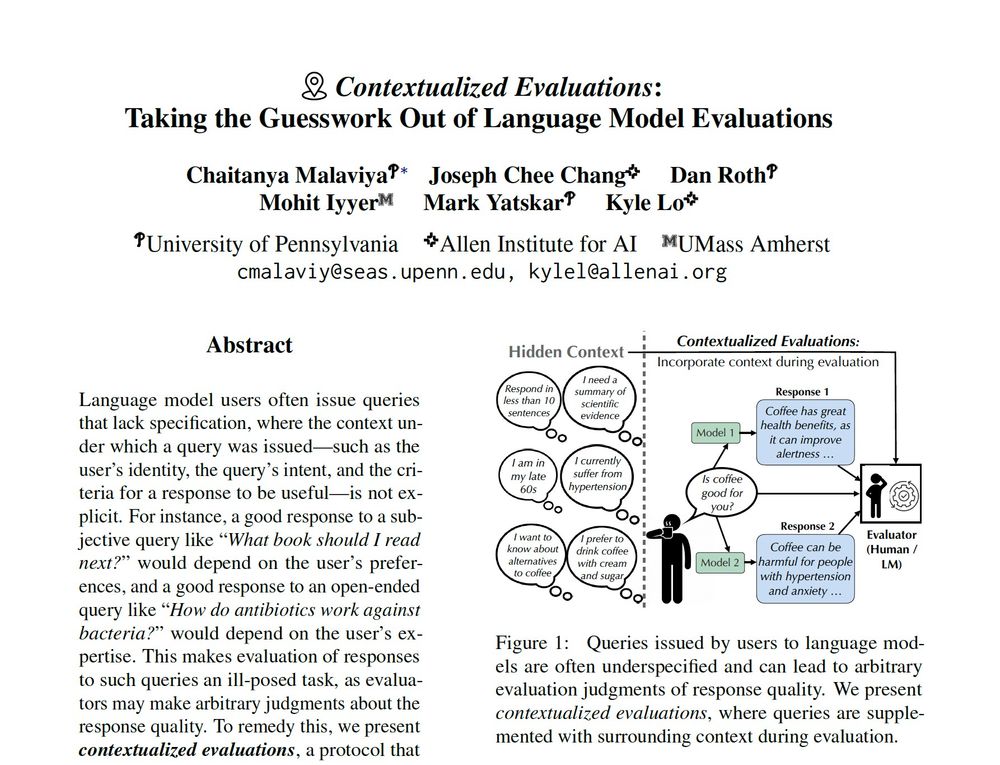

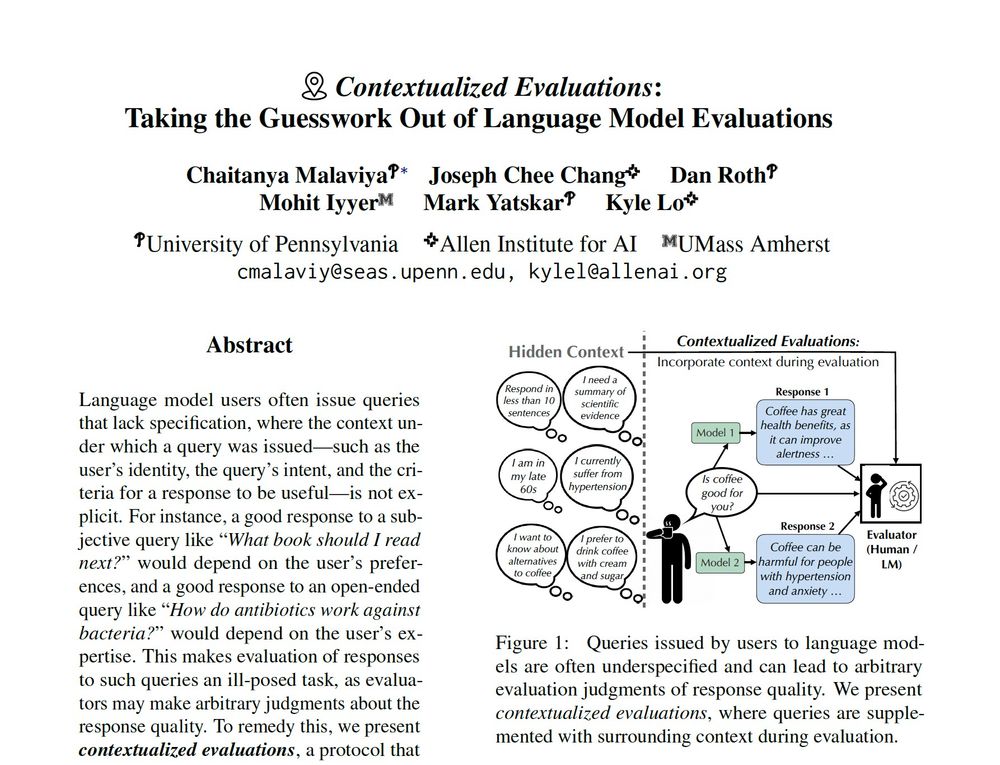

Benchmarks like Chatbot Arena contain underspecified queries, which can lead to arbitrary eval judgments. What happens if we provide evaluators with context (e.g., who's the user, what's their intent) when judging LM outputs? 🧵↓

🐡data contains cases where the "bad" response is just as good as the chosen one

🐟model rankings can feel off (claude ranks lower than expected)

led by @cmalaviya.bsky.social, we study underspecified queries & their detrimental effect on model evals; accepted to TACL 2025

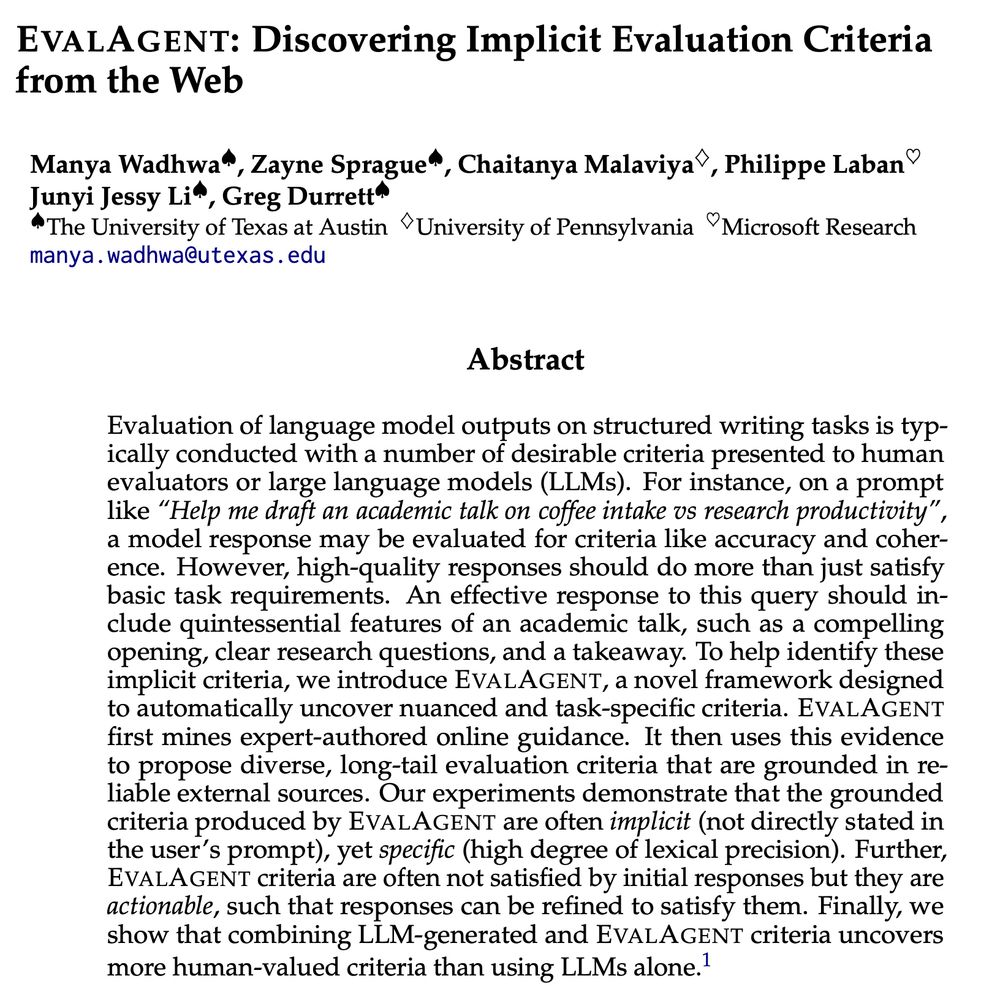

We introduce EvalAgent, a framework that identifies nuanced and diverse criteria 📋✍️.

EvalAgent identifies 👩🏫🎓 expert advice on the web that implicitly addresses the user’s prompt 🧵👇
