supervised by Josef Sivic and Patrick Pérez

https://vobecant.github.io/

Check out DIP, an effective post-training strategy by @ssirko.bsky.social @spyrosgidaris.bsky.social

@vobeckya.bsky.social @abursuc.bsky.social and Nicolas Thome 👇

#iccv2025

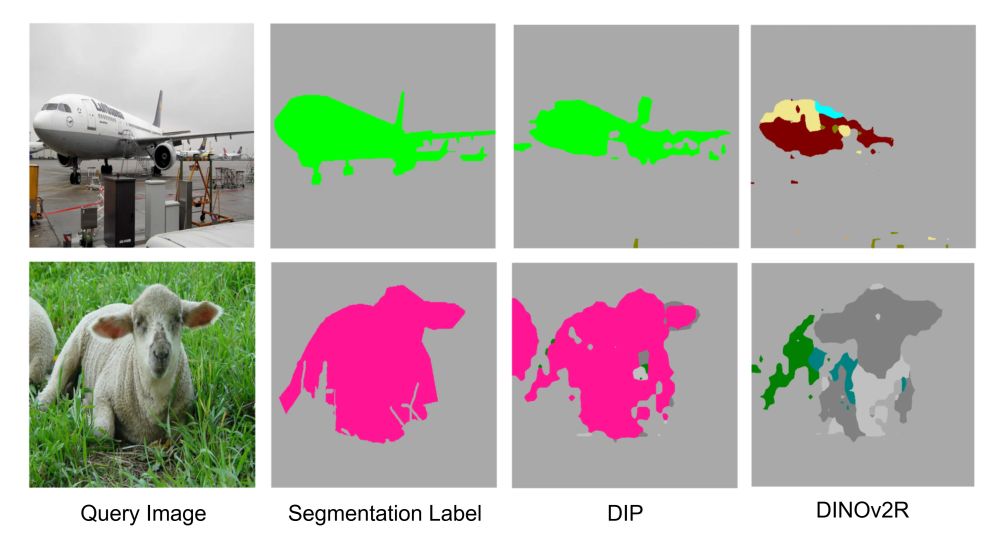

Introducing DIP: unsupervised post-training that enhances dense features in pretrained ViTs for dense in-context scene understanding

Below: Low-shot in-context semantic segmentation examples. DIP features outperform DINOv2!

by S. Gidaris, A. Bursuc, O. Simeoni, A. Vobecky, N. Komodakis, M. Cord, P. Pérez

Unify mask-and-predict & contrastive SSL objectives -> better performance w/ 3x faster training