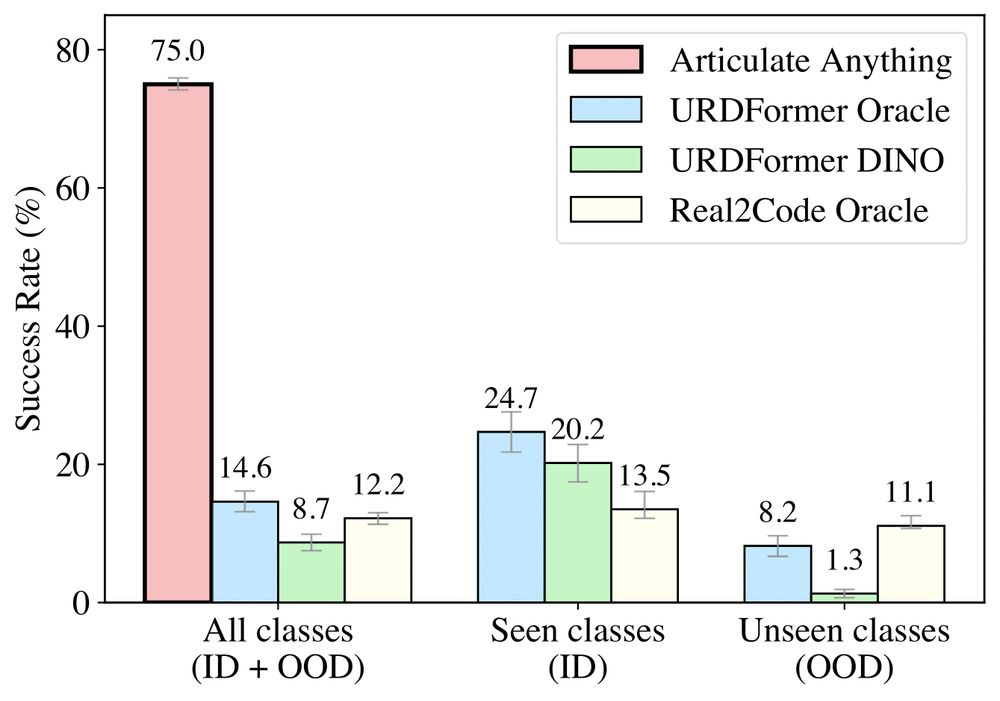

Articulate-Anything outperforms the baselines both quantitatively and qualitatively. Three things make this possible: (1) leveraging richer input modalities, (2) modeling articulation as high-level program synthesis, and (3) using a closed-loop actor-critic system.

Articulate-Anything breaks the problem into three steps: (1) Mesh retrieval, (2) Link placement, which spatially arranges the parts together, and (3) Joint prediction, which determines the kinematic movement between parts. Take a look at a video explaining this pipeline!

Creating interactable 3D models of the world is hard. An artist has to model the physical appearance of the object to create a mesh. Then a roboticist needs to manually annotate the kinematic joints in URDF to give the object movement.
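For a sense of what that manual annotation involves, here is a minimal sketch of a single hinge joint written out in URDF using Python's standard library. The object, link names, and numeric values (`cabinet`, `body`, `door`, the hinge origin and limits) are made up for illustration and are not taken from Articulate-Anything.

```python
import xml.etree.ElementTree as ET


def make_hinged_door_urdf(lower=0.0, upper=1.57):
    """Build a toy URDF with two links connected by one revolute joint.

    All names and numbers here are illustrative assumptions.
    """
    robot = ET.Element("robot", name="cabinet")

    # Each rigid part of the object is a link (meshes omitted for brevity).
    ET.SubElement(robot, "link", name="body")
    ET.SubElement(robot, "link", name="door")

    # The joint describes how the child link moves relative to the parent.
    joint = ET.SubElement(robot, "joint", name="door_hinge", type="revolute")
    ET.SubElement(joint, "parent", link="body")
    ET.SubElement(joint, "child", link="door")
    # Where the hinge sits in the parent frame (meters, radians).
    ET.SubElement(joint, "origin", xyz="0.3 0 0", rpy="0 0 0")
    # Rotation axis: here, the vertical z axis.
    ET.SubElement(joint, "axis", xyz="0 0 1")
    # Revolute joints need angle limits plus effort and velocity bounds.
    ET.SubElement(
        joint, "limit",
        lower=str(lower), upper=str(upper), effort="10", velocity="1",
    )
    return robot


urdf_text = ET.tostring(make_hinged_door_urdf(), encoding="unicode")
print(urdf_text)
```

Even for this two-part toy object, someone has to pick the hinge position, axis, and limits by hand, and real objects have many more parts.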

But what if we could automate all these steps?

Excited to share our work, Articulate-Anything 🐵, exploring how VLMs can bridge the gap between the physical and digital worlds.

Website: articulate-anything.github.io
