https://veds12.github.io/

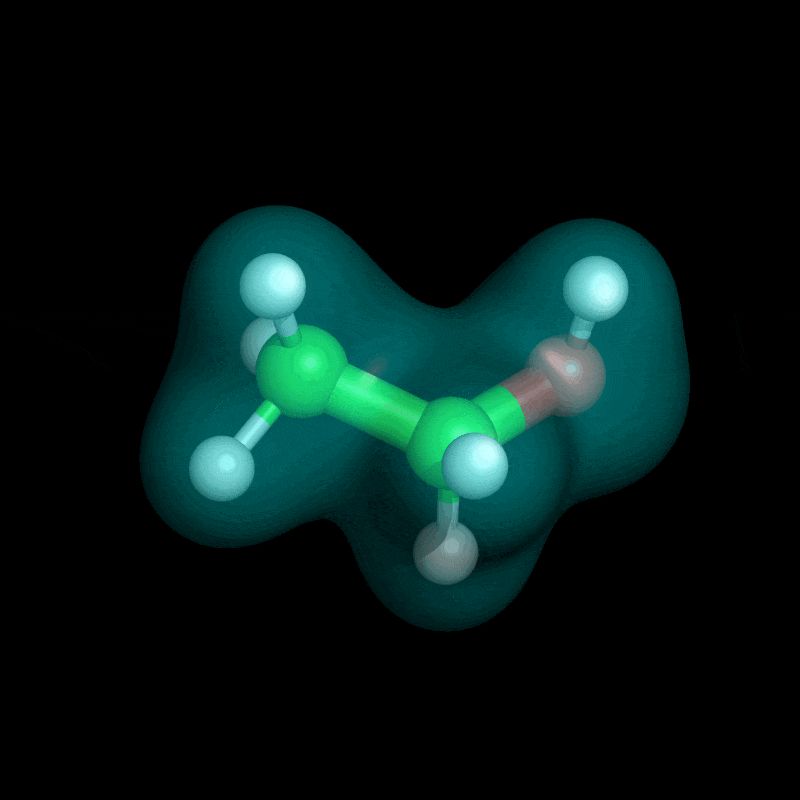

Introducing Self-Refining Training for Amortized DFT: a variational method that predicts ground-state solutions across geometries and generates its own training data!

📜 arxiv.org/abs/2506.01225

💻 github.com/majhas/self-...

How do we build neural decoders that are:

⚡️ fast enough for real-time use

🎯 accurate across diverse tasks

🌍 generalizable to new sessions, subjects, and even species?

We present POSSM, a hybrid SSM architecture that optimizes for all three of these axes!

🧵1/7

Location: West Meeting Room 118-120

Time: 11:00 AM - 12:30 PM; 4:00 PM - 5:00 PM

Come by if you want to chat about designing difficult evaluation benchmarks, follow-up work, and mathematical reasoning in LLMs!

"AI-Assisted Generation of Difficult Math Questions"

at the MATH-AI Workshop on Saturday 🚀!

Would love to chat if you are interested in topics related to LLM reasoning and systematic generalization!

arxiv.org/abs/2407.21009