📄 Paper: arxiv.org/abs/2505.01456

💻 Code and Dataset: github.com/Vaidehi99/Un...

huggingface.co/datasets/vai...

🤗 HuggingFace: huggingface.co/papers/2505....

🔥 Multimodal attacks are the most effective

🛡️ Our strongest defense is deleting info from hidden states

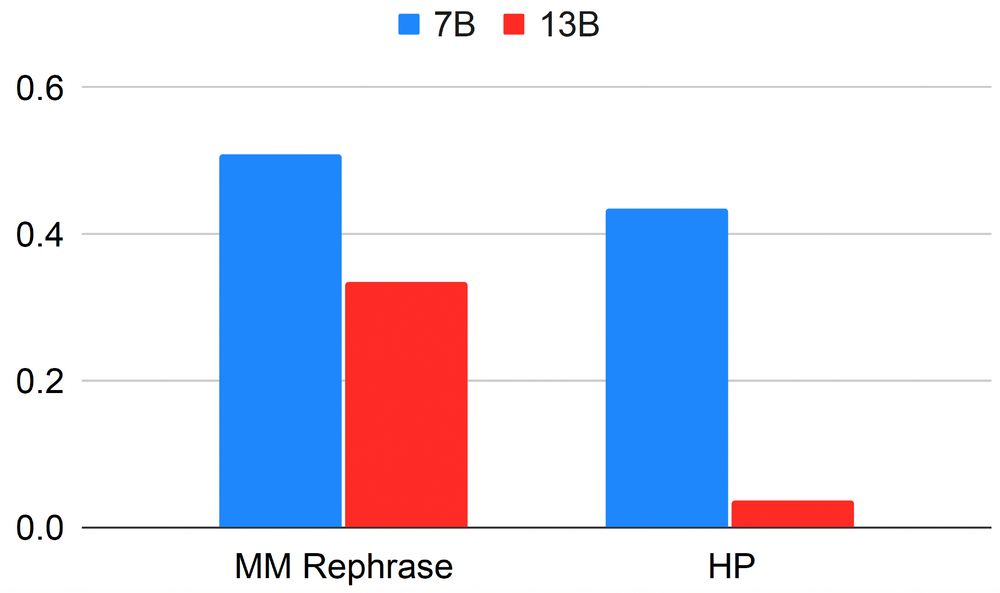

📉 Larger models are more robust than smaller ones to extraction attacks after editing

🎯 UnLOK-VQA enables targeted evaluations of unlearning defenses

Multimodal data opens up new attack vectors.

We benchmark 6 unlearning defenses against 7 attack strategies, including:

✅White-box attacks

✅Black-box paraphrased multimodal prompts

✅Generalization Evaluation

✔️Rephrased questions

✔️Rephrased images

✅Specificity Evaluation

✔️Neighboring questions (same image, new question)

✔️Neighboring images (same concept, different image)
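A minimal sketch of how the two evaluation groups above could be scored. Everything here is an assumption for illustration: the `Probe` record, the group names, and the substring-match scoring rule are hypothetical and are not taken from the UnLOK-VQA code, whose actual metrics may differ.

```python
from dataclasses import dataclass

# Hypothetical group labels mirroring the list above.
FORGET_GROUPS = {"rephrased_question", "rephrased_image"}   # generalization probes
RETAIN_GROUPS = {"neighbor_question", "neighbor_image"}     # specificity probes


@dataclass
class Probe:
    group: str       # one of the labels above
    output: str      # model answer after unlearning
    reference: str   # answer the model gave (or should give) before unlearning


def contains_reference(probe: Probe) -> bool:
    # Assumed scoring rule: case-insensitive substring match.
    return probe.reference.lower() in probe.output.lower()


def hit_rate(probes: list[Probe]) -> float:
    if not probes:
        raise ValueError("need at least one probe per group")
    return sum(contains_reference(p) for p in probes) / len(probes)


def evaluate(probes: list[Probe]) -> dict[str, float]:
    forget = [p for p in probes if p.group in FORGET_GROUPS]
    retain = [p for p in probes if p.group in RETAIN_GROUPS]
    return {
        # Deleted answers should NOT resurface under rephrasing.
        "generalization": 1.0 - hit_rate(forget),
        # Neighboring facts should still be answered correctly.
        "specificity": hit_rate(retain),
    }


probes = [
    Probe("rephrased_question", "I don't know", "Paris"),
    Probe("rephrased_image", "It is Paris", "Paris"),
    Probe("neighbor_question", "red", "red"),
    Probe("neighbor_image", "a dog", "dog"),
]
results = evaluate(probes)
```

In this toy run, one of the two rephrased probes still leaks the deleted answer, so generalization is 0.5 while specificity stays at 1.0.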

UnLOK-VQA focuses on unlearning pretrained knowledge and builds on OK-VQA, a visual QA dataset. We extend it with an automated pipeline for question-answer generation and image generation:

✅Forget samples from OK-VQA

✅New samples at varying levels of proximity (easy, medium, hard)

📜 Legal compliance (e.g., GDPR, CCPA, the right to be forgotten)

🔐 Multimodal Privacy (e.g., faces, locations, license plates)

📷 Trust in real-world image-grounded systems

Existing unlearning benchmarks focus only on text.

But multimodal LLMs are trained on web-scale data—images + captions—making them highly vulnerable to leakage of sensitive or unwanted content.

Unlearning must hold across modalities, not just in language.

❓ How effectively can we erase multimodal knowledge?

❓ How should we measure forgetting in multimodal settings?

✅We benchmark 6 unlearning defenses against 7 white-box and black-box attack strategies

We’re looking for program committee members!

📝 Submit your Expression of Interest here: forms.gle/ZPEHeymJ4t5N...

#ICML2025

Mantas Mazeika, Yang Liu, @katherinelee.bsky.social, @mohitbansal.bsky.social, Bo Li and myself (@vaidehipatil.bsky.social) 🙂

We're lucky to have an incredible lineup of speakers and panelists covering diverse topics in our workshop:

Nicholas Carlini, Ling Liu, Shagufta Mehnaz, @peterbhase.bsky.social, Eleni Triantafillou, Sijia Liu, @afedercooper.bsky.social, Amy Cyphert

🔗 Full details & updates: mugenworkshop.github.io

📅 Key Dates:

📝 Submission Deadline: May 19

✅ Acceptance Notifications: June 9

🤝 Workshop Date: July 18 or 19

@esteng.bsky.social, and @mohitbansal.bsky.social for a great collaboration!

🚀 Check it out here:

📄 Paper: arxiv.org/abs/2502.15082

💻 Code: github.com/Vaidehi99/UP...

🤗 @huggingface page: huggingface.co/papers/2502....

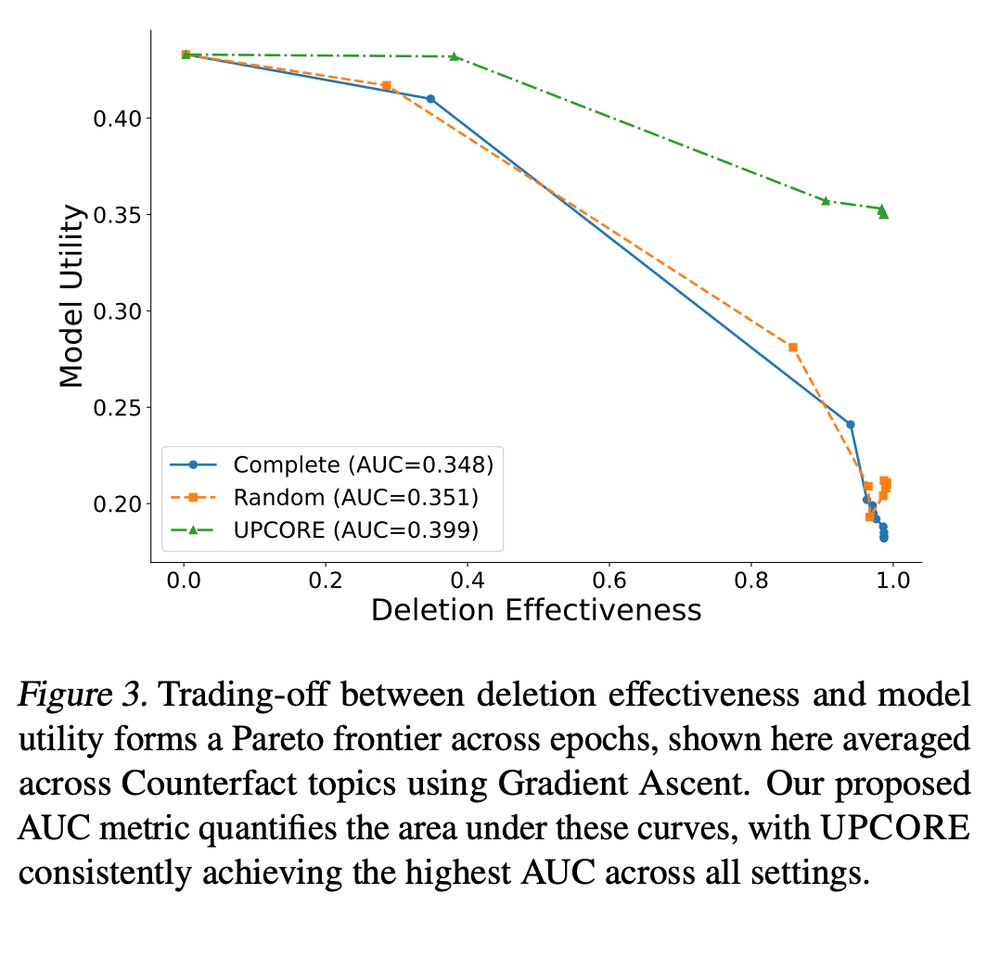

✔️ Less unintended degradation

✔️ Deletion transferred to pruned points

UPCORE provides a practical, method-agnostic approach that improves the reliability of unlearning techniques.

This provides a complete picture of the trade-off between forgetting and knowledge retention over the unlearning trajectory.

📉 Gradient Ascent

🚫 Refusal

🔄 Negative Preference Optimization (NPO)

We measure:

✔️ Deletion effectiveness – How well the target is removed

✔️ Unintended degradation – Impact on other abilities

✔️ Positive transfer – How well unlearning generalizes
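For readers unfamiliar with the three unlearning methods listed above, here is a rough per-example sketch of their training losses in their commonly published forms. It assumes you already have the sequence log-probability of the forget answer under the current model (`logp`) and under a frozen reference model (`ref_logp`). The function names and the default `beta` are illustrative assumptions, not the UPCORE implementation.

```python
import math


def softplus(z: float) -> float:
    # Numerically stable log(1 + exp(z)).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def gradient_ascent_loss(logp: float) -> float:
    # Gradient ascent on the usual NLL is the same as minimizing
    # log p(y | x) for the answer we want to forget.
    return logp


def refusal_loss(refusal_logp: float) -> float:
    # Standard NLL, but on a refusal string (e.g. "I don't know")
    # instead of the original answer.
    return -refusal_logp


def npo_loss(logp: float, ref_logp: float, beta: float = 0.1) -> float:
    # Negative Preference Optimization:
    #   (2 / beta) * log(1 + (pi_theta / pi_ref) ** beta)
    # written in log space for stability.
    return (2.0 / beta) * softplus(beta * (logp - ref_logp))
```

Unlike plain gradient ascent, whose loss is unbounded below, the NPO term flattens out as the model's probability of the forget answer drops far below the reference, which is the usual argument for why it degrades the model more gently.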
