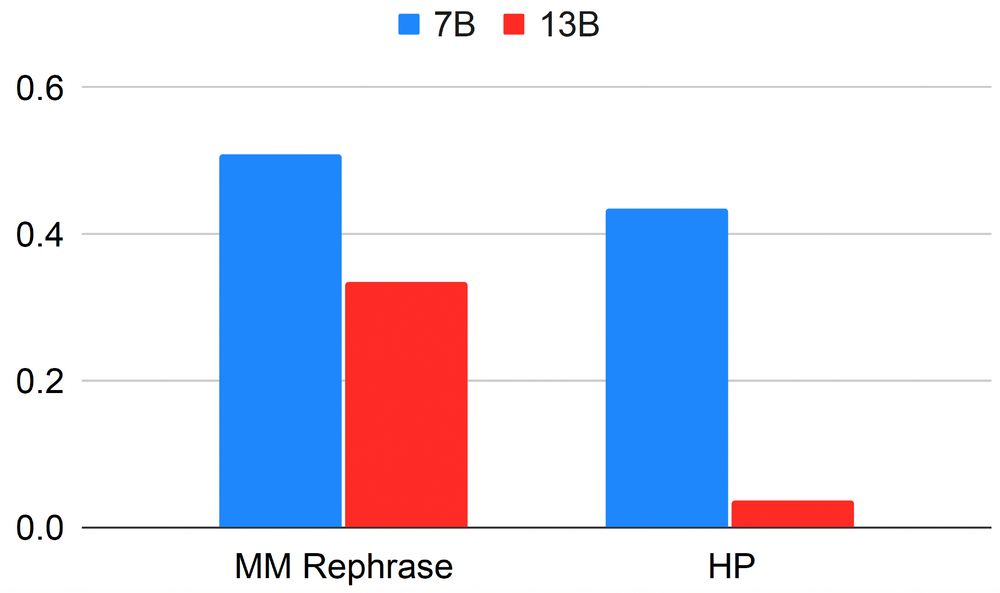

🔥 Multimodal attacks are the most effective

🛡️ Our strongest defense is deleting info from hidden states

📉 Larger models are more robust than smaller ones to extraction attacks after editing

🎯 UnLOK-VQA enables targeted evaluations of unlearning defenses

UnLOK-VQA focuses on unlearning pretrained knowledge and builds on OK-VQA, a visual QA dataset. We extend it with an automated pipeline for question-answer and image generation:

✅ Forget samples from OK-VQA

✅ New samples at varying levels of proximity (easy, medium, hard)

Existing unlearning benchmarks focus only on text.

But multimodal LLMs are trained on web-scale data—images + captions—making them highly vulnerable to leakage of sensitive or unwanted content.

Unlearning must hold across modalities, not just in language.

We present UnLOK-VQA, a benchmark to evaluate unlearning in vision-and-language models, where both images and text may encode sensitive or private information.

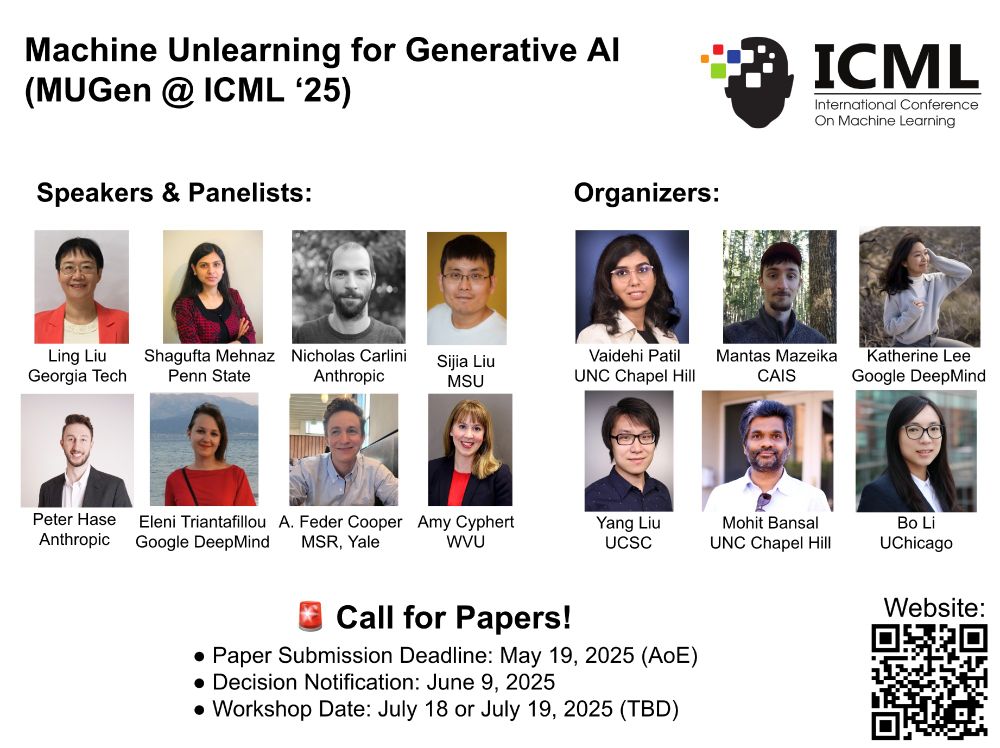

We’re thrilled to announce the #ICML2025 Workshop on Machine Unlearning for Generative AI (MUGen)!

⚡ Join us in Vancouver this July to dive into cutting-edge research on unlearning in generative AI with top speakers and panelists! ⚡

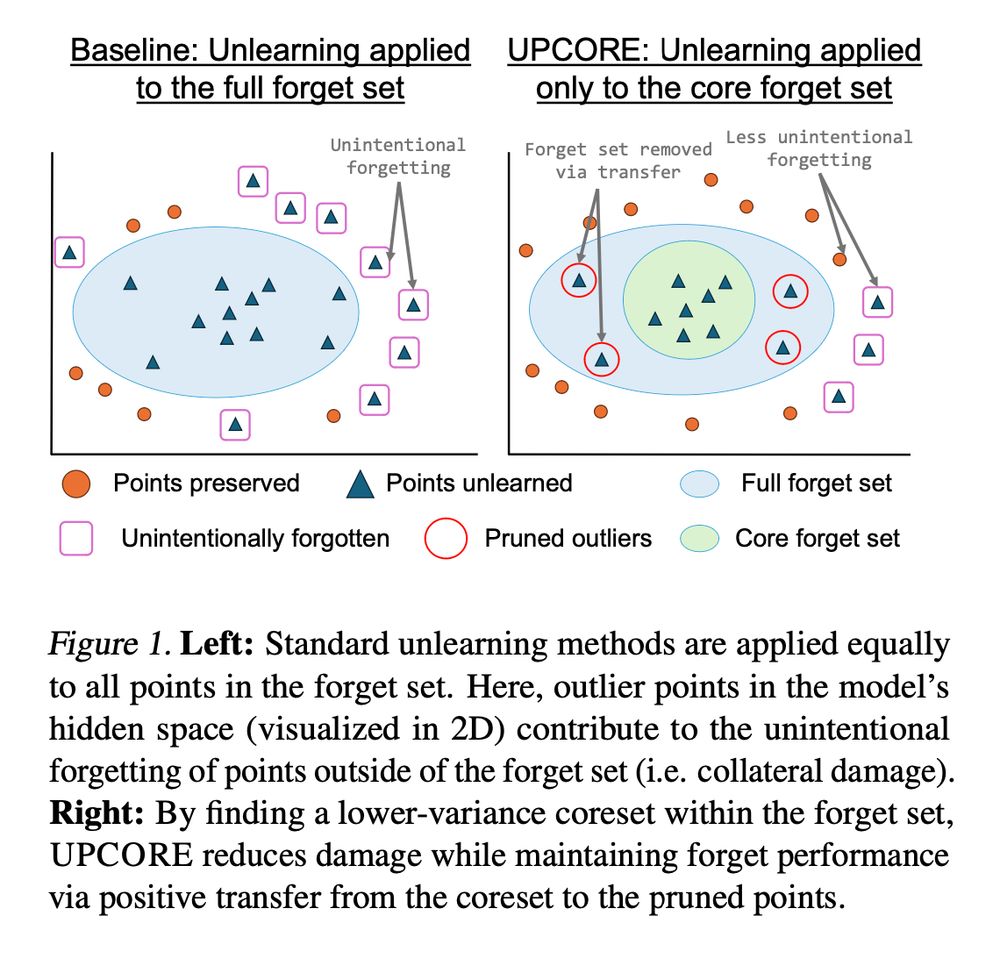

✔️ Less unintended degradation

✔️ Deletion transferred to pruned points

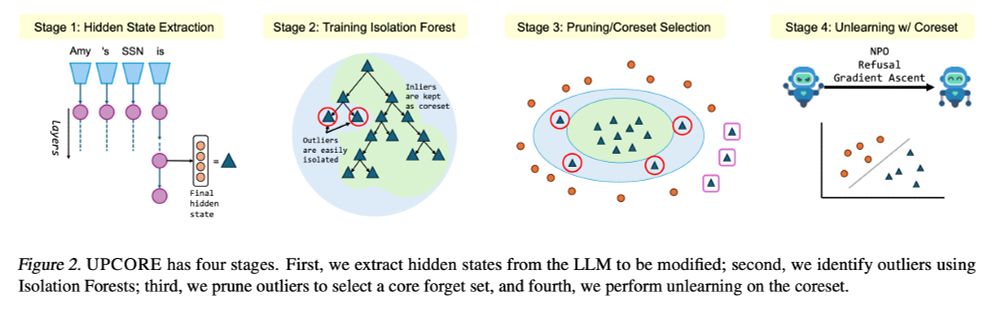

UPCORE provides a practical, method-agnostic approach that improves the reliability of unlearning techniques.

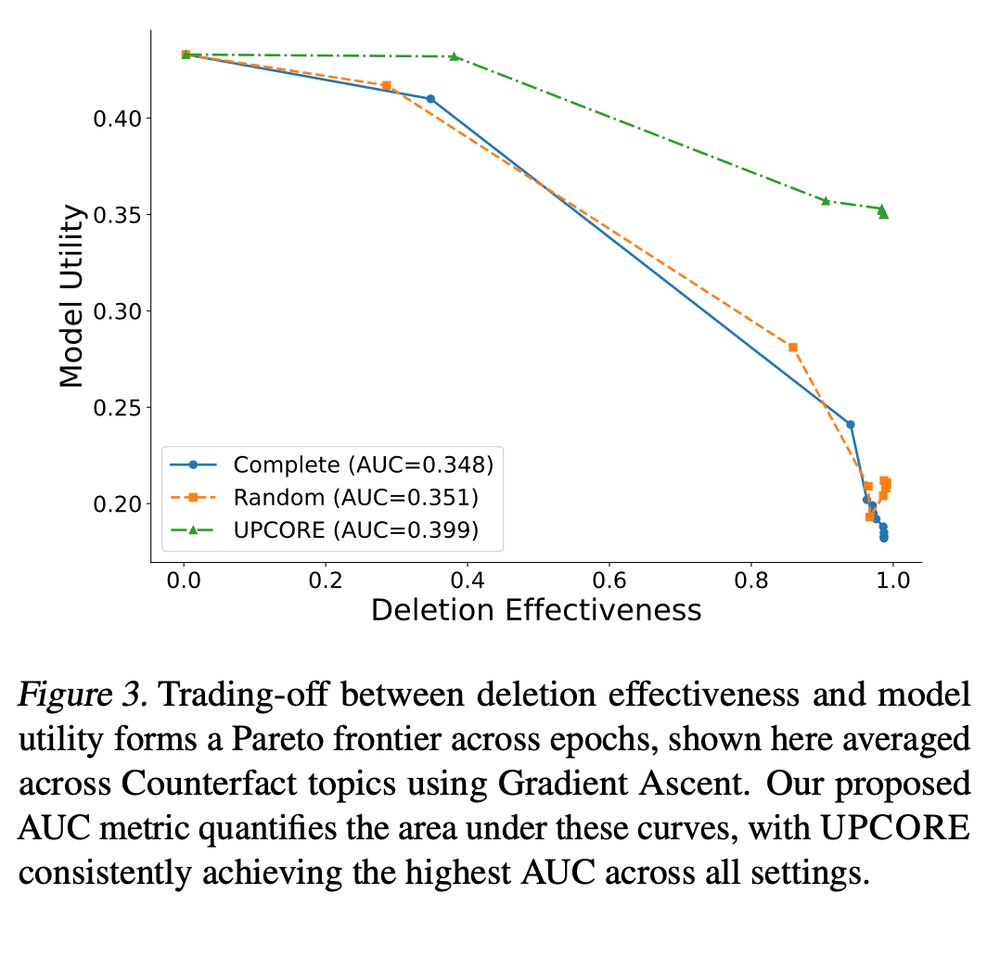

This provides a complete picture of the trade-off between forgetting and knowledge retention over the unlearning trajectory.

Thus, UPCORE reduces negative collateral effects while maintaining effective deletion.

✅ Minimizes unintended degradation

✅ Preserves model utility

✅ Compatible with multiple unlearning methods

UPCORE selects a coreset of forget data, leading to a better trade-off across 2 datasets and 3 unlearning methods.

🧵👇
