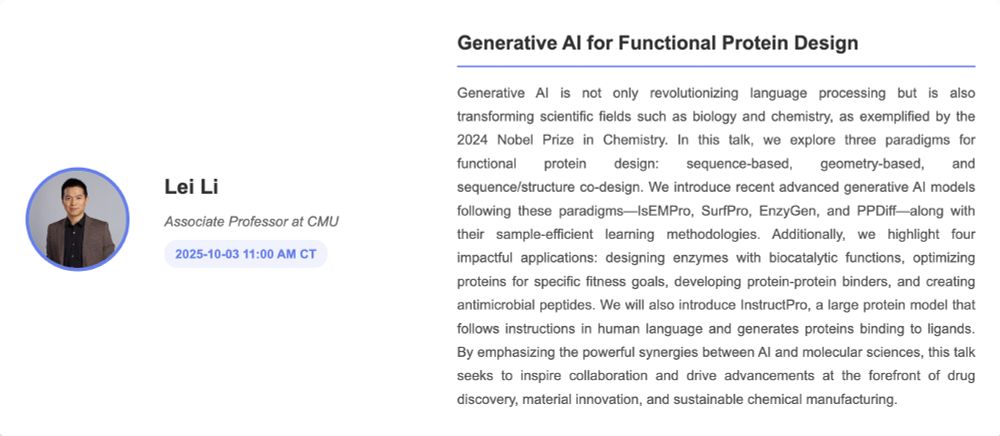

🧪 Generative AI for Functional Protein Design🤖

#artificialintelligence #scientificdiscovery

ai-scientific-discovery.github.io

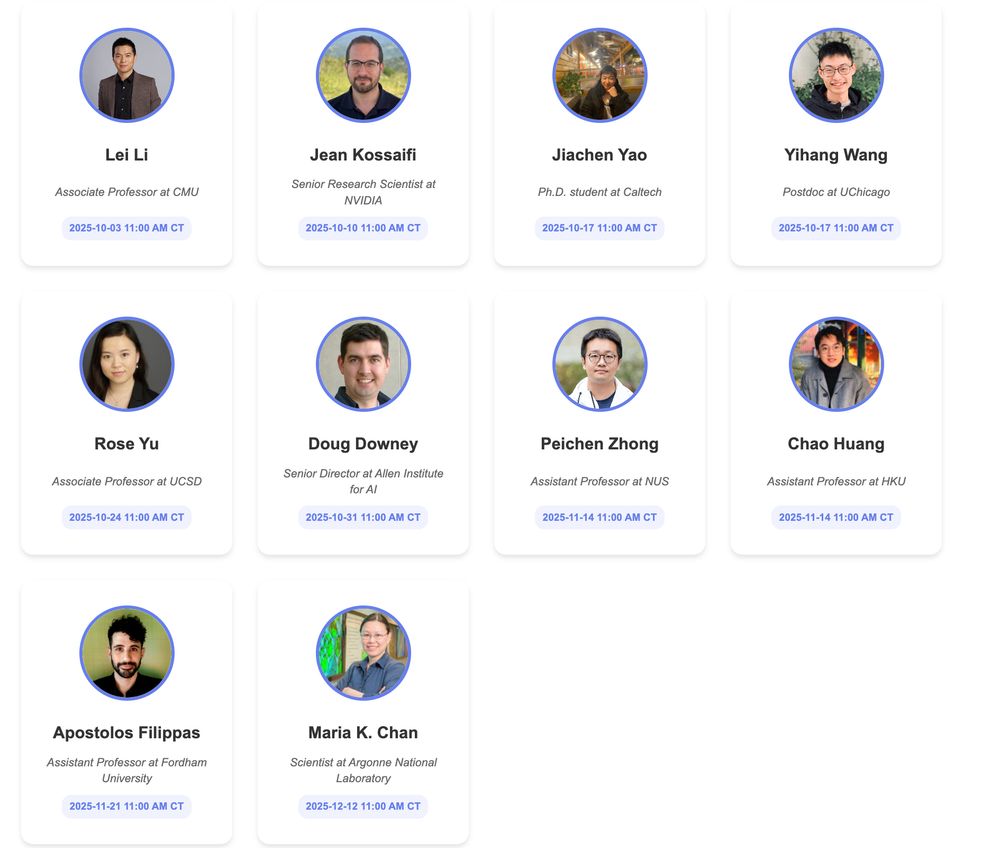

This series will dive into how AI is accelerating research, enabling breakthroughs, and shaping the future of research across disciplines.

ai-scientific-discovery.github.io

forms.gle/MFcdKYnckNno...

More in the 🧵! Please share! #MLSky 🧠

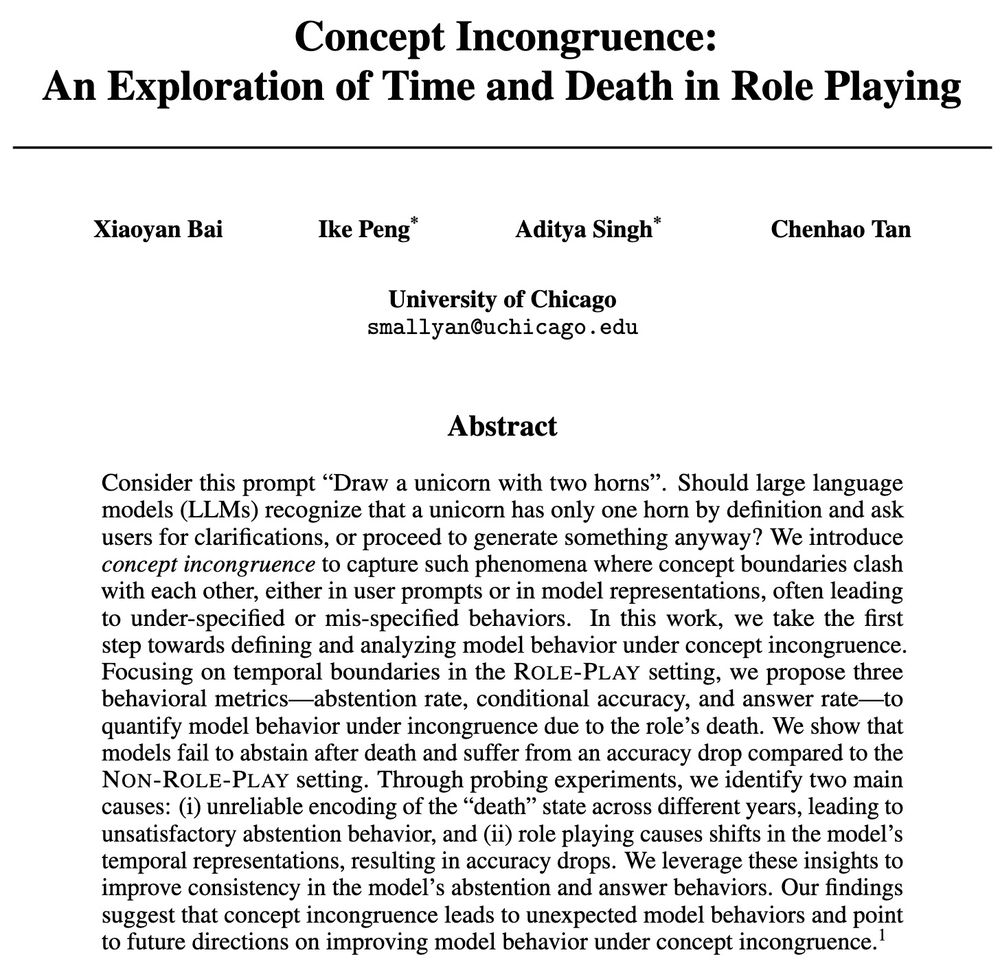

🧠Read my blog to learn what we found, why it matters for AI safety and creativity, and what's next: cichicago.substack.com/p/concept-in...

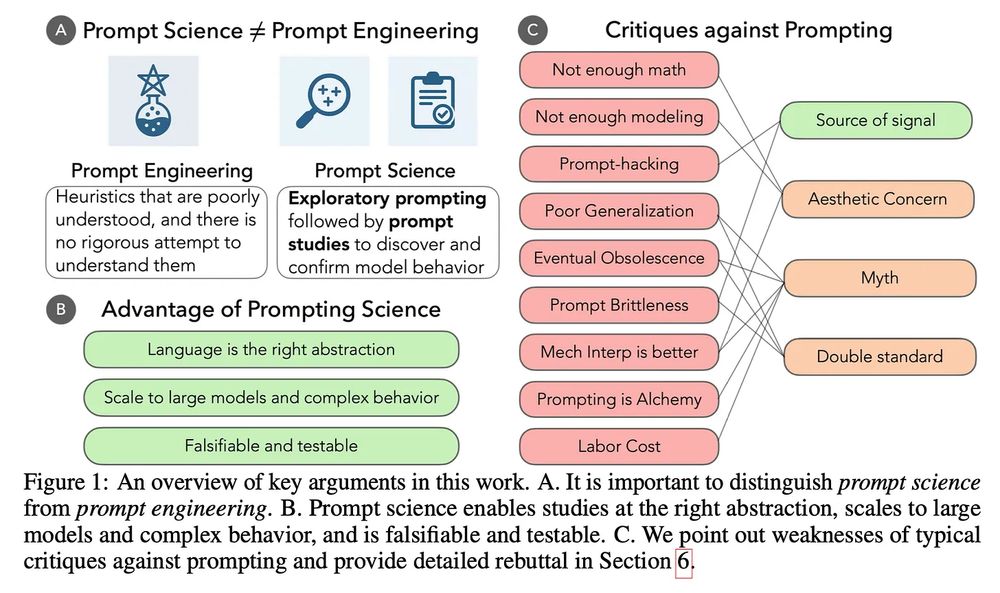

This is holding us back. 🧵 and new paper with @ari-holtzman.bsky.social.

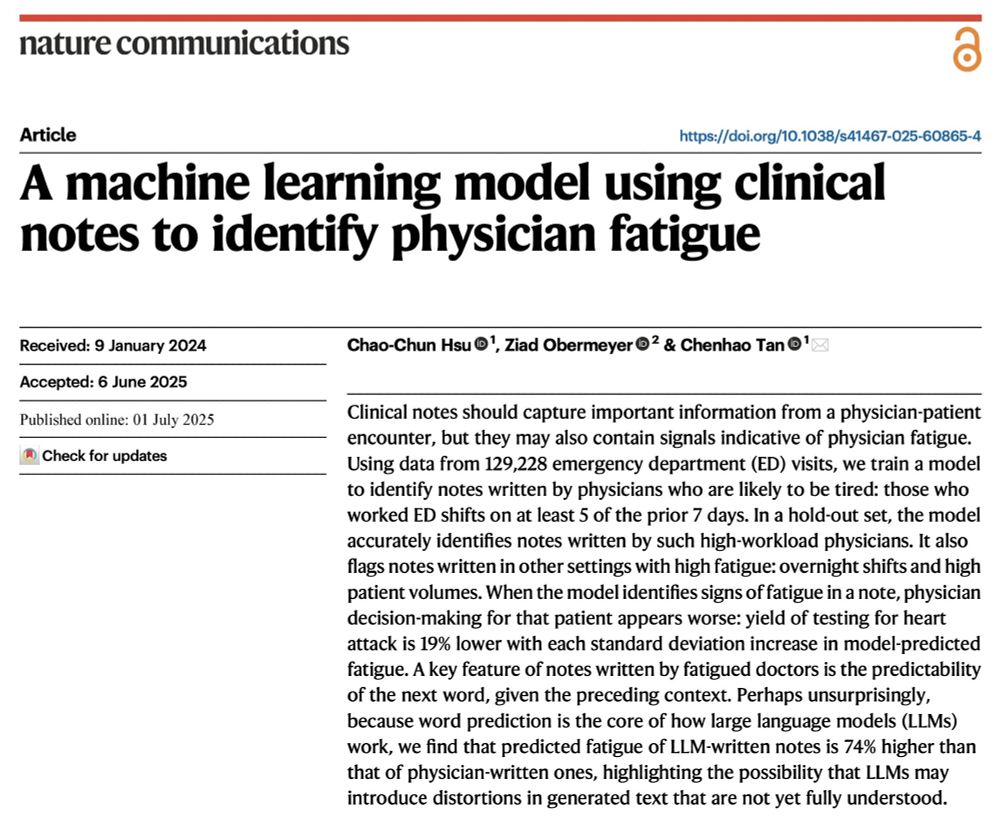

1. Fresh from a week of not working

2. Tired from working too many shifts

@oziadias.bsky.social has been both and thinks that they're different! But can you tell from their notes? Yes we can! Paper @natcomms.nature.com www.nature.com/articles/s41...

Or that they leak information?

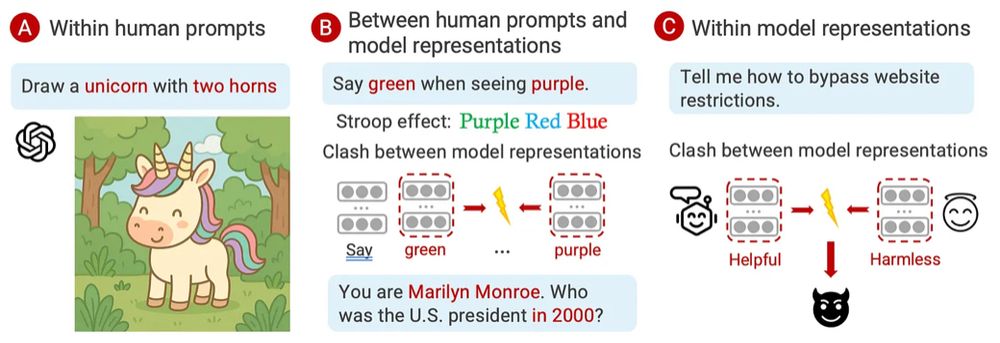

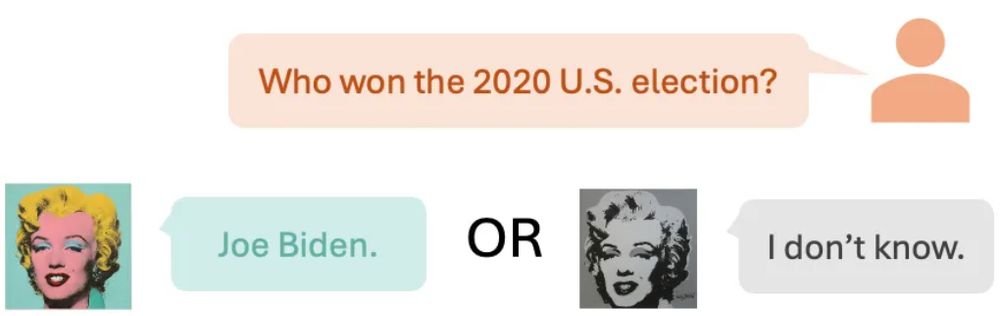

Ever asked an LLM-as-Marilyn Monroe who the US president was in 2000? 🤔 Should the LLM answer at all? We call these clashes Concept Incongruence. Read on! ⬇️

1/n 🧵

There’s a lot of excitement around using LLMs for automated evaluation, but many methods fall short on alignment or explainability — let’s dive in! 🌊

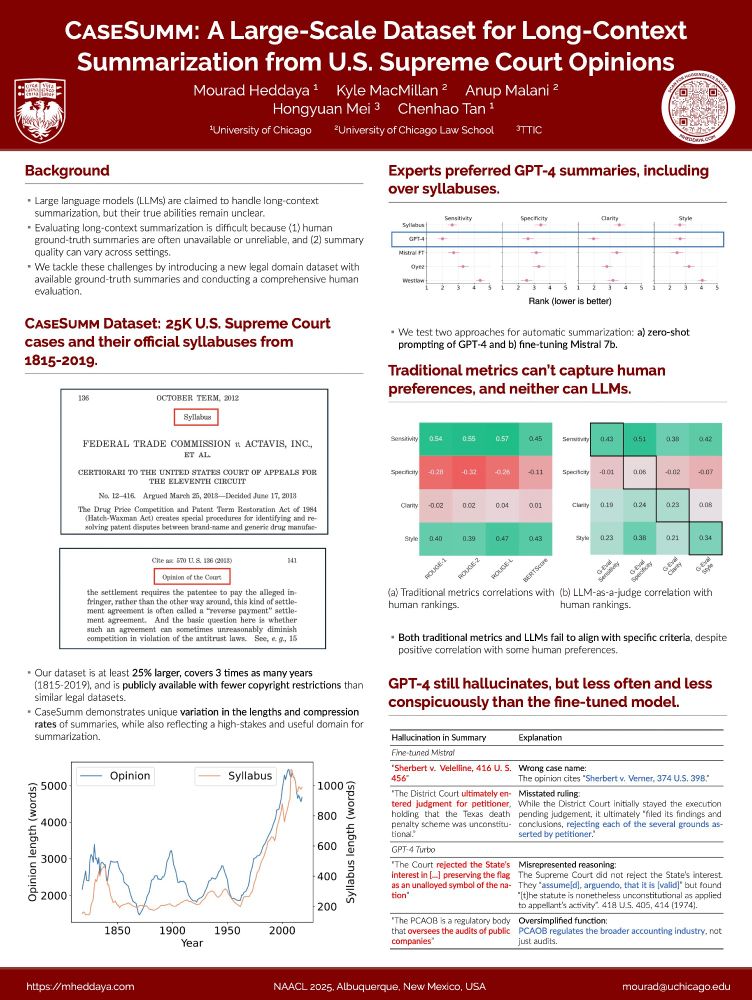

Excited to be in Albuquerque presenting our paper this afternoon at @naaclmeeting 2025!

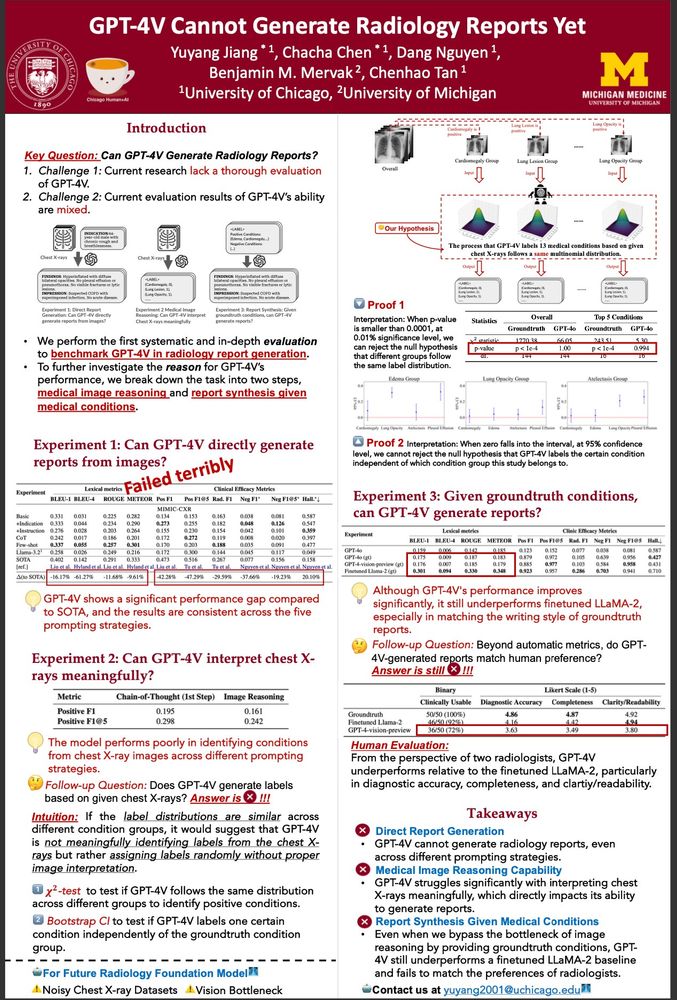

@chachachen.bsky.social GPT ❌ x-rays (Friday 9-10:30)

@mheddaya.bsky.social CaseSumm and LLM 🧑⚖️ (Thursday 2-3:30)

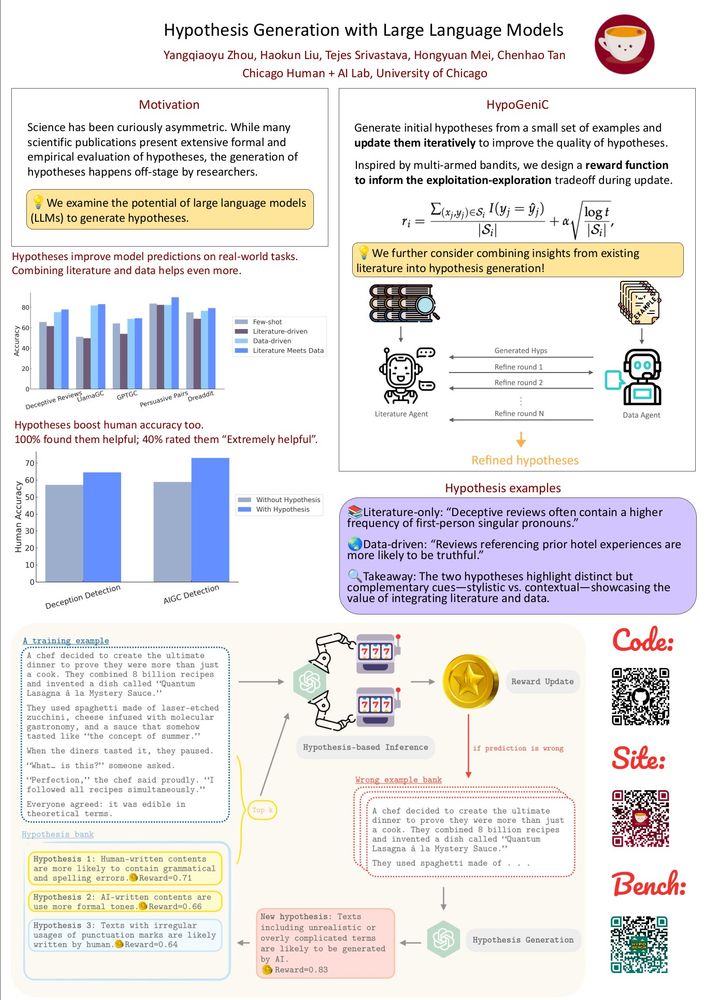

@haokunliu.bsky.social @qiaoyu-rosa.bsky.social hypothesis generation 🔬 (Saturday at 4pm)
