- AI 101 series

- ML techniques

- AI Unicorns profiles

- Global dynamics

- ML History

- AI/ML Flashcards

Haven't decided yet which handle to maintain: this or @kseniase

That's why researchers suggest focusing on improving "density" instead of just aiming for bigger and more powerful models.

• Costs to run models are dropping as they are becoming more efficient.

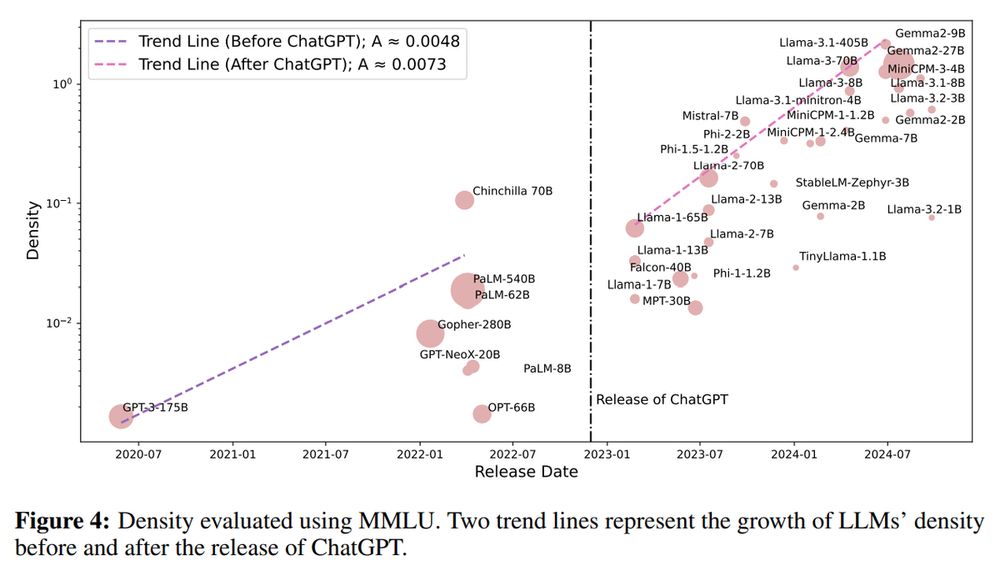

• The release of ChatGPT sped up the growth of efficiency of new models up to 50%!

• Techniques like pruning and distillation don’t necessarily make models more efficient.

It uses a two-step process:

- Loss Estimation: Links a model's size and training data to its language-modeling loss.

- Performance Estimation: Uses a sigmoid function to predict how well a model performs based on its loss.

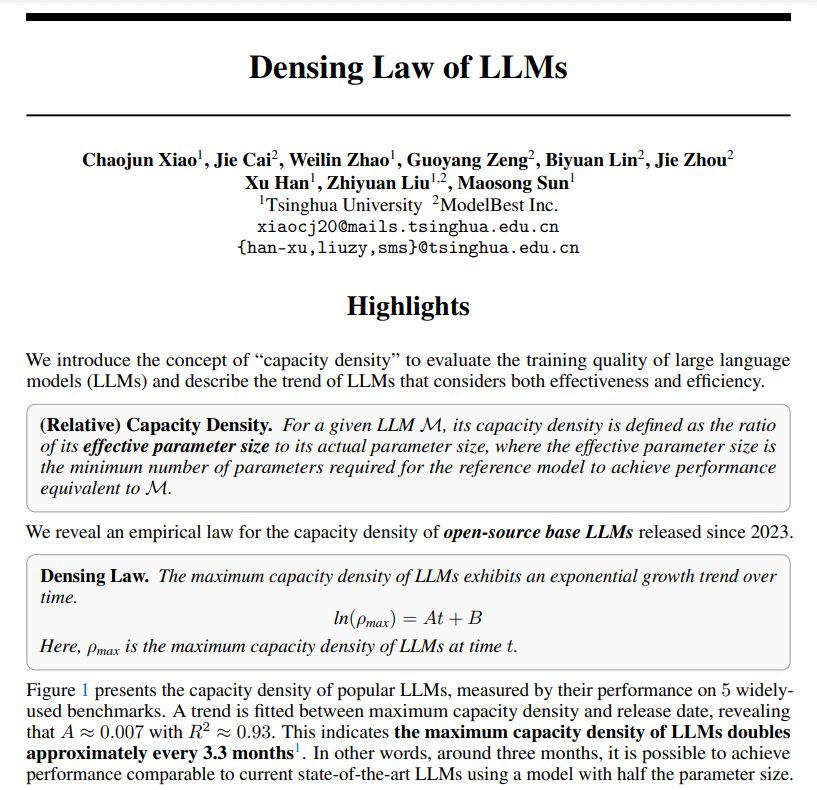

The density of a model is the ratio of its effective parameter size (the size a reference model would need to match its performance) to its actual parameter size.

If the effective size is close to or larger than the actual size, the model is very efficient.
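The two-step estimate and the density ratio above can be sketched end to end in Python. This is a minimal sketch, not the paper's code: the loss fit assumes a Chinchilla-style form L(N, D) = E + A·N^(-α) + B·D^(-β), every coefficient is an invented placeholder, and the function names are hypothetical. To get an effective size, it runs the two steps backwards: invert the sigmoid to find the loss a score implies, then solve the loss fit for the parameter count N.

```python
import math

# Placeholder coefficients for illustration only (assumptions, NOT fitted values).
E, A, ALPHA = 1.69, 406.4, 0.34                   # irreducible loss, parameter term
B, BETA = 410.7, 0.28                             # training-data term
C, GAMMA, L_MID, FLOOR = 0.8, 6.0, 2.3, 0.1       # sigmoid: range, steepness, midpoint, floor


def loss(n_params: float, n_tokens: float) -> float:
    """Step 1, loss estimation: scaling-law fit L(N, D)."""
    return E + A * n_params ** -ALPHA + B * n_tokens ** -BETA


def performance(loss_value: float) -> float:
    """Step 2, performance estimation: sigmoid from loss to a task score.
    With GAMMA > 0, a lower loss gives a higher score."""
    return C / (1.0 + math.exp(GAMMA * (loss_value - L_MID))) + FLOOR


def loss_from_score(score: float) -> float:
    """Invert the sigmoid (valid for FLOOR < score < FLOOR + C)."""
    return L_MID + math.log(C / (score - FLOOR) - 1.0) / GAMMA


def effective_params(score: float, ref_tokens: float) -> float:
    """Size N a reference model trained on ref_tokens would need to reach `score`."""
    param_term = loss_from_score(score) - E - B * ref_tokens ** -BETA
    if param_term <= 0:
        raise ValueError("score not reachable with this training-data budget")
    return (A / param_term) ** (1.0 / ALPHA)


def density(score: float, actual_params: float, ref_tokens: float) -> float:
    """Capacity density: effective parameter size / actual parameter size."""
    return effective_params(score, ref_tokens) / actual_params


if __name__ == "__main__":
    tokens = 2e12
    score = performance(loss(7e9, tokens))   # score of a 7B reference model
    print(density(score, 7e9, tokens))       # ~1.0: it behaves like its own size
    print(density(score, 3.5e9, tokens))     # ~2.0: a 3.5B model matching that score
```

With these placeholder numbers, the 7B reference model has density 1 by construction, and a hypothetical 3.5B model reaching the same score would have density 2.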

A higher-density model can deliver better results without needing more resources. This reduces computational costs, makes models suitable for resource-limited devices like smartphones, and avoids unnecessary energy use.

Interestingly, they found a trend they call the Densing Law:

The capacity density of LLMs doubles roughly every 3 months, meaning that newer models are getting much better at balancing performance and size.
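Doubling every 3 months is exponential growth, so the arithmetic compounds quickly. A rough sketch, assuming the 3-month period holds exactly and that density gains translate one-to-one into fewer parameters for the same performance; the function names and the 70B example are hypothetical.

```python
# Assumption: a fixed 3-month doubling period, taken from the figure above.
DOUBLING_MONTHS = 3.0


def density_multiplier(months: float, doubling: float = DOUBLING_MONTHS) -> float:
    """Growth factor of capacity density after `months` of exponential doubling."""
    return 2.0 ** (months / doubling)


def params_needed(reference_params: float, months: float) -> float:
    """Parameters a model would need `months` later to match a model of
    `reference_params`, if density keeps doubling on schedule (hypothetical)."""
    return reference_params / density_multiplier(months)


if __name__ == "__main__":
    for m in (0, 3, 6, 12):
        print(f"{m:>2} months: x{density_multiplier(m):g} density, "
              f"{params_needed(70e9, m) / 1e9:g}B params to match a 70B model")
```

Under those assumptions, matching a 70B model would take 35B parameters after 3 months and about 4.4B after a year (a 16× density gain).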

Let's look at this more precisely:

Transforms a single image into interactive 3D scenes with varied art styles and realistic physics. You can explore, interact with elements, and move within AI-generated environments directly in your web browser.

www.youtube.com/watch?v=lPYJ...

Generates 3D environments with object interactions, animations, and physical effects from one image or text prompt. You can interact with them in real-time using a keyboard and mouse.

Blog post: deepmind.google/discover/blo...

Our example: www.youtube.com/watch?v=YjO6...

- Flow Matching (FM) concepts for optimizing the path from noise to realistic data

- Continuous Normalizing Flows (CNFs)

- Conditional Flow Matching

- How FM differs from diffusion models

Find out more: turingpost.com/p/flowmatching
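Conditional Flow Matching trains a model to regress a velocity field that carries noise to data. A minimal sketch in plain Python, assuming the common straight-line conditional path x_t = (1 - t)·x0 + t·x1 between a Gaussian sample x0 and a data point x1, whose target velocity is simply x1 - x0. The helper names and the toy velocity functions are hypothetical stand-ins for a neural network.

```python
import random


def cfm_target(x0: list[float], x1: list[float], t: float):
    """Point x_t on the straight path from noise x0 to data x1, and its velocity."""
    xt = [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]
    ut = [b - a for a, b in zip(x0, x1)]  # d/dt of x_t, constant along the path
    return xt, ut


def cfm_loss(velocity, data: list[list[float]], n_samples: int = 256, seed: int = 0) -> float:
    """Monte Carlo estimate of E_{t, x0, x1} ||v(x_t, t) - (x1 - x0)||^2,
    with t ~ U[0, 1), x0 ~ N(0, I) and x1 drawn from `data`."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        x1 = rng.choice(data)
        x0 = [rng.gauss(0.0, 1.0) for _ in x1]
        t = rng.random()
        xt, ut = cfm_target(x0, x1, t)
        v = velocity(xt, t)
        total += sum((vi - ui) ** 2 for vi, ui in zip(v, ut))
    return total / n_samples


if __name__ == "__main__":
    data = [[2.0, -1.0]]  # toy one-point "dataset"

    def zero_field(x, t):
        return [0.0 for _ in x]

    def exact_field(x, t):
        # For a single data point, x1 - x0 equals (x1 - x_t) / (1 - t).
        return [(d - xi) / (1.0 - t) for d, xi in zip(data[0], x)]

    print(cfm_loss(zero_field, data), cfm_loss(exact_field, data))
```

For a one-point dataset, the exact field v(x, t) = (x1 - x)/(1 - t) drives the loss to zero, while the all-zero field does not. Generating samples would then mean integrating dx/dt = v(x, t) from t = 0 to t = 1 with an ODE solver, which this sketch leaves out.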

www.turingpost.com/subscribe

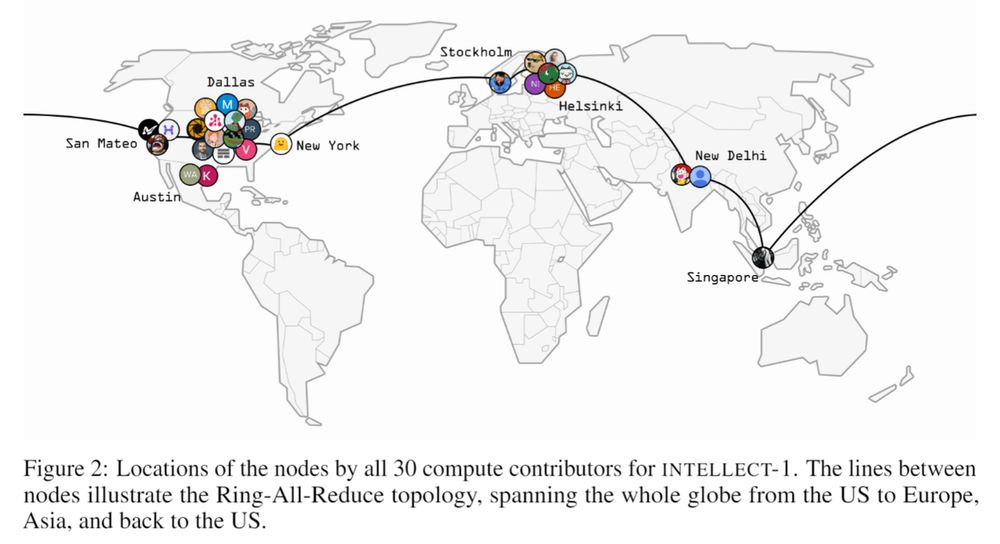

INTELLECT-1 is a 10B open-source LLM trained over 42 days on 1T tokens across 14 nodes around the globe. It leverages the PRIME framework for exceptional efficiency (a 400× reduction in communication bandwidth).

github.com/PrimeIntelle...

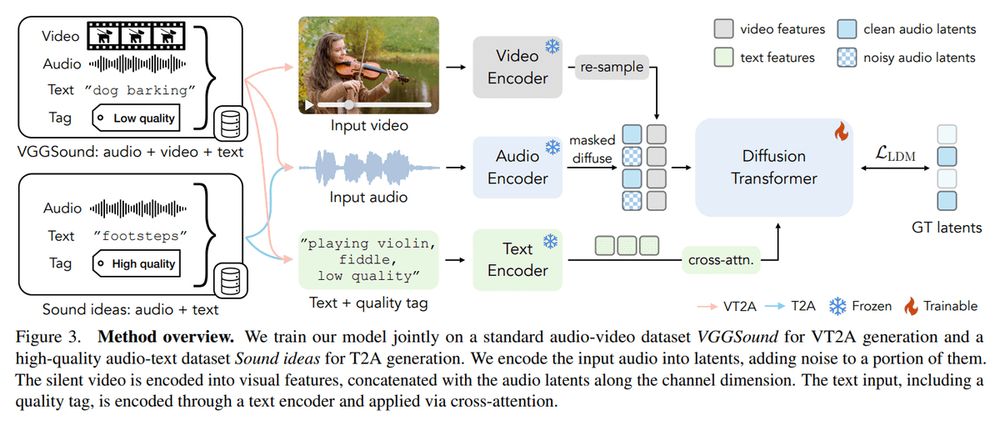

MultiFoley is an AI model that generates high-quality sound effects from text, audio, and video inputs. Cool demos highlight its creative potential.

arxiv.org/abs/2411.17698

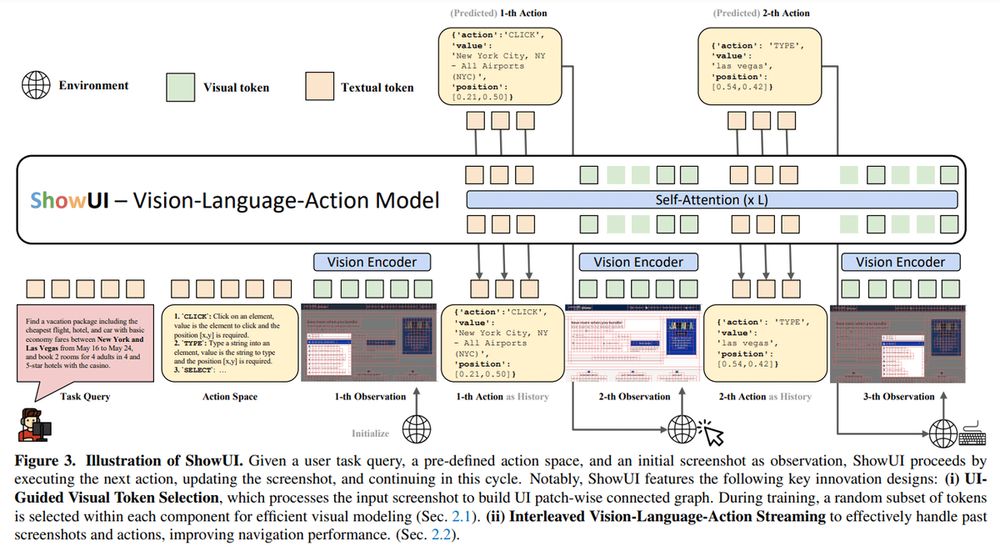

ShowUI is a 2B vision-language-action model tailored for GUI tasks:

- uses UI-guided visual token selection (33% fewer tokens)

- supports interleaved streaming for multi-turn tasks

- is trained on a 256K-sample dataset

- achieves 75.1% zero-shot grounding accuracy

arxiv.org/abs/2411.17465
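The ShowUI paper frames a screenshot as a UI connected graph of patches and uses its redundancy to decide which visual tokens to select. A simplified sketch of that grouping idea, assuming that neighbouring patches count as redundant when their colors are identical; the 4-neighbour rule, the toy color grid, and the "keep one token per component" policy are illustrative assumptions, not ShowUI's exact selection method.

```python
def find(parent: list[int], i: int) -> int:
    """Union-find root lookup with path halving."""
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i


def ui_components(colors: list[list[tuple[int, int, int]]]) -> list[int]:
    """Label each patch (row-major) with its component root, linking
    right/down neighbours whose colors match (assumed redundancy rule)."""
    rows, cols = len(colors), len(colors[0])
    parent = list(range(rows * cols))
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((0, 1), (1, 0)):
                nr, nc = r + dr, c + dc
                if nr < rows and nc < cols and colors[r][c] == colors[nr][nc]:
                    a, b = find(parent, r * cols + c), find(parent, nr * cols + nc)
                    parent[a] = b
    return [find(parent, i) for i in range(rows * cols)]


def select_tokens(colors: list[list[tuple[int, int, int]]]) -> list[int]:
    """Hypothetical policy: keep the first patch index of each component."""
    seen, keep = set(), []
    for idx, root in enumerate(ui_components(colors)):
        if root not in seen:
            seen.add(root)
            keep.append(idx)
    return keep


if __name__ == "__main__":
    W, B = (255, 255, 255), (0, 0, 255)   # white background, one blue button
    screen = [[W, W, W, W],
              [W, B, B, W],
              [W, W, W, W]]
    kept = select_tokens(screen)
    print(f"kept {len(kept)} of {len(screen) * len(screen[0])} tokens: {kept}")
```

Keeping a single token per component is far more aggressive than the 33% reduction ShowUI reports; it is used here only to make the grouping visible. Flat regions such as blank backgrounds collapse into one component, while detailed UI elements keep more tokens.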

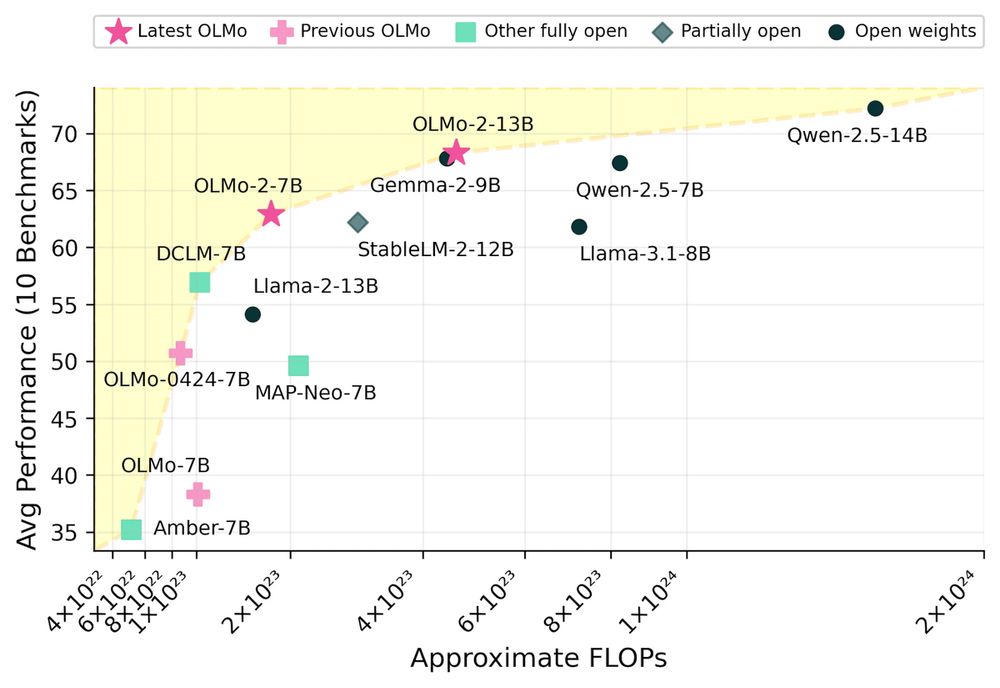

OLMo 2, a family of fully open LMs with 7B and 13B parameters, is trained on 5 trillion tokens.

allenai.org/blog/olmo2

It impresses with strong math, coding, and reasoning scores, ranking between Claude 3.5 Sonnet and OpenAI’s o1-mini.

- Optimized for consumer GPUs through quantization

- Open-sourced under Apache 2.0, with open weights and visible reasoning tokens

huggingface.co/Qwen/QwQ-32B...
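Why quantization matters for consumer GPUs comes down to simple arithmetic: weight memory is roughly parameters × bits per weight / 8 bytes. A rough sketch, assuming about 32B parameters (as the model name in the truncated link suggests) and counting weights only, so the KV cache, activations, and quantization scales are ignored; `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Weights-only memory in GB (1 GB = 1e9 bytes). Ignores KV cache,
    activations, and quantization metadata such as scales."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit: {weight_memory_gb(32e9, bits):.0f} GB")
```

By this estimate the weights shrink from about 64 GB at 16-bit to about 16 GB at 4-bit, which brings a model of this size much closer to the memory of a single high-end consumer GPU.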

Also, elevate your AI game with our free newsletter ↓

www.turingpost.com/subscribe

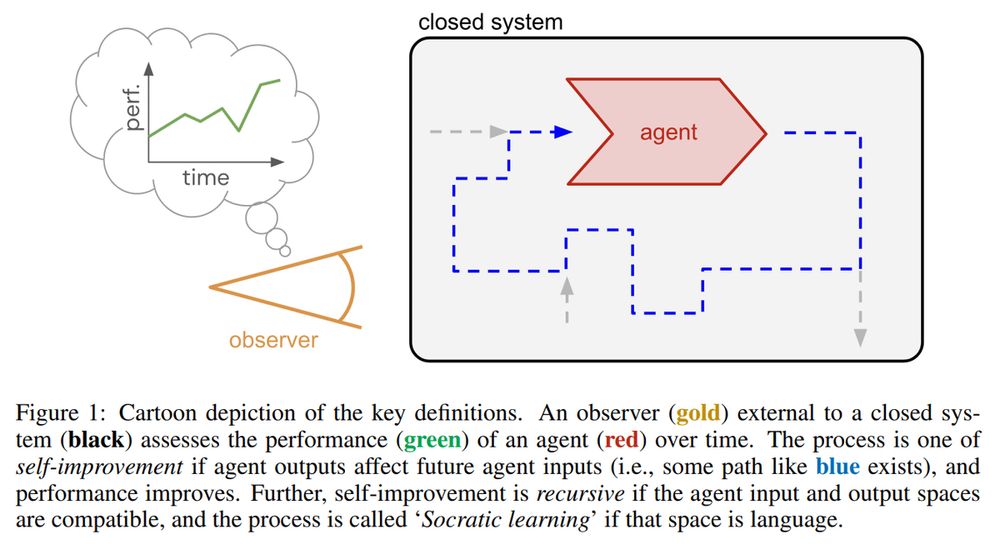

This framework leverages recursive language-based "games" for self-improvement, focusing on feedback, coverage, and scalability. It suggests a roadmap for scalable AI via autonomous data generation and feedback loops.

arxiv.org/abs/2411.16905
