Martin Trapp

@trappmartin.bsky.social

Assistant Prof in ML @ KTH 🇸🇪.

Previous: Aalto University 🇫🇮, TU Graz 🇦🇹, originally from 🇩🇪.

Doing: Reliable ML | uncertainty stuff | Bayesian stats | probabilistic circuits

https://trappmartin.github.io/

The mathematical bridge.

September 26, 2025 at 9:33 PM

I mean, it did start with a rather Illuminati-cult-like logo.

September 11, 2025 at 7:47 AM

Swimming in foggy water with friends, what else could one wish for. 🤩

August 30, 2025 at 8:56 AM

Considering how “warm” I feel now, this was probably the last swim of the year. But what a beautiful swim. 🤩

August 18, 2025 at 6:24 PM

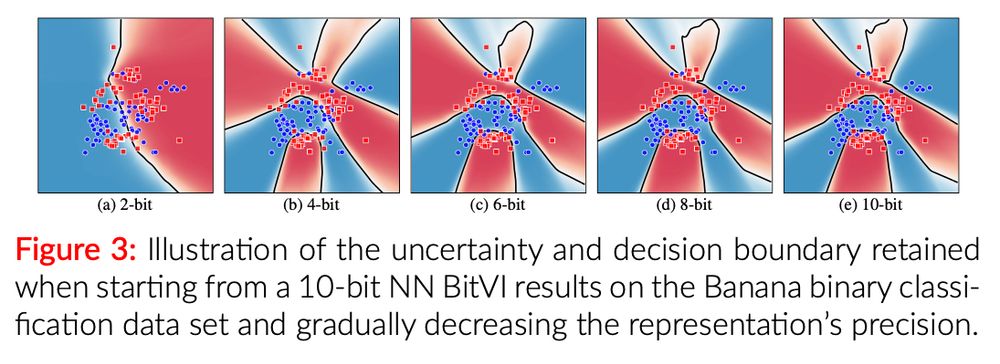

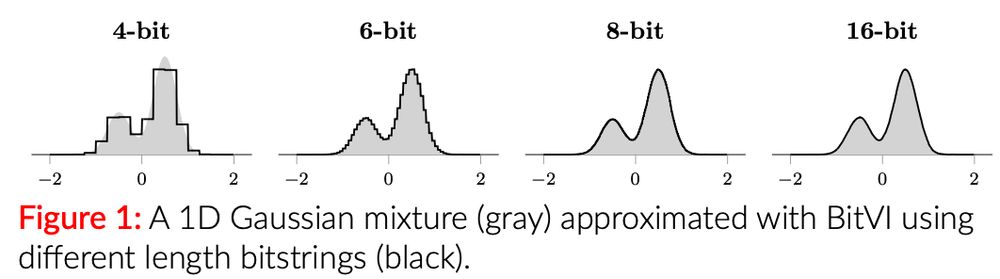

Moreover, #BitVI can help identify the numerical precision required to represent a target density, a crucial task when quantising neural networks and when working in low-precision regimes.

July 21, 2025 at 11:41 AM
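To give a feel for what "the precision required to represent a target density" can mean, here is a toy check, not BitVI's procedure (BitVI learns its approximation variationally). For each number of fractional bits F, it measures the total variation between a standard normal and the best piecewise-uniform density on a fixed-point grid of spacing 2^-F over [-4, 4), then picks the smallest F under a tolerance. The target, range, tolerance, and the names `tv_to_fixed_point` and `required_frac_bits` are all assumptions for illustration.

```python
import math


def normal_cdf(x: float) -> float:
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def normal_pdf(x: float) -> float:
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def tv_to_fixed_point(frac_bits: int, lo: float = -4.0, hi: float = 4.0, sub: int = 64) -> float:
    """Total variation between N(0, 1) and the piecewise-uniform density that spreads the
    exact target mass of each fixed-point bin (width 2**-frac_bits) uniformly over that bin."""
    delta = 2.0 ** -frac_bits
    n_bins = int(round((hi - lo) / delta))
    h = delta / sub  # midpoint-rule step inside each bin
    l1 = 0.0
    for k in range(n_bins):
        a = lo + k * delta
        q_density = (normal_cdf(a + delta) - normal_cdf(a)) / delta
        l1 += h * sum(abs(normal_pdf(a + (j + 0.5) * h) - q_density) for j in range(sub))
    # Target mass outside [lo, hi) cannot be represented by the grid at all.
    l1 += normal_cdf(lo) + (1.0 - normal_cdf(hi))
    return 0.5 * l1


def required_frac_bits(tol: float, max_bits: int = 10):
    """Smallest number of fractional bits whose grid gets within `tol` in total variation."""
    for f in range(max_bits + 1):
        if tv_to_fixed_point(f) <= tol:
            return f
    return None  # tolerance not reachable within max_bits (or below the truncated tail mass)


f = required_frac_bits(tol=0.01)
print(f, tv_to_fixed_point(f))
```

Swapping total variation for a KL divergence, or the normal for a heavier-tailed target, changes the answer; the point is only that the required precision is a property of the target you can measure.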

In #BitVI, we exploit the fixed-point representation of numbers and a tractable variational approximation based on #circuits. This enables efficient ELBO computation and control over the numerical precision. Additionally, BitVI extends easily to other number systems, such as floating-point.

July 21, 2025 at 11:41 AM
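Why tractability matters for the ELBO can be shown with the simplest possible circuit: a single product node over independent Bernoulli leaves, one per bit. BitVI's circuits are more expressive than this; the factorised choice, evaluating log p at bin midpoints, and the names `decode`, `elbo` and `theta` are assumptions for this sketch. Each bitstring indexes one fixed-point bin of width 2^-F, so q is piecewise uniform. Bit independence turns the entropy into a sum of per-bit terms, and for a handful of bits the expected log-target can be enumerated exactly.

```python
import math
from itertools import product
from typing import Callable, Sequence


def decode(bits: Sequence[int], int_bits: int, frac_bits: int) -> float:
    """Two's-complement fixed-point value of a bitstring (most significant bit first)."""
    n = int_bits + frac_bits
    value = 0
    for b in bits:
        value = (value << 1) | b
    if bits[0]:  # sign bit set
        value -= 1 << n
    return value / (1 << frac_bits)


def bernoulli_entropy(t: float) -> float:
    if t <= 0.0 or t >= 1.0:
        return 0.0
    return -(t * math.log(t) + (1.0 - t) * math.log(1.0 - t))


def elbo(theta: Sequence[float], log_target: Callable[[float], float],
         int_bits: int, frac_bits: int) -> float:
    """ELBO for q over fixed-point bins with independent bits, P(bit i = 1) = theta[i]."""
    if len(theta) != int_bits + frac_bits:
        raise ValueError("need one Bernoulli parameter per bit")
    delta = 2.0 ** -frac_bits
    expected_log_p = 0.0
    for bits in product((0, 1), repeat=len(theta)):  # exact enumeration of 2**n bitstrings
        q = math.prod(t if b else 1.0 - t for b, t in zip(bits, theta))
        expected_log_p += q * log_target(decode(bits, int_bits, frac_bits) + delta / 2)
    # Independent bits: the entropy is a sum of per-bit terms; adding log(delta) turns the
    # discrete entropy into the differential entropy of the piecewise-uniform density.
    entropy = sum(bernoulli_entropy(t) for t in theta) + math.log(delta)
    return expected_log_p + entropy


def log_normal(x: float, mu: float = 1.0, sigma: float = 0.5) -> float:
    return -0.5 * ((x - mu) / sigma) ** 2 - math.log(sigma * math.sqrt(2.0 * math.pi))


uniform_bits = [0.5] * 5                    # uniform over all 32 bins in [-4, 4)
focused_bits = [0.02, 0.02, 0.5, 0.5, 0.5]  # mass concentrated on the bins in [0, 2)
print(elbo(uniform_bits, log_normal, 3, 2), elbo(focused_bits, log_normal, 3, 2))
```

A fully factorised q cannot couple the sign bit with the magnitude bits, which is one reason to reach for a richer circuit.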

Remember that computers use bitstrings to represent numbers? We exploit this in our recent @auai.org paper and introduce #BitVI.

#BitVI directly learns an approximation in the space of bitstring representations, thereby capturing complex distributions across varying numerical-precision regimes.

July 21, 2025 at 11:41 AM
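To make "the space of bitstring representations" concrete: an n-bit fixed-point format only ever expresses 2^n values on a uniform grid, so a distribution over bitstrings is a distribution over that grid. This is a minimal sketch assuming a signed two's-complement format with `int_bits` integer bits (including the sign) and `frac_bits` fractional bits; `decode_fixed_point` and `representable_values` are hypothetical names, not BitVI's code.

```python
from itertools import product
from typing import List, Sequence


def decode_fixed_point(bits: Sequence[int], int_bits: int, frac_bits: int) -> float:
    """Map a two's-complement bitstring (most significant bit first) to a real number."""
    n = int_bits + frac_bits
    if len(bits) != n:
        raise ValueError(f"expected {n} bits, got {len(bits)}")
    value = 0
    for b in bits:
        value = (value << 1) | b
    if bits[0] == 1:  # sign bit set: interpret as negative (two's complement)
        value -= 1 << n
    return value / (1 << frac_bits)


def representable_values(int_bits: int, frac_bits: int) -> List[float]:
    """Every number the format can express: a uniform grid with spacing 2**-frac_bits."""
    n = int_bits + frac_bits
    return sorted(decode_fixed_point(bits, int_bits, frac_bits)
                  for bits in product((0, 1), repeat=n))


grid = representable_values(int_bits=2, frac_bits=2)
print(grid[0], grid[-1], grid[1] - grid[0])  # -2.0 1.75 0.25
```

Adding fractional bits refines the grid; adding integer bits widens its range.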

Life could be worse.

July 11, 2025 at 7:05 PM

Fixed it for you...

June 18, 2025 at 4:59 PM

Paris was such a treat! Back to 🇫🇮!

June 10, 2025 at 5:06 PM

Big tech made it possible to finally have one of the most important problems of mankind solved.

I give you… ChickenZilla!!!!

April 28, 2025 at 11:53 AM

We had a pretty dystopian atmosphere one evening in Jakarta with heavy rain. So I made it look even more dystopian. 😅

April 15, 2025 at 3:27 PM

Crazy evening sky today in Helsinki.

April 2, 2025 at 5:58 PM

Feeling in meme mode today. 😅

March 22, 2025 at 2:18 PM

Instead of increasing the inference (prediction) cost by a factor of ~5–70× (depending on the method), you can now make predictions with almost no additional overhead.

March 19, 2025 at 9:32 AM

Because we effectively turn the NN into a multilinear function, we can also assess the model's sensitivity to input perturbations. For example, we can estimate sensitivity maps by learning Gaussian densities over the input covariates and visualising the resulting per-input sensitivities.

March 19, 2025 at 9:32 AM
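The reason a (locally) linear model makes sensitivity cheap can be sketched with a first-order expansion: if x ~ N(mu, diag(sigma^2)) and f is linear around mu, then Var[f(x)] is approximately the sum over inputs of (df/dx_i)^2 sigma_i^2, and each term is that input's share of the output variance. This sketch fixes the input density rather than learning it, uses finite differences on a hypothetical toy network, and `sensitivity_map` is an illustrative name, not the paper's code.

```python
import math
from typing import Callable, List, Sequence


def sensitivity_map(f: Callable[[Sequence[float]], float], mu: Sequence[float],
                    sigma: Sequence[float], eps: float = 1e-5) -> List[float]:
    """Per-input first-order contribution to Var[f(x)] for x ~ N(mu, diag(sigma**2)).

    For f linear around mu, Var[f(x)] is approx. sum_i (df/dx_i)**2 * sigma_i**2.
    """
    contributions = []
    for i in range(len(mu)):
        up, down = list(mu), list(mu)
        up[i] += eps
        down[i] -= eps
        grad_i = (f(up) - f(down)) / (2.0 * eps)  # central finite difference
        contributions.append(grad_i ** 2 * sigma[i] ** 2)
    return contributions


weights = [2.0, -0.5, 0.0]  # hypothetical toy network: tanh(w . x)


def toy_net(x: Sequence[float]) -> float:
    return math.tanh(sum(w * xi for w, xi in zip(weights, x)))


smap = sensitivity_map(toy_net, mu=[0.1, 0.2, 0.3], sigma=[0.1, 0.1, 0.1])
print(smap)  # the first input dominates; the third has zero weight and zero sensitivity
```

For an image model, reshaping these per-input contributions back to the image grid gives a sensitivity map.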

We ask how to obtain a fast and practical approximation of the posterior predictive distribution in BNNs.

Our "Streamlining" approach achieves this by approximating the posterior predictive with little computational overhead through local linearisations and local Gaussian approximations.

March 19, 2025 at 9:32 AM
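The two ingredients named above can be illustrated with a single deterministic pass that carries a mean and a variance per unit. This is a rough sketch, not the exact Streamlining algorithm. It assumes a mean-field Gaussian posterior over weights, deterministic biases, tanh activations, and independent units. Linear layers propagate moments in closed form (a local Gaussian approximation), and the activation is linearised at its incoming mean. All names and numbers here are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec = List[float]
Layer = Tuple[List[List[float]], List[List[float]], List[float]]  # (weight means, weight variances, bias)


def linear_moments(m: Sequence[float], v: Sequence[float], layer: Layer) -> Tuple[Vec, Vec]:
    """Mean and variance of z = W x + b with independent Gaussian weights and inputs.

    Uses Var[W_ij x_j] = M^2 v + S m^2 + S v and drops covariances between units.
    """
    w_mean, w_var, bias = layer
    out_m, out_v = [], []
    for m_row, s_row, b in zip(w_mean, w_var, bias):
        out_m.append(sum(mw * mj for mw, mj in zip(m_row, m)) + b)
        out_v.append(sum(mw * mw * vj + sw * mj * mj + sw * vj
                         for mw, sw, mj, vj in zip(m_row, s_row, m, v)))
    return out_m, out_v


def tanh_linearised(m: Sequence[float], v: Sequence[float]) -> Tuple[Vec, Vec]:
    """Local linearisation of tanh at the mean: mean -> tanh(m), var -> tanh'(m)^2 * var."""
    out_m = [math.tanh(mi) for mi in m]
    out_v = [(1.0 - t * t) ** 2 * vi for t, vi in zip(out_m, v)]
    return out_m, out_v


def predictive_moments(x: Sequence[float], layers: Sequence[Layer]) -> Tuple[Vec, Vec]:
    """One forward pass: approximate predictive mean and variance for each output."""
    m, v = list(x), [0.0] * len(x)  # the test input itself is known exactly
    for i, layer in enumerate(layers):
        m, v = linear_moments(m, v, layer)
        if i < len(layers) - 1:  # no activation after the output layer
            m, v = tanh_linearised(m, v)
    return m, v


layers = [  # hypothetical posterior moments for a 2-2-1 network
    ([[0.5, -0.3], [0.8, 0.1]], [[0.01, 0.02], [0.01, 0.01]], [0.0, 0.1]),
    ([[1.0, -1.0]], [[0.05, 0.05]], [0.0]),
]
mean, var = predictive_moments([1.0, 2.0], layers)
print(mean, var)
```

The cost is roughly two ordinary forward passes, instead of averaging many sampled ones; with all weight variances set to zero it reduces to the plain network output with zero variance.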

Are you going to be at #WACV and want to know if “Flatness Improves Backbone Generalisation in Few-shot Classification”?

Then join the oral presentation by @ruili-pml.bsky.social of our paper!

🔗 lnkd.in/dBMmN7Vs

Done together with @marcusklasson.bsky.social and @arnosolin.bsky.social.

February 23, 2025 at 4:54 PM

So much new snow tonight in Helsinki. Amazing 🤩

February 16, 2025 at 8:31 PM

What a beautiful day today. ☀️

February 1, 2025 at 1:22 PM

I’m so excited to test the old telescope that we found in the back of the garage. Night sky awaits.

December 29, 2024 at 5:44 PM
