But also a slog.

We spent the last year (actually a bit longer) training an LLM with recurrent depth at scale.

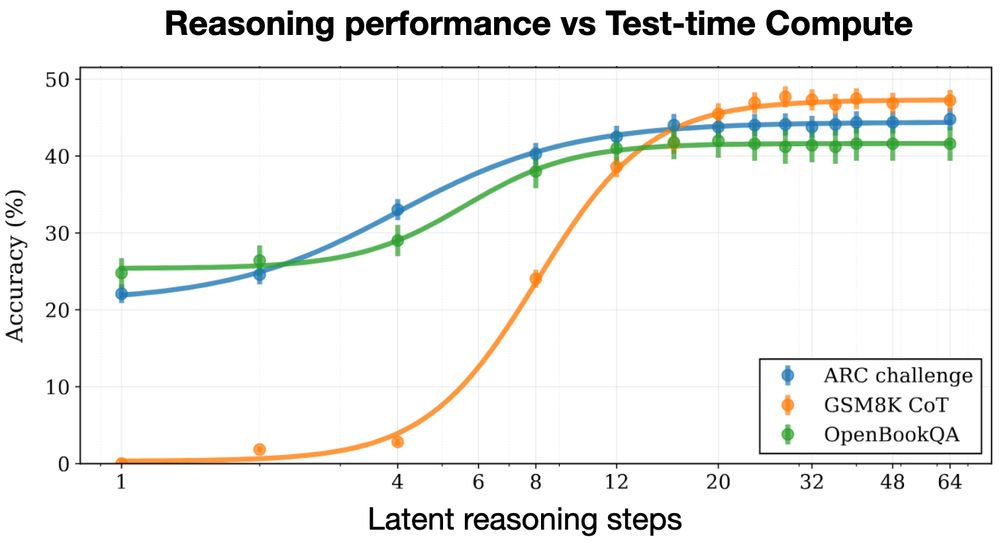

The model has an internal latent space in which it can adaptively spend more compute to think longer.

I think the tech report ...🐦‍⬛
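To make the idea of "spending more compute in latent space" concrete, here is a toy sketch of recurrent depth: the same block is applied to a latent state over and over, and the loop stops early once the state stops changing. Everything here is an illustrative assumption rather than the Huginn architecture: `core_block`, `recurrent_depth`, the tanh update, and the convergence threshold `tol` are hypothetical stand-ins.

```python
import math


def core_block(state, embedded):
    """Hypothetical shared block: reused at every step (weights are 'tied').

    A real model would use transformer layers here; this toy update simply
    pulls the latent state toward tanh(input), which is enough to show the loop.
    """
    return [0.5 * s + 0.5 * math.tanh(e) for s, e in zip(state, embedded)]


def recurrent_depth(embedded, max_steps=64, tol=1e-4):
    """Iterate the same block on a latent state, stopping when it settles.

    Returns the final latent state and the number of steps actually used.
    The stopping rule (max absolute change below `tol`) is an assumption
    chosen for illustration, not the criterion from the tech report.
    """
    state = [0.0] * len(embedded)
    steps_used = 0
    for step in range(1, max_steps + 1):
        new_state = core_block(state, embedded)
        delta = max(abs(a - b) for a, b in zip(new_state, state))
        state = new_state
        steps_used = step
        if delta < tol:
            break  # latent state has settled: stop "thinking"
    return state, steps_used


if __name__ == "__main__":
    latent, steps = recurrent_depth([0.1, -2.0, 3.0])
    print(f"settled after {steps} steps")
```

The point of the sketch is only that depth becomes a runtime knob: raising `max_steps` or tightening `tol` buys more iterations without adding parameters or emitting extra tokens.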

Huginn-3.5B reasons implicitly in latent space 🧠

Unlike o1 and R1, latent reasoning doesn't need special chain-of-thought training data, and it doesn't produce extra CoT tokens at test time.

We trained on 800B tokens 👇

The workshops always have tons of interesting things on at once, so the FOMO is real 😵‍💫 Luckily it's all recorded, so I've been catching up on what I missed.

Thread below with some personal highlights🧵

The v3 model is an MoE with 37B (out of 671B) active parameters. Let's compare to the cost of a 34B dense model. 🧵
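As a rough back-of-the-envelope for that comparison, the sketch below uses only the three parameter counts from the post. It assumes the common rule of thumb of about 6 FLOPs per active parameter per training token, which ignores attention cost, routing overhead, and memory or communication costs; the function and constant names are made up for illustration.

```python
# Parameter counts as stated in the post.
MOE_ACTIVE_PARAMS = 37e9    # parameters used per token in the MoE
MOE_TOTAL_PARAMS = 671e9    # all parameters in the MoE
DENSE_PARAMS = 34e9         # the dense model used for comparison


def train_flops_per_token(active_params):
    """Approximate training FLOPs per token (forward + backward).

    Assumption: the ~6 * N rule of thumb, where N is the number of
    parameters that actually participate in processing each token.
    """
    return 6 * active_params


# Share of the MoE's parameters that are active for any one token.
active_fraction = MOE_ACTIVE_PARAMS / MOE_TOTAL_PARAMS

# Per-token compute of the MoE relative to the dense model.
compute_ratio = (
    train_flops_per_token(MOE_ACTIVE_PARAMS)
    / train_flops_per_token(DENSE_PARAMS)
)

if __name__ == "__main__":
    print(f"active fraction of MoE parameters: {active_fraction:.1%}")
    print(f"MoE vs. dense compute per token:   {compute_ratio:.2f}x")
```

Under this approximation the per-token compute of the two models is within about 10% of each other, even though the MoE stores roughly 20 times more parameters, which is why a 34B dense model is a reasonable cost baseline.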