Our dynamixel-api package lets you control any Dynamixel-based gripper directly from Python.

🔗 github.com/TimSchneider...

Special thanks to Erik Helmut!

🔗 github.com/TimSchneider...

But please don't tell Franka Robotics 🤫, because using their API like that is probably illegal.

franky exposes most libfranka functionality in its Python API:

🔧 Redefine end-effector properties

⚖️ Tune joint impedance

🛑 Set force/torque thresholds

…and much more!

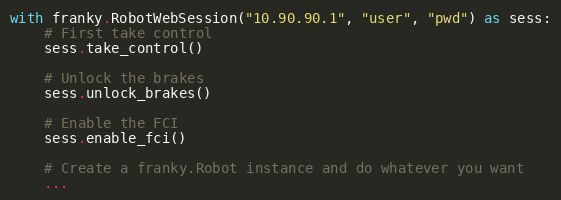

No ROS nodes. No launch files. Just five lines of Python.

1️⃣ Install a real-time kernel

2️⃣ Grant real-time permissions to your user

3️⃣ pip install franky-control

…and you’re ready to control your Franka robot!

With franky, you get real-time control in both C++ & Python: commands are fully preemptible, and Ruckig replans smooth trajectories on the fly.
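
To make "preemptible" concrete, here is a toy 1-D sketch in plain Python. It is not Ruckig and not franky's API: the `State`, `step`, and `simulate` names, the limits, and the simple braking rule are all assumptions made for illustration (Ruckig additionally limits jerk). The point is only that the target can change mid-motion while velocity stays continuous and the limits are respected.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class State:
    pos: float = 0.0
    vel: float = 0.0


def step(state, target, v_max, a_max, dt):
    """Advance one control cycle toward `target` under velocity/acceleration limits."""
    error = target - state.pos
    # Speed from which constant braking at a_max stops after |error| (continuous-time rule).
    v_brake = math.sqrt(2.0 * a_max * abs(error))
    v_desired = math.copysign(min(v_max, v_brake), error)
    # The velocity may change by at most a_max * dt per cycle.
    dv = max(-a_max * dt, min(a_max * dt, v_desired - state.vel))
    vel = state.vel + dv
    return State(state.pos + vel * dt, vel)


def simulate(schedule, v_max=1.0, a_max=2.0, dt=0.001):
    """Run a list of (target, n_steps) pairs; each new pair preempts the previous target."""
    state = State()
    trace = [state]
    for target, n_steps in schedule:
        for _ in range(n_steps):
            state = step(state, target, v_max, a_max, dt)
            trace.append(state)
    return trace


# Head toward +1.0, then preempt after 0.4 s (still moving) with a new target of -0.5.
trace = simulate([(1.0, 400), (-0.5, 5000)])
print(f"velocity at preemption: {trace[400].vel:.3f}")
print(f"final position: {trace[-1].pos:.4f}")
```

Because every cycle replans from the current position and velocity, switching targets needs no special handling: the new command simply takes over from wherever the motion currently is.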

Paper: arxiv.org/abs/2410.23860

Turns out, as long as the hole is wide enough, a vision-only agent learns to solve the task just as well as a vision-tactile agent. However, once we make the hole tighter, we see that the vision-only agent fails to solve the task and gets stuck in a local minimum.

We built a real, fully autonomous, and self-resetting tactile insertion setup and trained a model-based RL agent directly in the real world. Using this setup, we ran extensive experiments to understand the role of vision and touch in this task.

Interested in active perception, transformers, or tactile robotics? Stop by poster 105 at RLDM this afternoon and let’s connect!

🗓️ 16:30 - 19:30

📍 Poster 105

Paper preprint: arxiv.org/pdf/2505.06182

TactileMNIST benchmark: sites.google.com/robot-learni...

Like all deep RL, TAP needs a lot of data. Next steps:

- Improve sample efficiency (think: pre-trained models)

- Apply TAP on real robots (sim2real transfer)

- Scale up to multi-finger/multi-modal (vision+touch) perception

TAP outperformed both random and prior state-of-the-art (HAM) baselines, highlighting the value of attention-based models and off-policy RL for tactile exploration.

We tested TAP on a variety of ap_gym (github.com/TimSchneider...) tasks from the TactileMNIST benchmark (sites.google.com/robot-learni...).

In all cases, TAP learns to actively explore & infer object properties efficiently.

TAP jointly learns action and prediction with a shared transformer encoder using a combination of RL and supervised learning. We show that TAP's formulation arises naturally when optimizing a supervised learning objective w.r.t. action and prediction.
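
One way to read that claim, as a hedged sketch rather than the paper's exact derivation: let θ be the predictor parameters, φ the policy parameters, τ the sequence of actions and tactile observations collected on object x, y the label, and ℓ a supervised loss. All of these symbols are assumptions introduced here for illustration.

```latex
\begin{align}
J(\theta,\phi) &= \mathbb{E}_{(x,y)\sim\mathcal{D}}\;
  \mathbb{E}_{\tau\sim p_\phi(\cdot\mid x)}
  \big[\ell\big(f_\theta(\tau),\,y\big)\big] \\
\nabla_\theta J &= \mathbb{E}\big[\nabla_\theta\,
  \ell\big(f_\theta(\tau),\,y\big)\big] \\
\nabla_\phi J &= \mathbb{E}\big[\ell\big(f_\theta(\tau),\,y\big)\,
  \nabla_\phi \log p_\phi(\tau\mid x)\big]
\end{align}
```

The second line is an ordinary supervised gradient for the predictor. The third is a score-function (policy-gradient) term in which the negative loss acts as the reward, which is where RL enters. This on-policy form is only the simplest illustration; the off-policy RL that TAP is described as using would rely on a different estimator.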

We propose TAP (Task-agnostic Active Perception) — a novel method that combines RL and transformer models for tactile exploration. Unlike previous methods, TAP is completely task-agnostic, i.e., it can learn to solve a variety of active perception problems.
