Currently obsessed with ray tracing 💡

However, it seems like NEE results in slightly brighter images. That points to a bug: both methods should converge to the same image.
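One common cause of this kind of brightness mismatch (an assumption here, not a confirmed diagnosis) is counting the lamp twice: once through the explicit light sample and again when a bounced ray happens to hit the lamp. A minimal Python sketch of the usual rule for NEE without multiple importance sampling; the function name and its parameters are hypothetical:

```python
def emission_to_add(le: float, bounce: int, prev_was_specular: bool, use_nee: bool) -> float:
    """Emitted radiance to add when a path vertex lies on a light source.

    le                -- emitted radiance of the surface that was hit
    bounce            -- 0 for the camera ray, 1 for the first bounce, ...
    prev_was_specular -- True if the previous bounce was a perfect mirror/glass event
    use_nee           -- True if direct light is sampled explicitly at every diffuse hit
    """
    if not use_nee:
        # Brute-force path tracing: emission is the only way light enters a path.
        return le
    if bounce == 0 or prev_was_specular:
        # NEE cannot cover these cases: lamps seen directly by the camera,
        # and lamps seen through a mirror or glass.
        return le
    # The previous diffuse hit already sampled this lamp explicitly.
    # Adding le again would count the same light twice and brighten the image.
    return 0.0
```

If the renderer adds `le` unconditionally while NEE is on, every diffuse surface receives its direct light roughly twice, which would show up as a brighter converged image.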

A proper denoising algorithm could speed up image generation further.

Both images finally look similar, but NEE reached this quality with far fewer samples 🙌. So those extra samples may not have been needed.

Render time: ~10–15 minutes 💻

NEE is almost fully converged, while path tracing still has plenty of grain and noise

At 10 samples, NEE is starting to clean up nicely 👌

Path tracing still struggles — lots of missed light paths and black areas

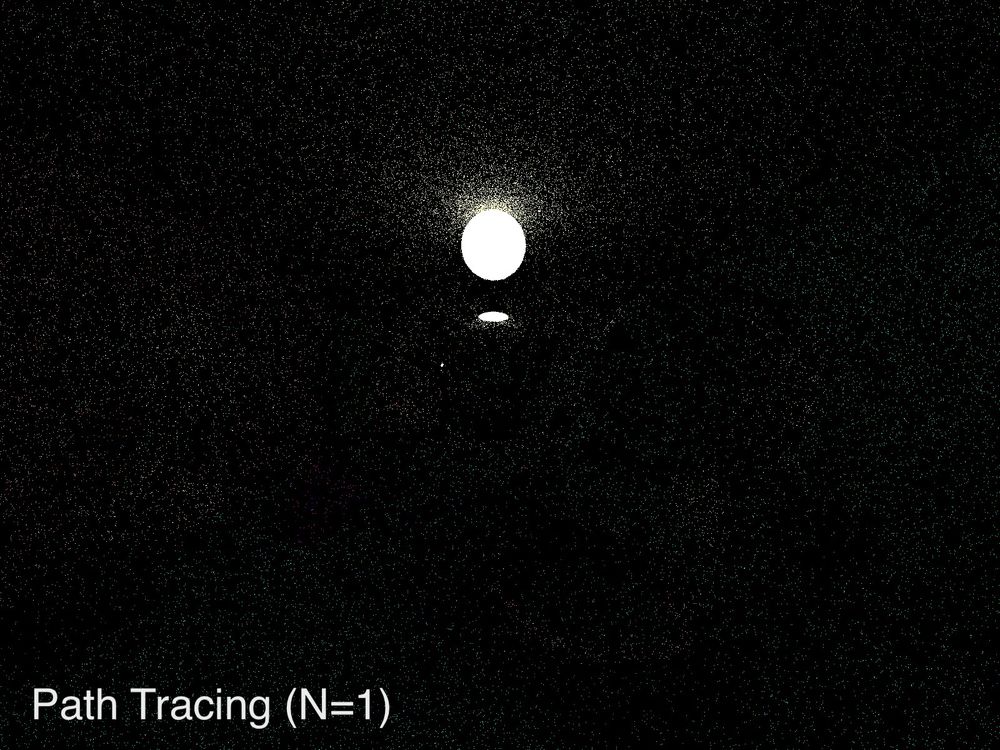

With just one sample per pixel, brute force path tracing wastes tons of rays — you can see all the black pixels 🕳️

NEE, on the other hand, already captures the main light contributions 💡 (still noisy, of course)

🎥 1 sample per frame, 30 fps

⏱️ ~1–2 minutes render time / video

Don't get dizzy...

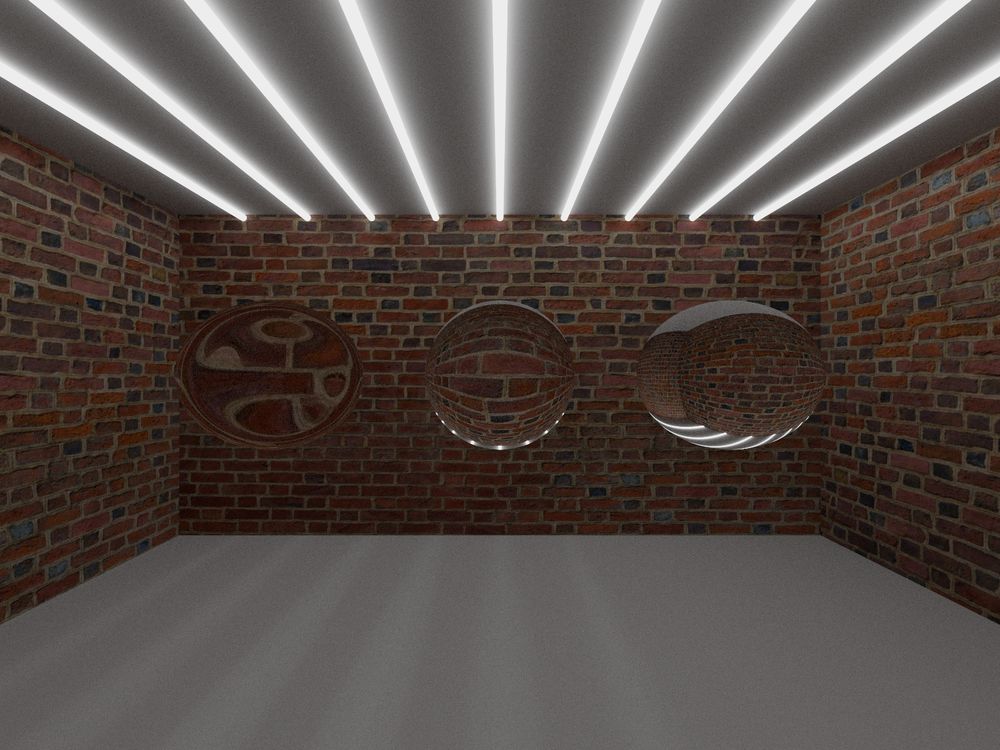

Path tracing gives the best result (check out e.g. the ceiling), but is super slow.

To optimize, I’m adding:

• Direct light sampling — directly sampling visible light sources to reduce wasted rays.

• Denoising — cleaning up noise so fewer samples are needed for a smooth result.
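To illustrate why direct light sampling wastes fewer rays, here is a toy Python sketch, not the renderer's actual code. It assumes a single diffuse floor point with a small disk-shaped lamp directly overhead and no occluders; all constants and function names are made up for the example. Both estimators target the same analytic value, but the brute-force one returns zero for about 99% of its samples.

```python
import math
import random

RHO = 0.8         # diffuse albedo of the floor point (assumed)
LE = 10.0         # emitted radiance of the lamp (assumed)
R, H = 0.1, 1.0   # lamp: disk of radius R at height H, centered above the point

# Analytic outgoing radiance for this setup, about 0.0792.
REFERENCE = RHO * LE * R * R / (R * R + H * H)


def brute_force_sample(rng: random.Random) -> float:
    """Cosine-weighted bounce; contributes only if it happens to hit the lamp."""
    u1, u2 = rng.random(), rng.random()
    s = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    x, y, z = s * math.cos(phi), s * math.sin(phi), math.sqrt(1.0 - u1)
    t = H / z  # distance along the ray to the lamp's plane (z > 0 since u1 < 1)
    hit = (x * t) ** 2 + (y * t) ** 2 <= R * R
    # BRDF * cos / pdf = (RHO / pi) * cos / (cos / pi) = RHO
    return RHO * LE if hit else 0.0


def nee_sample(rng: random.Random) -> float:
    """Pick a point on the lamp uniformly by area and connect to it directly."""
    s = R * math.sqrt(rng.random())      # distance of the sampled point from the lamp center
    d2 = s * s + H * H                   # squared distance from floor point to lamp point
    cos_surface = H / math.sqrt(d2)      # angle at the floor (normal points up)
    cos_light = cos_surface              # angle at the lamp (it faces straight down)
    pdf_area = 1.0 / (math.pi * R * R)
    return (RHO / math.pi) * LE * cos_surface * cos_light / d2 / pdf_area


def estimate(sampler, n: int, seed: int = 1) -> float:
    """Average n samples of the given estimator."""
    rng = random.Random(seed)
    return sum(sampler(rng) for _ in range(n)) / n
```

Comparing `estimate(nee_sample, 10)` and `estimate(brute_force_sample, 10)` against `REFERENCE` shows the difference: ten NEE samples already land close to the reference, while ten brute-force samples will most likely all miss the lamp and return 0.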

It simulates full global illumination: Light bounces multiple times, capturing indirect light and color bleeding.

The single-sample image is almost black because most rays never hit the lamp.

After ~15000 samples/pixel (≈3 hr), the scene is still noisy.

Introduces stochastic light sampling for reflections, refractions, and light sources.

The first image shows a single sample per pixel (noisy but fast). The second is the converged result after 2500 samples.

I have to figure out what causes the few "hot pixels"...

Adds physically based light interactions: direct light (and therefore shadows), reflections, and refractions are handled explicitly.

No randomness — everything is computed deterministically for stable, noise-free results.
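The two deterministic directions such a renderer typically needs are the mirror reflection and the Snell refraction. A minimal Python sketch with plain tuples as vectors; the helper names are illustrative and not taken from the actual renderer:

```python
import math


def dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def reflect(d, n):
    """Mirror the unit direction d about the unit surface normal n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))


def refract(d, n, eta):
    """Refract unit direction d at a surface with unit normal n (Snell's law).

    n must point against d (toward the side the ray comes from).
    eta is n1 / n2, e.g. 1.0 / 1.5 when entering glass from air.
    Returns None on total internal reflection.
    """
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None  # no transmitted ray exists; only reflection remains
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * di + (eta * cos_i - cos_t) * ni for di, ni in zip(d, n))
```

A ray hitting the surface head-on, `d = (0, -1, 0)` with `n = (0, 1, 0)`, reflects straight back and refracts without bending. A ray leaving glass at 45° (`eta = 1.5`) is totally internally reflected, so `refract` returns `None`.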

This one ignores all light transport — it just shows the raw base colors of materials.

It’s useful for debugging geometry, textures, and UV mapping before diving into lighting.

Although I have already parallelized my code, rendering each of these images still takes around 7-8 minutes, i.e. roughly 0.002 fps. :(

My next goal is to speed up my renderer significantly. I already have two promising ideas. Let's see.

But first, I might increase the fidelity of my generated images by simulating the Fresnel effect. More on that next time.
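A common way to get the Fresnel effect cheaply is Schlick's approximation. The sketch below is one possible starting point, not necessarily what the renderer will end up using; the function name and the default refractive indices (air to glass) are assumptions:

```python
def schlick_reflectance(cos_theta: float, n1: float = 1.0, n2: float = 1.5) -> float:
    """Approximate fraction of light reflected at a dielectric boundary.

    cos_theta -- cosine of the angle between the incoming ray and the normal
    n1, n2    -- refractive indices on the incoming and outgoing side
    """
    # Reflectance at normal incidence (looking straight at the surface).
    r0 = ((n1 - n2) / (n1 + n2)) ** 2
    # Reflectance rises toward 1 at grazing angles (cos_theta near 0).
    return r0 + (1.0 - r0) * (1.0 - cos_theta) ** 5
```

For air to glass this gives about 4% reflection when looking straight at the surface and close to 100% at grazing angles. Note that this simple form does not handle total internal reflection when going from the denser to the thinner medium; that case needs a separate check.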

Here, I tried to simulate a bronze, silver, and golden sphere.

You get a more "milky" glass.

Check out the light patterns on the floor.