PhD from the Technion ECE

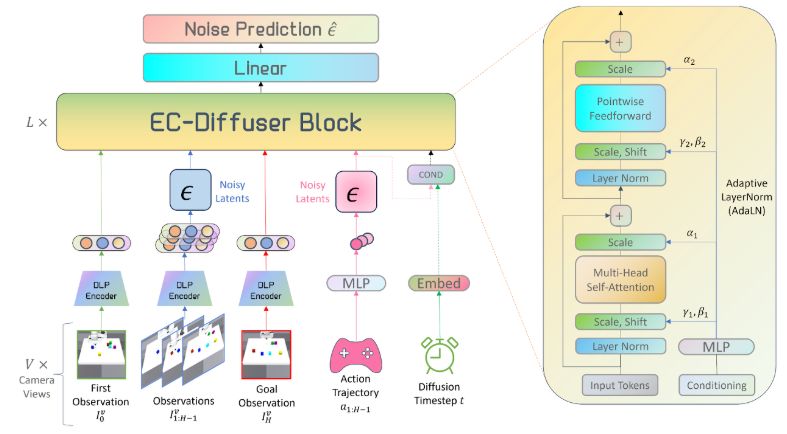

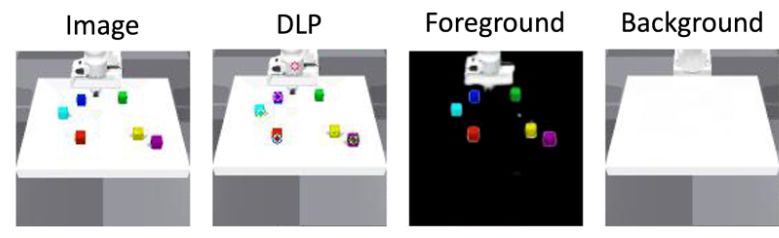

Research interests include Unsupervised Representation Learning, Generative Modeling, RL and Robotics.

https://taldatech.github.io

This is joint work with an amazing team: Carl Qi, Dan Haramati, Tal Daniel, Aviv Tamar, and Amy Zhang.

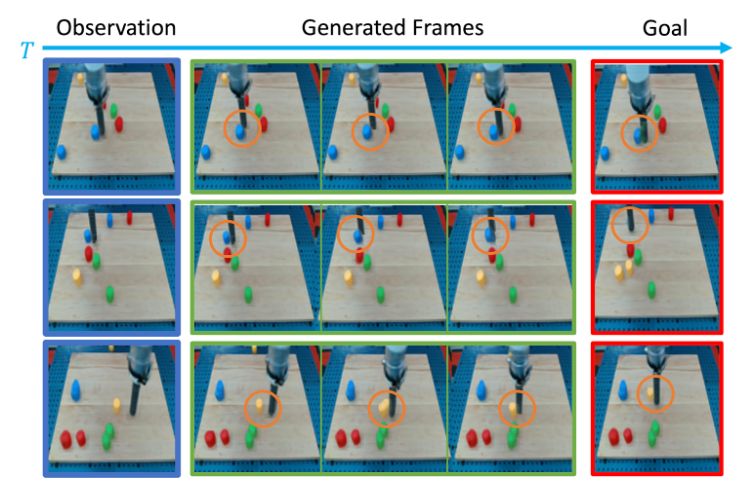

Object manipulation from pixels is challenging: high-dimensional, unstructured image data leads to a combinatorial explosion of states and goals, which makes multi-object control hard. Traditional behavioral cloning (BC) methods need massive amounts of data and compute, and still miss the diverse behaviors required.
