Kavli Institute for Brain Science

Columbia University Irving Medical Center

K99-R00 scholar @NIH @NatEyeInstitute

https://toosi.github.io/

bsky.app/profile/tahe...

We may have an answer: integration of learned priors through feedback. New paper with @kenmiller.bsky.social! 🧵

bsky.app/profile/tahe...

Also,👇🏼

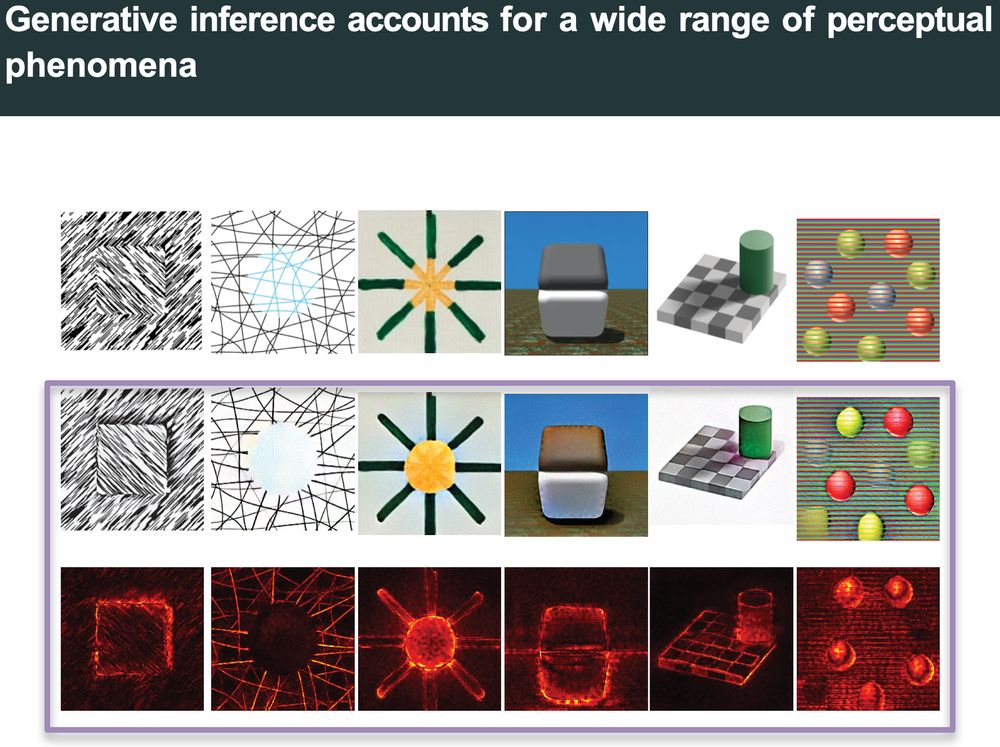

Grossberg’s BCS–FCS framework was one of the first to mechanistically model Gestalt grouping and illusory contours. Generative Inference shows that these same phenomena can emerge spontaneously from neural networks trained for object recognition; no specialized implementation is needed.

Clinical applications on the horizon! More on that soon!

Again, generative inference via PGDD accounts for all of them.

(Makes continual learning much easier!)

Our conclusion: all Gestalt perceptual grouping principles may unify under one mechanism: integration of priors into sensory processing.

Result: attentional modulation spread to other segments of the group with a delay, the same signature of prior integration we see everywhere!

What about other principles? The Roelfsema lab ran a clever monkey study: