Kavli Institute for Brain Science

Columbia University Irving Medical Center

K99-R00 scholar @NIH @NatEyeInstitute

https://toosi.github.io/

Clinical applications on the horizon! More on that soon!

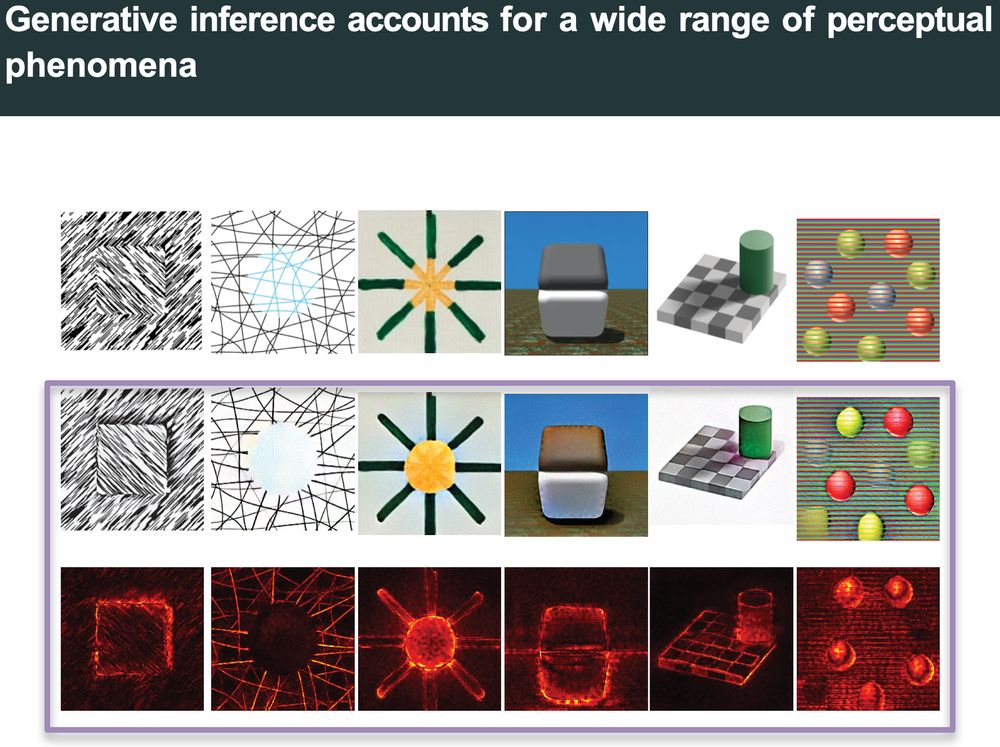

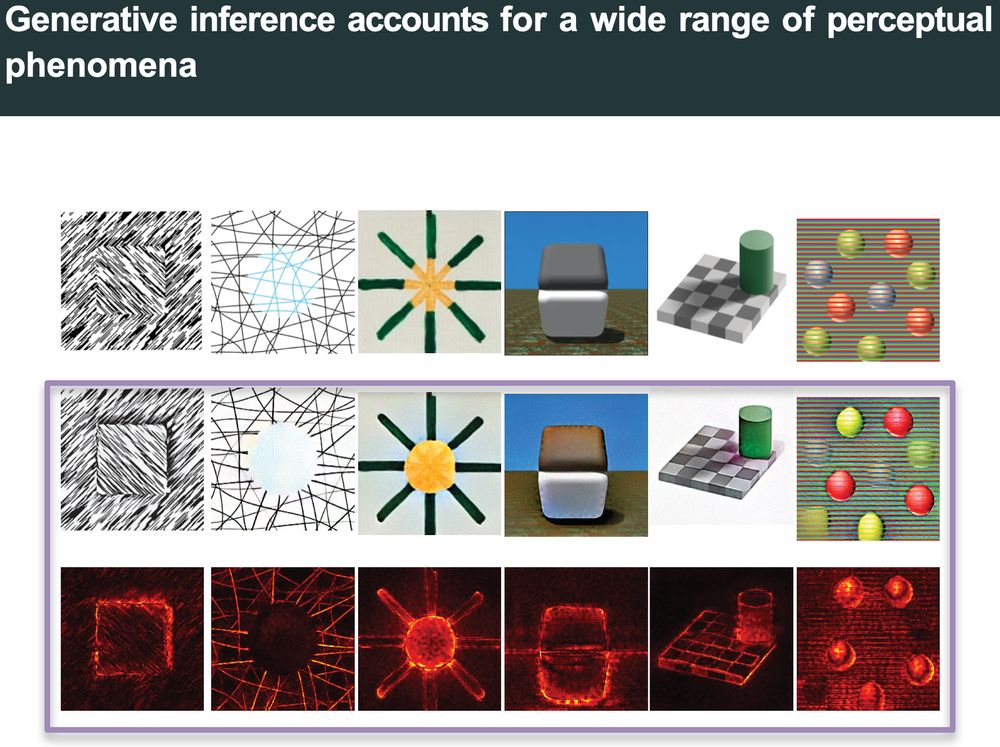

Again, generative inference via PGDD accounts for all of them.

Our conclusion: all Gestalt perceptual grouping principles may unify under one mechanism: integration of priors into sensory processing.

Result: attentional modulation spread to other group segments with a delay—the same signature of prior integration we see everywhere!

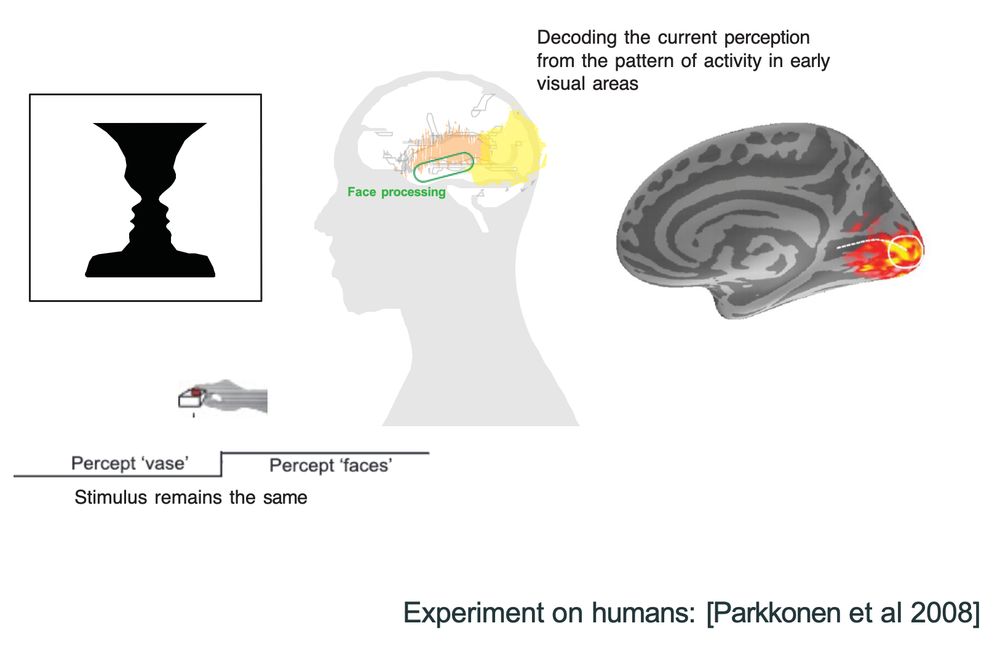

Running generative inference: the object network creates vase patterns, the face network creates facial features!

Here are actual ImageNet samples of both classes. The network found its closest meaningful interpretation!

First time I ran this—I couldn't believe my eyes!

The algorithm iteratively updates activations using gradients (errors) of this objective.
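
To make the idea of "updating activations with gradients of an objective" concrete, here is a toy sketch. It is not the PGDD algorithm from the paper, and the thread does not spell out the objective; the sketch assumes a tiny linear-softmax classifier (the weights `W`, the function `refine_activations`, and the log-confidence objective are all hypothetical choices for illustration) and runs gradient ascent on the input activations rather than on the weights.

```python
import math

def softmax(z):
    # Numerically stable softmax over a list of logits.
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def logits(W, x):
    # Linear readout: one logit per row of W.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def refine_activations(W, x, target, steps=50, lr=0.5):
    """Gradient ascent on activations x (weights W stay fixed).

    Assumed objective (illustrative only): log p(target | x).
    Its gradient w.r.t. x is W[target] - sum_k p[k] * W[k].
    """
    x = list(x)
    history = []
    for _ in range(steps):
        p = softmax(logits(W, x))
        history.append(p[target])
        grad = [
            W[target][j] - sum(p[k] * W[k][j] for k in range(len(W)))
            for j in range(len(x))
        ]
        # Update the activations, not the weights.
        x = [xi + lr * g for xi, g in zip(x, grad)]
    history.append(softmax(logits(W, x))[target])
    return x, history

# Hypothetical two-class model and an ambiguous (all-zero) input.
W = [[1.0, -1.0, 0.5], [-0.5, 1.0, 1.0]]
x0 = [0.0, 0.0, 0.0]
x_final, confidence = refine_activations(W, x0, target=0)
```

In this toy setting the confidence for the chosen class starts at chance and climbs as the activations are nudged along the gradient, which is the general flavor of iterative, feedback-driven refinement described in the thread.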

Conventional inference → low-confidence output. Makes sense, it was never trained on Kanizsa stimuli. But what if we use its (intrinsic learning) feedback (aka the backpropagation graph)?

Even Gestalt principles (grouping by similarity, continuation, etc.) exhibit identical neural signatures of delayed, feedback-dependent processing.

This response is causally dependent on feedback (not local recurrence).

We may have an answer: integration of learned priors through feedback. New paper with @kenmiller.bsky.social! 🧵
