But FIDO2 is a pretty solid improvement over (and extension of) FIDO. TIL.

Finally, I can max out on downloading these bulky models from HF and the Ollama store. 😅

I'm now considering creating new keys (esp. for RSA/4096 & ed25519) for a new #Yubikey 5.

How is everybody else going about this?

In 315 lines of code. And yes, it works. Very well.

There is no moat.

Read it here: ampcode.com/how-to-build...

A good reminder that being nice, forgiving, and clear while still being retaliatory (provocable) is a pretty good strategy in the majority of cases.

www.youtube.com/watch?v=mScp...
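
Nice, forgiving, retaliatory, and clear are the traits Axelrod attributed to tit-for-tat in his iterated prisoner's dilemma tournaments. Here is a minimal sketch of that game, assuming the standard payoffs (temptation 5, reward 3, punishment 1, sucker 0). The strategy functions and the 10-round default are illustrative choices, not taken from the linked video.

```python
from typing import Callable, List, Tuple

# Assumed standard payoffs: (my_move, their_move) -> (my_score, their_score).
# "C" = cooperate, "D" = defect.
PAYOFFS = {
    ("C", "C"): (3, 3),  # mutual cooperation (reward)
    ("C", "D"): (0, 5),  # I get exploited (sucker vs. temptation)
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),  # mutual defection (punishment)
}

# A strategy sees the opponent's past moves and returns its next move.
Strategy = Callable[[List[str]], str]


def tit_for_tat(opponent_history: List[str]) -> str:
    # Nice: cooperate on the first move.
    # Retaliatory and forgiving: afterwards, simply mirror the opponent's last move.
    return opponent_history[-1] if opponent_history else "C"


def always_defect(opponent_history: List[str]) -> str:
    return "D"


def always_cooperate(opponent_history: List[str]) -> str:
    return "C"


def play(a: Strategy, b: Strategy, rounds: int = 10) -> Tuple[int, int]:
    """Play an iterated game and return the total scores of (a, b)."""
    a_moves: List[str] = []
    b_moves: List[str] = []
    a_score = b_score = 0
    for _ in range(rounds):
        move_a = a(b_moves)  # each strategy only sees the other's history
        move_b = b(a_moves)
        pa, pb = PAYOFFS[(move_a, move_b)]
        a_score += pa
        b_score += pb
        a_moves.append(move_a)
        b_moves.append(move_b)
    return a_score, b_score


if __name__ == "__main__":
    print(play(tit_for_tat, always_defect))  # (9, 14)
    print(play(tit_for_tat, tit_for_tat))    # (30, 30)
```

Tit-for-tat never beats its opponent head-to-head (it loses 9 to 14 against always-defect here). It still does well on aggregate because it collects the full cooperation payoff whenever its partner is willing to cooperate.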

I built this simple site to complement Google DeepMind's Gemini SDK with focused, practical code examples - inspired by the "Go By Example" approach.

geminibyexample.com

The only thing that could have made this even better for me would be direct curl examples, but maybe I'm asking too much. 😅
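
In place of a curl example, here is the raw REST call underneath the SDK, sketched with Python's standard library. It assumes the public `generateContent` REST endpoint, the `x-goog-api-key` header, and the model name `gemini-2.0-flash`. All three are assumptions to check against the current Gemini API documentation.

```python
import json
import os
import urllib.request

# Assumed endpoint and model name; verify against the current Gemini API docs.
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"
MODEL = "gemini-2.0-flash"


def build_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Build the same POST a curl example would send to generateContent."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{BASE_URL}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,  # API key sent as a header, not in the URL
        },
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Concatenate the text parts of the first candidate in the response."""
    parts = response_json["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")
    if key:  # only hit the network when a key is configured
        req = build_request("Explain FIDO2 in one sentence.", key)
        with urllib.request.urlopen(req) as resp:
            print(extract_text(json.load(resp)))
```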

Along those lines, I remember olmOCR from @ai2.bsky.social, released just a few weeks ago and based on Qwen VL models.

Curious whether anybody is working on an olmOCR pipeline with Gemma 3 models.

www.youtube.com/watch?v=P_fH...

Use DeepSeek to generate high-quality training data, then distil that knowledge into ModernBERT for fast, efficient classification.

New blog post: danielvanstrien.xyz/posts/2025/d...

Really neat stuff! One can easily replace a slower, expensive third-party LLM router with a fast, cheap, local model.
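
Here is a minimal, offline sketch of the teacher/student idea: a large model labels raw queries, a small model learns those labels, and the small model then does the routing. Several stand-ins are assumed. `teacher_label` is a hypothetical keyword stub in place of the DeepSeek labelling call. A multinomial naive Bayes classifier replaces fine-tuning ModernBERT; this is a deliberately swapped-in technique so the example runs without a model download. `UNLABELED` and the `ROUTES` table are hypothetical as well.

```python
import math
import re
from collections import Counter, defaultdict
from typing import DefaultDict, Dict, List, Set, Tuple


def teacher_label(query: str) -> str:
    """HYPOTHETICAL stand-in for the teacher LLM (e.g. DeepSeek) labelling a query.

    A real pipeline would prompt the LLM for a label; this keyword stub only
    keeps the sketch offline and deterministic.
    """
    code_words = ("python", "bug", "function", "compile", "regex")
    return "code" if any(w in query.lower() for w in code_words) else "chat"


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class NaiveBayesStudent:
    """Small multinomial naive Bayes classifier standing in for a fine-tuned ModernBERT."""

    def __init__(self) -> None:
        self.class_counts: Counter = Counter()
        self.word_counts: DefaultDict[str, Counter] = defaultdict(Counter)
        self.vocab: Set[str] = set()

    def fit(self, examples: List[Tuple[str, str]]) -> "NaiveBayesStudent":
        for text, label in examples:
            self.class_counts[label] += 1
            for tok in tokenize(text):
                self.word_counts[label][tok] += 1
                self.vocab.add(tok)
        return self

    def predict(self, text: str) -> str:
        total = sum(self.class_counts.values())
        best_label, best_score = "", -math.inf
        for label, count in self.class_counts.items():
            # Log prior plus Laplace-smoothed log likelihood of every token.
            score = math.log(count / total)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for tok in tokenize(text):
                score += math.log((self.word_counts[label][tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# HYPOTHETICAL unlabeled queries and label -> local model routing table.
UNLABELED = [
    "Why does my Python function return None?",
    "Fix this regex bug please",
    "How do I compile this C file?",
    "Tell me a joke about cats",
    "What should I cook tonight?",
    "Recommend a good sci-fi book",
]
ROUTES: Dict[str, str] = {"code": "qwen2.5-coder", "chat": "phi4"}


def build_router() -> NaiveBayesStudent:
    # Step 1: the teacher labels raw queries (synthetic training data).
    training = [(q, teacher_label(q)) for q in UNLABELED]
    # Step 2: the cheap student learns to reproduce the teacher's labels.
    return NaiveBayesStudent().fit(training)


if __name__ == "__main__":
    router = build_router()
    # Step 3: route new queries locally, without calling the teacher again.
    print(ROUTES[router.predict("My python script has a bug")])
```

The teacher is only paid for once, at data-generation time. After that, every routing decision is a local, cheap prediction.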

Follow along: github.com/huggingface/...

Start off with `deepseek-r1`, then follow up the conversation with something like `phi4` or `qwen2.5-coder`.
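
Switching models mid-conversation works because Ollama's `/api/chat` endpoint is stateless: each request carries the full message history, so any model can pick up where the previous one stopped. The sketch below assumes a local Ollama server on its default port with both models already pulled. `ask` and `demo` are hypothetical helper names, and the prompts are only illustrative.

```python
import json
import re
import urllib.request
from typing import Callable, Dict, List

# Assumes a local Ollama server on its default port (11434).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"

Message = Dict[str, str]


def strip_think(text: str) -> str:
    """Remove a <think>...</think> reasoning block if the reply contains one."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def chat(model: str, messages: List[Message]) -> str:
    """POST the full conversation to Ollama's /api/chat and return the reply text."""
    payload = {"model": model, "messages": messages, "stream": False}
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["message"]["content"]


def ask(
    history: List[Message],
    model: str,
    prompt: str,
    send: Callable[[str, List[Message]], str] = chat,
) -> str:
    """Add a user turn, query `model` with the whole history, store the cleaned answer."""
    history.append({"role": "user", "content": prompt})
    answer = strip_think(send(model, history))
    history.append({"role": "assistant", "content": answer})
    return answer


def demo() -> None:
    """Needs `ollama serve` running with both models already pulled."""
    history: List[Message] = []
    # Let the reasoning model work through the design first...
    ask(history, "deepseek-r1", "Design a simple rate limiter for a REST API.")
    # ...then hand the same history to a coding model for the follow-up.
    print(ask(history, "qwen2.5-coder", "Now implement that design in Python."))
```

Stripping the reasoning block is a design choice: the follow-up model sees only `deepseek-r1`'s final answer, not its scratch work. Whether a `<think>` block appears in `content` at all may depend on the Ollama version and settings.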

Most of the simple examples I've seen share the memory across all steps, but this doesn't seem "clean". It reminds me of workflow steps all reading from and writing to the same blob storage.
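
One alternative to a single shared scratchpad is per-step scoped memory. Each step declares which keys it reads and writes, and the runner hands it only a read-only view of those keys. Any write outside its declared keys is rejected. Here is a minimal sketch of that idea; `Step`, `run`, and the two example steps are hypothetical names, not taken from any agent framework.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import Any, Callable, Dict, List, Mapping, Tuple

StepFn = Callable[[Mapping[str, Any]], Dict[str, Any]]


@dataclass(frozen=True)
class Step:
    name: str
    reads: Tuple[str, ...]   # keys this step is allowed to see
    writes: Tuple[str, ...]  # keys this step is allowed to produce
    fn: StepFn


def run(steps: List[Step], initial: Dict[str, Any]) -> Dict[str, Any]:
    """Run steps in order, giving each only a read-only view of its declared inputs."""
    state: Dict[str, Any] = dict(initial)
    for step in steps:
        missing = [k for k in step.reads if k not in state]
        if missing:
            raise KeyError(f"{step.name} needs missing keys: {missing}")
        # MappingProxyType makes the view immutable, so steps cannot mutate it.
        view = MappingProxyType({k: state[k] for k in step.reads})
        outputs = step.fn(view)
        extra = set(outputs) - set(step.writes)
        if extra:
            raise PermissionError(f"{step.name} wrote undeclared keys: {sorted(extra)}")
        state.update(outputs)
    return state


# HYPOTHETICAL example steps: "answer" never sees the original question.
def plan_step(mem: Mapping[str, Any]) -> Dict[str, Any]:
    return {"plan": f"look up: {mem['question']}"}


def answer_step(mem: Mapping[str, Any]) -> Dict[str, Any]:
    return {"answer": mem["plan"].upper()}


STEPS = [
    Step("plan", reads=("question",), writes=("plan",), fn=plan_step),
    Step("answer", reads=("plan",), writes=("answer",), fn=answer_step),
]

if __name__ == "__main__":
    print(run(STEPS, {"question": "fido2"})["answer"])
```

The declared `reads`/`writes` also document the data flow between steps, which a shared blob hides.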

It's reassuring to read the recent @anthropic.com blog...

That being said, I still haven't "Signed In" to Zed! Not sure I'll do that anytime soon, but what am I missing out on?

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster, more accurate, longer context, and more useful. 🧵

Any follow-up recommendations?

www.microsoft.com/en-us/resear...

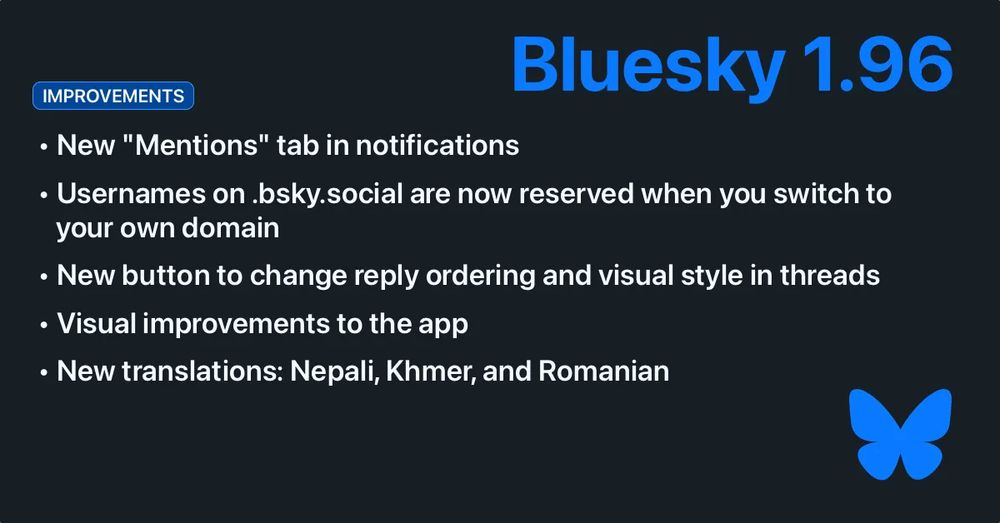

And yes, I've heard about starter packs and automated feeds but haven't quite used them yet. What else have I missed?