You can also find me on Threads: @sung.kim.mw

FlashMoBA is a memory-efficient sparse attention mechanism designed to accelerate the training and inference of long-sequence models. It achieves up to a 14.7x speedup over FlashAttention-2 for small blocks.
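
FlashMoBA's name points to Mixture of Block Attention (MoBA). As a rough illustration of that computation pattern, and not of FlashMoBA's fused GPU kernel, here is a minimal NumPy sketch under simplifying assumptions: keys are split into fixed-size blocks, each block is summarized by its mean key, each query keeps only its `top_k` highest-scoring blocks, and causal masking is omitted. The function names are hypothetical.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Numerically stable softmax; -inf entries become exactly 0.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def block_sparse_attention(q, k, v, block_size: int, top_k: int):
    """Each query attends only to its top_k key blocks (non-causal sketch).

    q: (n_q, d); k, v: (n_kv, d); n_kv must be a multiple of block_size.
    """
    n_kv, d = k.shape
    n_blocks = n_kv // block_size
    assert n_blocks * block_size == n_kv, "n_kv must be a multiple of block_size"
    # Summarize each key block by its mean key (used only for routing).
    centroids = k.reshape(n_blocks, block_size, d).mean(axis=1)  # (n_blocks, d)
    # Router: query-centroid affinity, keep the top_k blocks per query.
    router = q @ centroids.T  # (n_q, n_blocks)
    chosen = np.argsort(-router, axis=1)[:, :top_k]  # (n_q, top_k)
    block_mask = np.zeros((q.shape[0], n_blocks), dtype=bool)
    np.put_along_axis(block_mask, chosen, True, axis=1)
    key_mask = np.repeat(block_mask, block_size, axis=1)  # (n_q, n_kv)
    # Scaled dot-product attention restricted to keys in the chosen blocks.
    scores = (q @ k.T) / np.sqrt(d)
    scores = np.where(key_mask, scores, -np.inf)
    return softmax(scores, axis=-1) @ v
```

For readability the sketch still computes the full `q @ k.T` and then masks it; a fused kernel gets its speed by never touching the unselected blocks. With `top_k` equal to the number of blocks, it reduces to ordinary dense attention.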

(Comment — based on the number of authors on this paper, it must be a big deal.)

All the benefits of wider representations without incurring the quadratic cost of increasing the hidden size.
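
The "quadratic cost" comes from dense weight matrices: a d×d projection has d² entries, so doubling the hidden size roughly quadruples each layer's parameters and per-token FLOPs. A back-of-the-envelope sketch, assuming a standard transformer block (Q, K, V, O projections plus a two-layer MLP with 4x expansion) and ignoring biases, norms, and embeddings:

```python
def block_params(d_model: int, mlp_ratio: int = 4) -> int:
    """Approximate weight count of one standard transformer block.

    Assumes four d x d attention projections (Q, K, V, O) and an MLP with
    hidden width mlp_ratio * d. Biases and normalization layers are ignored.
    """
    attn = 4 * d_model * d_model
    mlp = 2 * d_model * (mlp_ratio * d_model)
    return attn + mlp

for d in (1024, 2048, 4096):
    # Each doubling of d multiplies the per-block weight count by 4.
    print(f"d_model={d}: ~{block_params(d) / 1e6:.1f}M weights per block")
```

With the default 4x MLP this is 12·d², which gives roughly 12.6M, 50.3M, and 201.3M weights per block for the three widths.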

"Normally, you can only work on one git branch at a time in a folder. Want to fix a bug while working on a feature? You have to stash changes, switch branches, then switch back. Git worktrees let you have multiple branches checked out at once in different folders."

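A minimal sketch of that workflow, using the standard `git worktree add` and `git worktree list --porcelain` commands driven from Python's `subprocess` so it runs end to end in a throwaway repository. It assumes `git` is installed and on your PATH; the folder names and the `hotfix` branch are hypothetical.

```python
import subprocess
import tempfile
from pathlib import Path

def git(*args: str, cwd: Path) -> str:
    # Run a git command and return its stdout; raises CalledProcessError on failure.
    result = subprocess.run(["git", *args], cwd=cwd, check=True,
                            capture_output=True, text=True)
    return result.stdout

def demo_worktree(root: Path) -> list[str]:
    main = root / "main"
    main.mkdir()
    git("init", "-q", cwd=main)
    # A worktree needs at least one commit to branch from.
    git("-c", "user.name=demo", "-c", "user.email=demo@example.com",
        "commit", "-q", "--allow-empty", "-m", "initial", cwd=main)
    # Check out a new "hotfix" branch in a sibling folder; main's checkout is untouched.
    git("worktree", "add", "-b", "hotfix", str(root / "hotfix"), cwd=main)
    # --porcelain prints one "worktree <path>" line per checked-out working tree.
    listing = git("worktree", "list", "--porcelain", cwd=main)
    return [line.split(" ", 1)[1] for line in listing.splitlines()
            if line.startswith("worktree ")]

with tempfile.TemporaryDirectory() as tmp:
    for path in demo_worktree(Path(tmp)):
        print(path)
```

Day to day, you would run the same two commands directly in your shell and clean up with `git worktree remove <path>` once the branch is done.
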
Model: huggingface.co/stable-ai/Li...

Their attempt to develop a model to outperform gradient-boosting trees on tabular data.

Swap arxiv → quickarxiv on any paper URL to get an instant blog with figures, insights, and explanations.
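
The swap, applied literally as a small Python helper. Assumption: only the host name changes (so `arxiv.org` becomes `quickarxiv.org`) and the rest of the URL is kept; whether every arXiv path (for example `/pdf/`) is supported by the service has not been checked. The function name is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

def to_quickarxiv(url: str) -> str:
    """Swap 'arxiv' for 'quickarxiv' in the host of an arXiv paper URL."""
    if "://" not in url:
        url = "https://" + url  # accept bare links like "arxiv.org/abs/..."
    parts = urlsplit(url)
    host = parts.netloc
    if host not in ("arxiv.org", "www.arxiv.org"):
        raise ValueError(f"not an arxiv.org URL: {url}")
    # Only the host changes; the paper path (e.g. /abs/<id>) is kept as-is.
    return urlunsplit(parts._replace(netloc=host.replace("arxiv", "quickarxiv", 1)))

print(to_quickarxiv("arxiv.org/abs/2511.10647"))  # https://quickarxiv.org/abs/2511.10647
```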

Model Cookbook: github.com/hcompai/hai-...

Model: huggingface.co/collections/...

Built on Qwen3-VL, it provides SOTA performance: 66.1% (+3%) on ScreenSpot-Pro and 76.1% (+5%) on OSWorld-G.

A platform that maintains a continuously updated, structured wiki for code repositories. I'm not sure how this differs from Cognition AI's DeepWiki (deepwiki.com), but we'll find out soon enough.

developers.googleblog.com/en/introduci...

Project: depth-anything-3.github.io

Paper: arxiv.org/abs/2511.10647

Code: github.com/ByteDance-Se...

Hugging Face demo: huggingface.co/spaces/depth...

DA3 extends monocular depth estimation to any-view scenarios, including single images, multi-view images, and video. They find that:

- A plain transformer (e.g., vanilla DINO) is enough. No specialized architecture.

Is it just me, or does it basically look like CSV?

A full Terminal UI (TUI) for live, interactive W&B monitoring right in your terminal.

wandb.ai/wandb_fc/pro...

developers.googleblog.com/en/google-co...

🚀 Performance: Highly competitive on AIME24/25 & HMMT25 — surpasses DeepSeek R1-0120 on math, and outperforms same-size models in competitive coding.

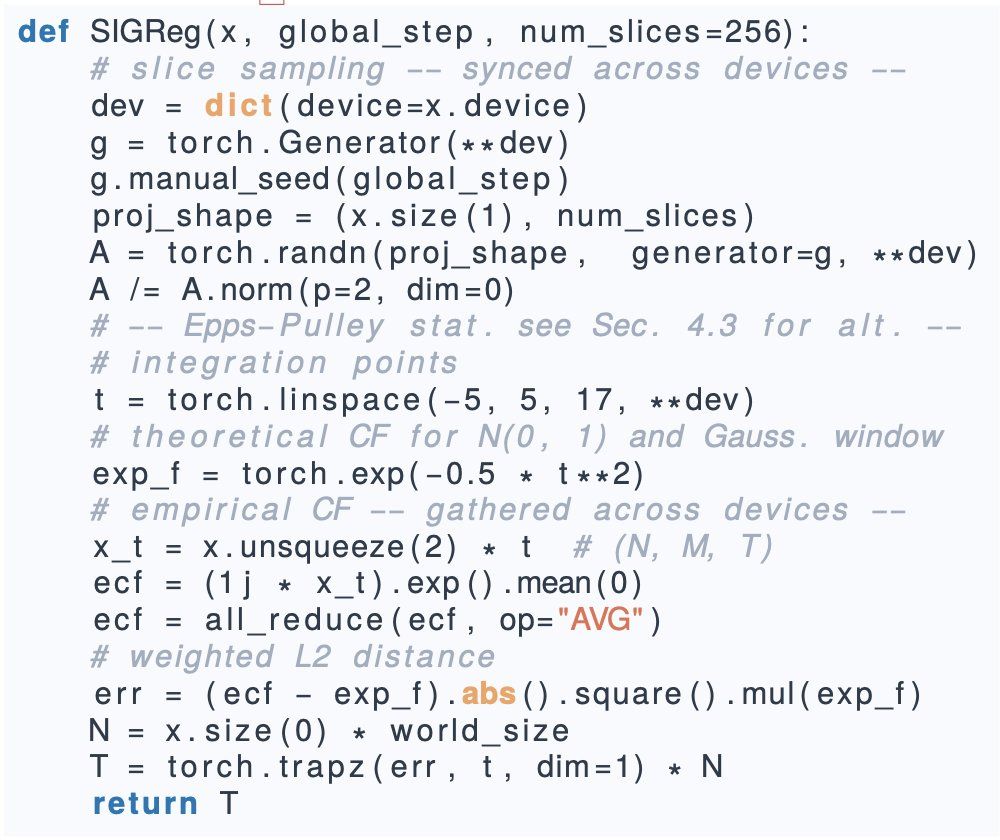

- what distribution to impose on your embeddings

- how to do distribution matching in high-dim

Paper: arxiv.org/abs/2511.08544

Code: github.com/rbalestr-lab...
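
For intuition on the second point: one generic way to match a distribution in high dimensions is to project the embeddings onto many random 1D directions and compare each projection with the 1D target, which stays cheap however large the dimension is. The sketch below is an illustrative assumption, not necessarily the paper's method: it takes an isotropic standard Gaussian as the target (any unit projection of such embeddings is N(0, 1)) and scores each direction by the mean squared gap between the sorted projections and the Gaussian quantiles. The function name is hypothetical.

```python
import numpy as np
from statistics import NormalDist

def sliced_gaussian_gap(emb: np.ndarray, n_dirs: int = 64, seed: int = 0) -> float:
    """Average 1D discrepancy between projected embeddings and N(0, 1).

    emb: (n_samples, dim). Sorting each projection and comparing it with
    standard-normal quantiles gives a squared 1D Wasserstein-2 estimate.
    """
    n, dim = emb.shape
    rng = np.random.default_rng(seed)
    dirs = rng.standard_normal((dim, n_dirs))
    dirs /= np.linalg.norm(dirs, axis=0, keepdims=True)  # unit-norm directions
    proj = np.sort(emb @ dirs, axis=0)  # (n, n_dirs), each column sorted
    # Target: N(0, 1) quantiles at the midpoints (i + 0.5) / n.
    inv_cdf = NormalDist().inv_cdf
    target = np.array([inv_cdf((i + 0.5) / n) for i in range(n)])
    return float(np.mean((proj - target[:, None]) ** 2))
```

Well-spread Gaussian embeddings score near zero, while shifted or collapsed embeddings (every sample identical) score much higher, so the gap can serve as a regularizer against collapse.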

- 60+ arch., up to 2B params

- 10+ datasets

- in-domain training (>DINOv3)

- corr(train loss, test perf)=95%

ernie.baidu.com

Currently, we have an unhealthy ‘upright pyramid’ AI industry structure:

- Application Layer

- Model Layer

- Chip Layer

They are shifting to a healthy AI industry structure, which is an ‘inverted pyramid’:

- Application Layer

- Model Layer

- Chip Layer

The paper formalizes a Bayesian framework for model control: altering a model's "beliefs" about which persona or data source it is emulating, either through context (prompting) or through internal representations (steering).
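
A toy illustration of the Bayesian view, under simplifying assumptions of our own rather than the paper's formalism: the model holds a belief over a few personas; each in-context example updates it by Bayes' rule, and "steering" is modeled as an additive shift to the log-prior. The function name and all numbers are hypothetical.

```python
from typing import Optional

import numpy as np

def posterior(log_prior: np.ndarray, log_liks: np.ndarray,
              steer: Optional[np.ndarray] = None) -> np.ndarray:
    """Belief over personas after in-context examples (and optional steering).

    log_prior: (n_personas,) log p(persona).
    log_liks: (n_examples, n_personas) log p(example | persona).
    steer: optional (n_personas,) additive shift to the log-prior (toy steering).
    """
    # Bayes' rule in log space, treating examples as independent given the persona.
    logits = log_prior + log_liks.sum(axis=0)
    if steer is not None:
        logits = logits + steer
    logits = logits - logits.max()  # stabilize before exponentiating
    p = np.exp(logits)
    return p / p.sum()
```

With a 90/10 prior and context examples that are each three times likelier under the second persona, two examples bring the belief to 50/50; a steering shift of log 9 toward the second persona reaches the same belief with no examples at all.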

Here are a few optimizations they made:

- MuP-like scaling

- MQA + SWA

- Clamping everywhere to keep activations under control

- KV Cache sharing
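
MQA + SWA and a clamp fit in a short NumPy sketch: multi-query attention (every query head shares a single key/value head, which shrinks the KV cache) with a causal sliding-window mask. The window size, the clamp bound, and clamping the attention logits specifically are hypothetical choices for illustration, not the authors' settings; μP-style scaling and KV cache sharing are not shown.

```python
import numpy as np

def sliding_window_mask(n: int, window: int) -> np.ndarray:
    # True where query i may attend to key j: causal and within the last `window` positions.
    i = np.arange(n)[:, None]
    j = np.arange(n)[None, :]
    return (j <= i) & (i - j < window)

def mqa_swa(x, wq, wk, wv, n_heads: int, window: int, clamp: float = 50.0):
    """Multi-query attention with a sliding-window mask (one sequence, no batching).

    x: (n, d_model); wq: (d_model, n_heads * d_head); wk, wv: (d_model, d_head).
    All query heads share one K/V head, so a KV cache stores one key and one
    value vector of size d_head per token instead of n_heads of each.
    """
    n = x.shape[0]
    d_head = wk.shape[1]
    q = (x @ wq).reshape(n, n_heads, d_head).transpose(1, 0, 2)  # (h, n, d_head)
    k = x @ wk  # (n, d_head), shared by every head
    v = x @ wv  # (n, d_head), shared by every head
    scores = q @ k.T / np.sqrt(d_head)  # (h, n, n)
    scores = np.clip(scores, -clamp, clamp)  # toy clamp keeping logits bounded
    scores = np.where(sliding_window_mask(n, window), scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    probs = np.exp(scores)
    probs /= probs.sum(axis=-1, keepdims=True)
    out = probs @ v  # (h, n, d_head)
    return out.transpose(1, 0, 2).reshape(n, n_heads * d_head)
```

Because of the window, each output position depends only on the last `window` tokens, so the KV cache for a layer like this never needs more than `window` entries.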
