You can also find me on Threads: @sung.kim.mw

careers.qualcomm.com/careers?quer...

💰 Cost: Full post-training for just $7.8K — 30-60× cheaper than DeepSeek R1 or MiniMax-M1.

Model: huggingface.co/WeiboAI/Vibe...

GitHub: github.com/WeiboAI/Vibe...

arXiv: arxiv.org/abs/2511.06221

2️⃣ Ensure forward passes in training use the same kernels as inference

3️⃣ Add custom backward passes in PyTorch

blog.vllm.ai/2025/11/10/b...
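
Why item 2️⃣ matters: floating-point addition is not associative, so two kernels that reduce the same numbers in a different order can return different bits. The sketch below is a minimal pure-Python illustration of that effect, not code from vLLM or PyTorch; `reduce_sequential`, `reduce_chunked`, the chunk size, and the `*_forward` helpers are hypothetical stand-ins for two kernel reduction strategies.

```python
# Hypothetical illustration: two "kernels" that sum the same values in a
# different order. Floating-point addition is not associative, so results
# can differ, which is why training and inference should share kernels.

def reduce_sequential(xs):
    """Left-to-right sum: one fixed reduction order."""
    total = 0.0
    for x in xs:
        total += x
    return total


def reduce_chunked(xs, chunk):
    """Split reduction: sum fixed-size chunks, then sum the partial results.

    The addition order depends on `chunk`, so low-order bits can differ
    from reduce_sequential on the same input.
    """
    partials = [reduce_sequential(xs[i:i + chunk]) for i in range(0, len(xs), chunk)]
    return reduce_sequential(partials)


def inference_forward(xs):
    # Hypothetical setup: the inference engine uses the chunked kernel.
    return reduce_chunked(xs, chunk=2)


def training_forward_mismatched(xs):
    # Different kernel from inference: may disagree bit-for-bit.
    return reduce_sequential(xs)


def training_forward_matched(xs):
    # Same kernel and same chunking as inference: bitwise identical.
    return inference_forward(xs)


if __name__ == "__main__":
    xs = [1e16, 1.0, -1e16, 1.0]
    # prints: 0.0 1.0 0.0
    print(inference_forward(xs), training_forward_mismatched(xs), training_forward_matched(xs))
```

On GPUs the same thing can happen when a kernel's reduction order changes with batch size or tiling; routing both the training forward pass and inference through one kernel removes that source of mismatch.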

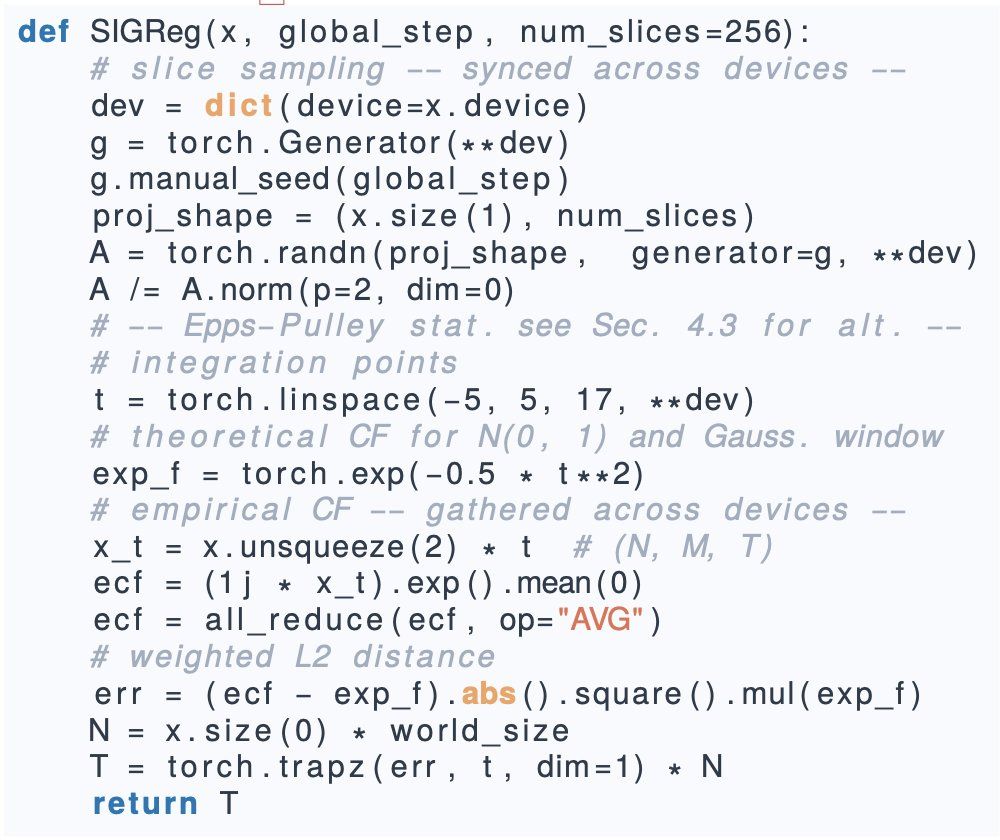

- what distribution to impose on your embeddings

- how to do distribution matching in high-dim

Paper: arxiv.org/abs/2511.08544

Code: github.com/rbalestr-lab...
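
One generic way to do distribution matching in high dimensions is to project the embeddings onto random 1D directions and compare each projection with a target univariate distribution. The sketch below assumes an isotropic Gaussian target N(0, I) and swaps in a simple mean/variance penalty per projection; it is a minimal illustration, not the statistical test used in the linked paper or repository, and `sliced_moment_loss` is a hypothetical name.

```python
import math
import random


def random_unit_vector(dim, rng):
    """Draw a direction uniformly on the unit sphere (normalized Gaussian vector)."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def sliced_moment_loss(embeddings, num_slices=16, seed=0):
    """Hypothetical sliced penalty pushing embeddings toward N(0, I).

    If embeddings ~ N(0, I), every 1D projection onto a unit vector is N(0, 1),
    so each projection should have mean 0 and variance 1.
    """
    rng = random.Random(seed)
    dim = len(embeddings[0])
    n = len(embeddings)
    total = 0.0
    for _ in range(num_slices):
        u = random_unit_vector(dim, rng)
        proj = [sum(a * b for a, b in zip(e, u)) for e in embeddings]
        mean = sum(proj) / n
        var = sum((p - mean) ** 2 for p in proj) / n
        # Penalize deviation from the N(0, 1) mean and variance.
        total += mean ** 2 + (var - 1.0) ** 2
    return total / num_slices


if __name__ == "__main__":
    rng = random.Random(42)
    dim, n = 8, 500
    gaussian = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n)]
    stretched = [[3.0 * x for x in row] for row in gaussian]
    collapsed = [[0.0] * dim for _ in range(n)]
    for name, emb in [("gaussian", gaussian), ("stretched", stretched), ("collapsed", collapsed)]:
        print(name, round(sliced_moment_loss(emb), 4))
```

Collapsed embeddings score exactly 1.0 here because every projection has zero variance. Matching only two moments cannot catch every mismatch (a non-Gaussian distribution can share the same mean and variance), so a full goodness-of-fit statistic per slice is the stricter choice.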

Paper: Belief Dynamics Reveal the Dual Nature of In-Context Learning and Activation Steering (arxiv.org/abs/2511.00617)

- FSDP + TP + SP

- Int6 gradient communication

- Quantization Aware Training (QAT)

blog.character.ai/technical/in...
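
To make "Int6 gradient communication" concrete: each worker can quantize its gradient to 6-bit signed integers plus one floating-point scale before sending, and the receiver dequantizes and averages. The sketch below assumes symmetric per-tensor quantization with round-to-nearest and a code range of [-31, 31]; the linked post's actual scheme (scale granularity, rounding, bit packing) may differ, and all function names are hypothetical. The same quantize-then-dequantize step ("fake quantization") is also the usual building block of QAT.

```python
def quantize_int6(values):
    """Hypothetical symmetric per-tensor int6 quantizer.

    Maps each float to an integer code in [-31, 31] (fits in 6 signed bits)
    using one shared scale. Returns (codes, scale).
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 31 if max_abs > 0 else 1.0
    codes = [max(-31, min(31, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int6(codes, scale):
    """Recover approximate floats; per-value error is at most scale / 2."""
    return [c * scale for c in codes]


def allreduce_mean_int6(worker_grads):
    """Simulated all-reduce: each worker 'sends' int6 codes plus a scale,
    and the receiver dequantizes and averages elementwise."""
    messages = [quantize_int6(g) for g in worker_grads]
    decoded = [dequantize_int6(codes, scale) for codes, scale in messages]
    n = len(decoded)
    return [sum(col) / n for col in zip(*decoded)]


if __name__ == "__main__":
    grads = [[0.10, -0.40, 0.05, 0.00], [0.20, -0.30, -0.05, 0.01]]
    exact = [sum(col) / len(grads) for col in zip(*grads)]
    approx = allreduce_mean_int6(grads)
    print([round(a, 4) for a in approx])
    print("max abs error:", max(abs(a - e) for a, e in zip(approx, exact)))
```

At 6 bits per value versus 16 for bf16, this sends about 2.7× fewer bits per gradient element (ignoring the per-tensor scale and packing overhead), at the cost of a bounded rounding error of at most half a quantization step per value.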
