Bayesian | Computational guy | Name dropper | Deep learner | Book lover

Opinions are my own.

Interactive version: kucharssim.github.io/bayesflow-co...

PDF: osf.io/preprints/ps...

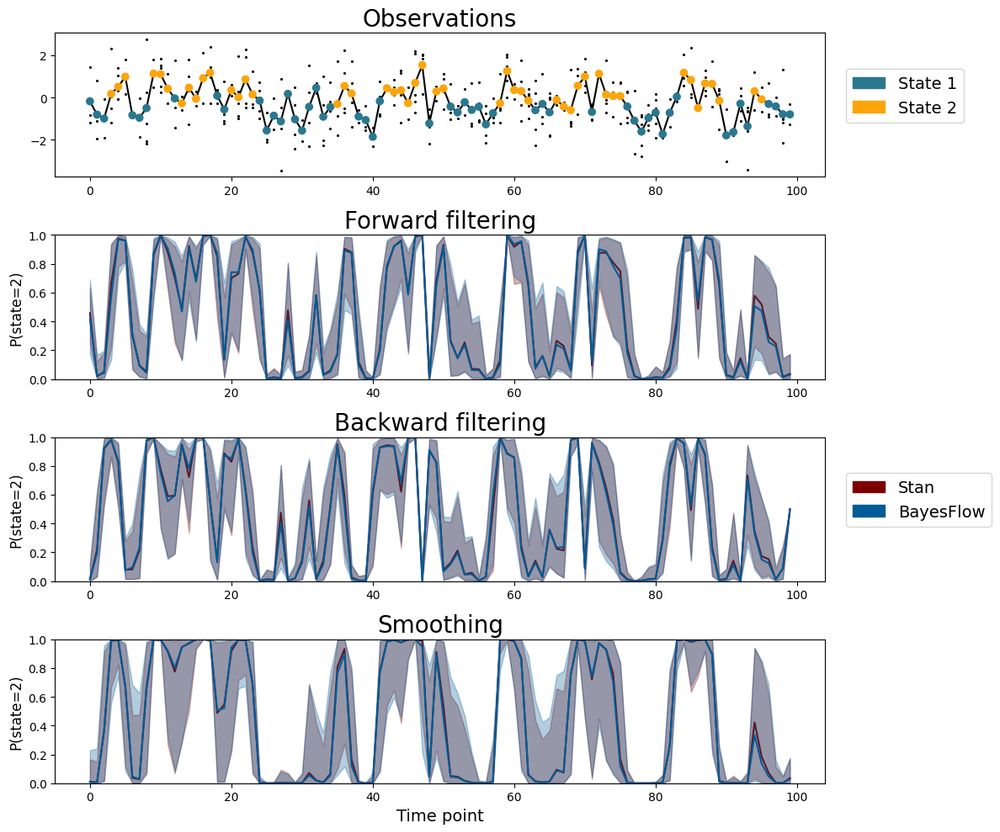

BayesFlow allows:

• Approximating the joint posterior of model parameters and mixture indicators

• Inferences for independent and dependent mixtures

• Amortization for fast and accurate estimation

📄 Preprint

💻 Code

jointly led by @marvinschmitt.com and @chengkunli.bsky.social, and with @avehtari.bsky.social @paulbuerkner.com @stefanradev.bsky.social

MCMC + amortized methods for the best of both worlds (speed & guarantees!)

arxiv.org/abs/2409.04332

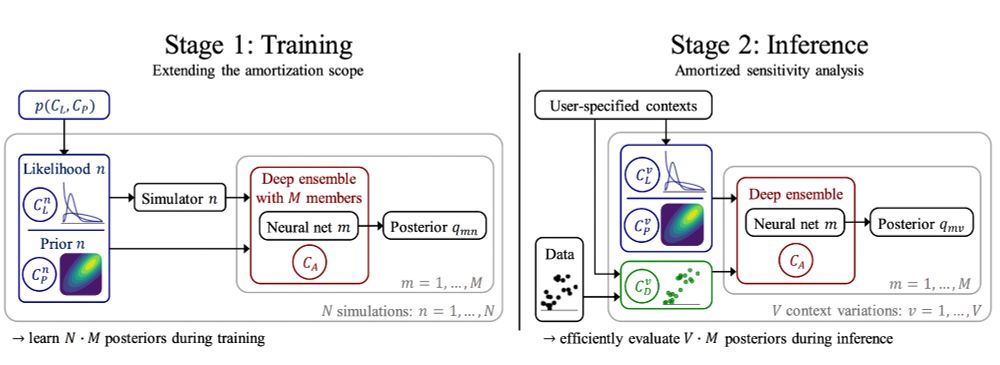

Sensitivity-aware amortized inference explores the iceberg:

⋅ Test alternative priors, likelihoods, and data perturbations

⋅ Deep ensembles flag misspecification issues

⋅ No model refits required during inference

🔗 openreview.net/forum?id=Kxt...

In our new paper, we (Florence Bockting, @stefanradev.bsky.social, and I) develop a method for expert prior elicitation using generative neural networks and simulation-based learning.

arxiv.org/abs/2411.15826
