Come by today at 10.40-12.00 in East Ballroom C to ask me about:

1) 🏰 bio-inspired naturally robust models

2) 🎓 Interpretability & robustness

3) 🖼️ building a generator for free

4) 😵‍💫 attacking GPT-4, Claude & Gemini

Example: Stephen Hawking that "looks" like the Never Gonna Give You Up song by Rick Astley (www.youtube.com/watch?v=mf_E...)

11/12

Turning Isaac Newton into Albert Einstein generates a perturbation that adds a mustache 🥸

Normally the perturbation looks like noise - our multi-res prior gets us a mustache!

10/12

No diffusion or GANs involved anywhere here! No extra training either!

9/12

Just express the attack perturbation as a sum over resolutions => natural looking images instead of noisy attacks!

8/12
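A minimal sketch of what "a sum over resolutions" could look like, assuming nearest-neighbour upsampling and a hypothetical set of resolutions (neither is specified in this thread). The helper names `upsample_nearest` and `multires_perturbation` are made up for illustration. In an actual attack, you would optimize the per-resolution components rather than the raw pixels.

```python
import numpy as np

def upsample_nearest(x, size):
    """Nearest-neighbour upsample a square (r, r, c) array to (size, size, c)."""
    r = x.shape[0]
    idx = (np.arange(size) * r) // size  # map each output pixel to a source pixel
    return x[idx][:, idx]

def multires_perturbation(components, size):
    """Sum the per-resolution components after bringing each to full size."""
    return sum(upsample_nearest(p, size) for p in components)

# Hypothetical setup: one small random component per resolution.
rng = np.random.default_rng(0)
resolutions = [1, 2, 4, 8, 16, 32]  # assumption, not from the thread
components = [0.01 * rng.standard_normal((r, r, 3)) for r in resolutions]

# Full-resolution perturbation that would be added to a 32x32 RGB image.
delta = multires_perturbation(components, size=32)
```

Because coarse components are shared across many pixels, a change at low resolution moves whole regions of the image at once, which is one plausible reason the result looks less like pixel noise.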

Normally, this gives a noisy super-stimulus that looks like nothing to a human. For our network, we get interpretable images 🖼️!

7/12

We call this the Interpretability-Robustness Hypothesis. We can clearly see why the attack perturbation does what it does - we get much better alignment

6/12

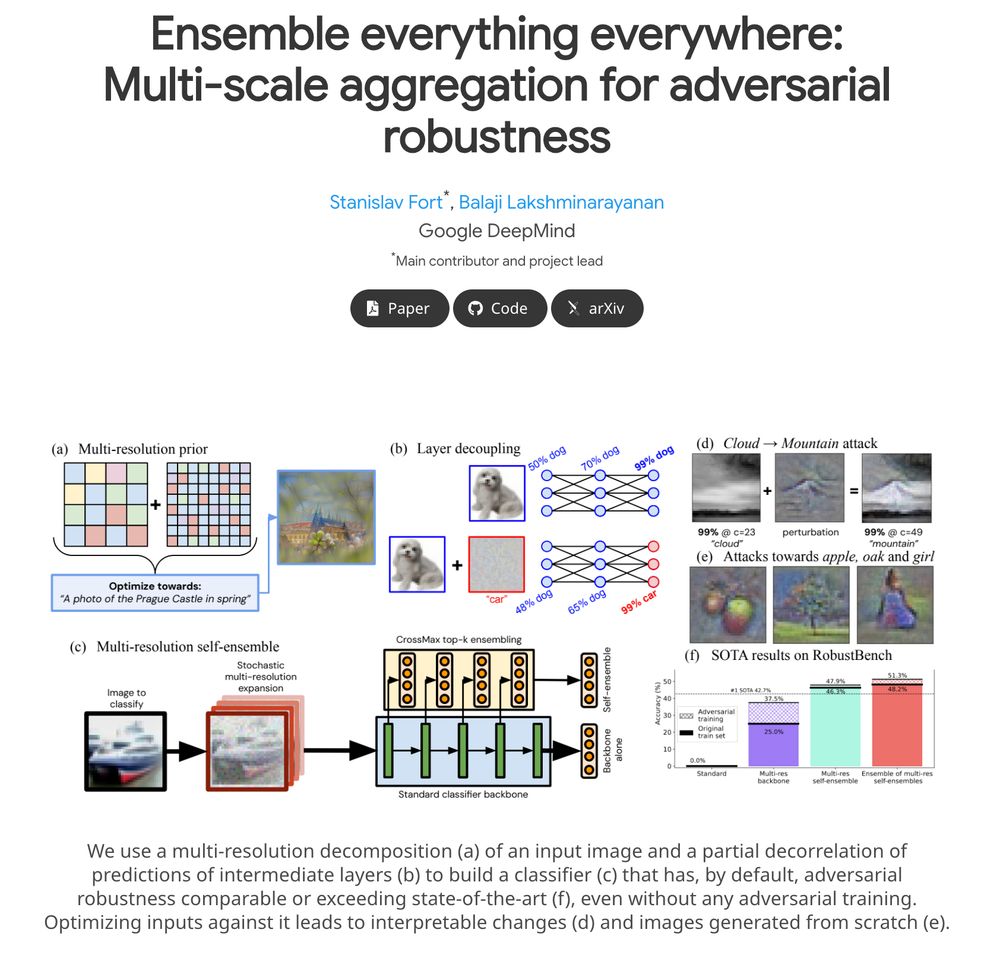

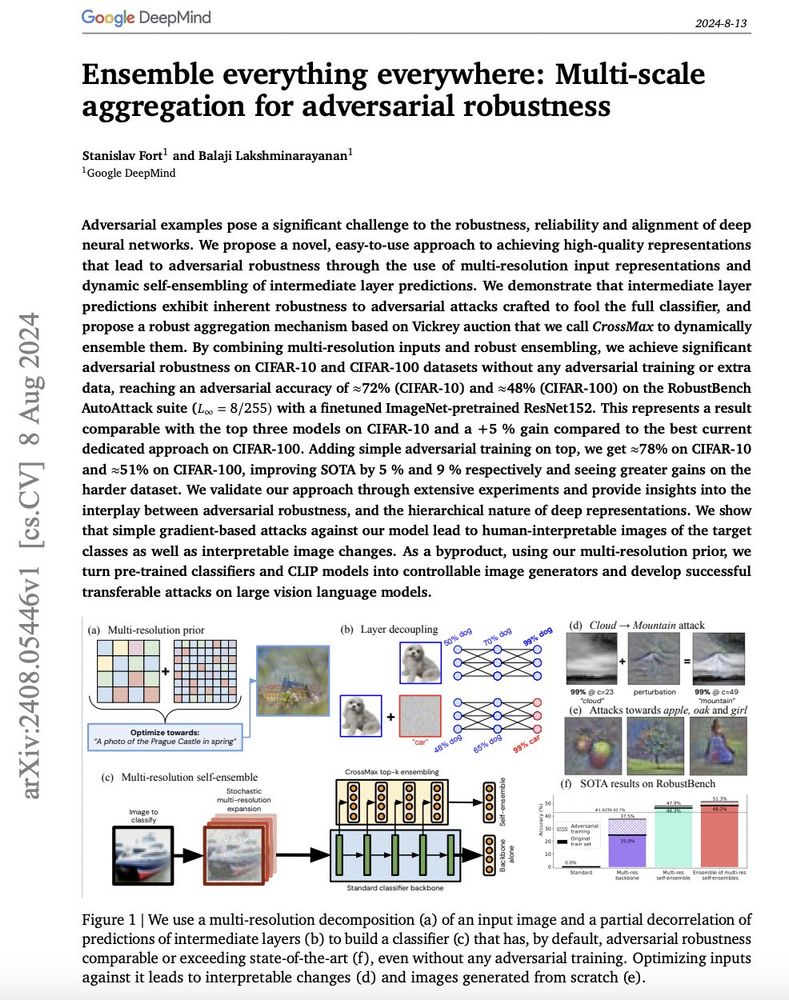

1) 🔎 at all resolutions &

2) 🪜 at all abstraction scales

➡️ much harder to attack

➡️ matches or beats SOTA (=brute force) on CIFAR-10/100 adversarial accuracy on RobustBench, cheaply & w/o any adversarial training. With adversarial training, it's even better! 5/12

We do this via CrossMax, our new robust ensembling procedure inspired by Vickrey auctions & balanced allocation, which behaves in an anti-Goodhart way

4/12
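A rough sketch of how a Vickrey-style aggregation might work. The thread only names the inspirations, so the exact steps below are an assumption: normalize each predictor's logits, normalize each class across predictors, then score each class by its second-highest value (the "second price"), so no single predictor can win a class on its own. The function name `crossmax` and the parameter `k` are illustrative.

```python
import numpy as np

def crossmax(logits, k=2):
    """Aggregate logits of shape (n_predictors, n_classes) into (n_classes,)."""
    # Assumed step 1: shift each predictor so its top class sits at 0.
    z = logits - logits.max(axis=1, keepdims=True)
    # Assumed step 2: shift each class so its top predictor sits at 0.
    z = z - z.max(axis=0, keepdims=True)
    # Assumed step 3: per class, keep the k-th highest score over predictors.
    z_sorted = np.sort(z, axis=0)[::-1]  # descending along the predictor axis
    return z_sorted[k - 1]

# Two predictors favour class 0; a third is "hijacked" towards class 2.
logits = np.array([
    [5.0, 1.0, 0.0],
    [4.0, 2.0, 0.0],
    [0.0, 0.0, 100.0],
])
scores = crossmax(logits)
print(scores.argmax())             # 0: the outlier alone cannot win
print(logits.mean(axis=0).argmax())  # 2: plain averaging gets hijacked
```

The toy example shows the anti-Goodhart flavour: pushing one predictor's logit arbitrarily high does not raise the class's final score, since only the runner-up counts.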

A dog 🐕 attacked to look like a car 🚘 still has dog 🐕-like edges, textures, & even higher-level features.

3/12

At low learning rates (but not high ones!) we get natural robust features by default

2/12

Inspired by biology we 1) get adversarial robustness + interpretability for free, 2) turn classifiers into generators & 3) design attacks on GPT-4

vvvvvvv The poem follows in the replies vvvvvv

Blog post: www.lesswrong.com/posts/oPnFzf...
