Inspired by biology, we 1) get adversarial robustness + interpretability for free, 2) turn classifiers into generators & 3) design attacks on GPT-4

Come by today at 10.40-12.00 in East Ballroom C to ask me about:

1) 🏰 bio-inspired naturally robust models

2) 🎓 Interpretability & robustness

3) 🖼️ building a generator for free

4) 😵‍💫 attacking GPT-4, Claude & Gemini

vvvvvvv The poem follows in the replies vvvvvv

(www.youtube.com/watch?v=mf_E...)

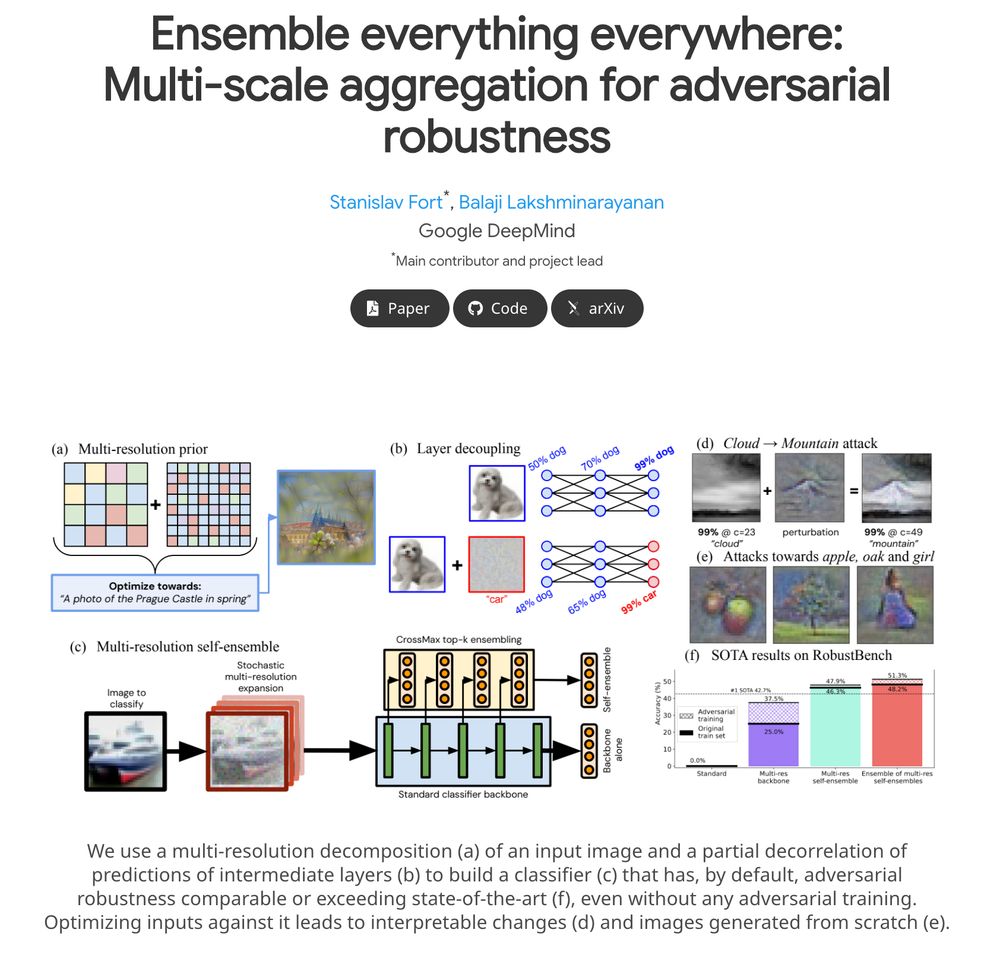

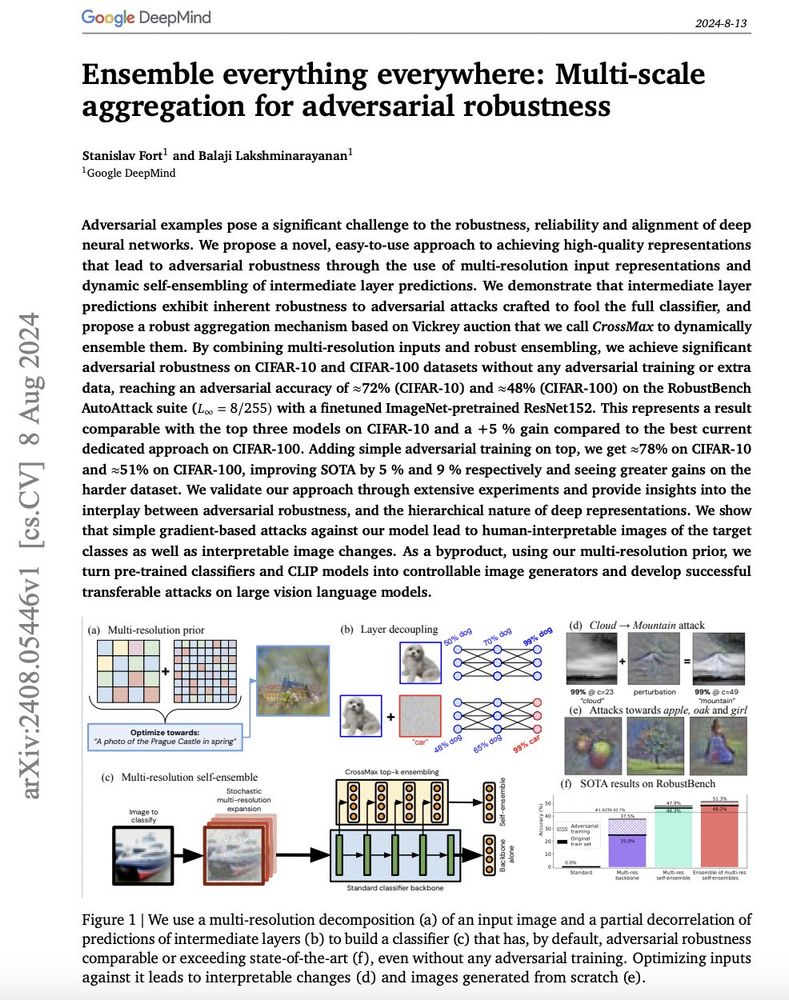

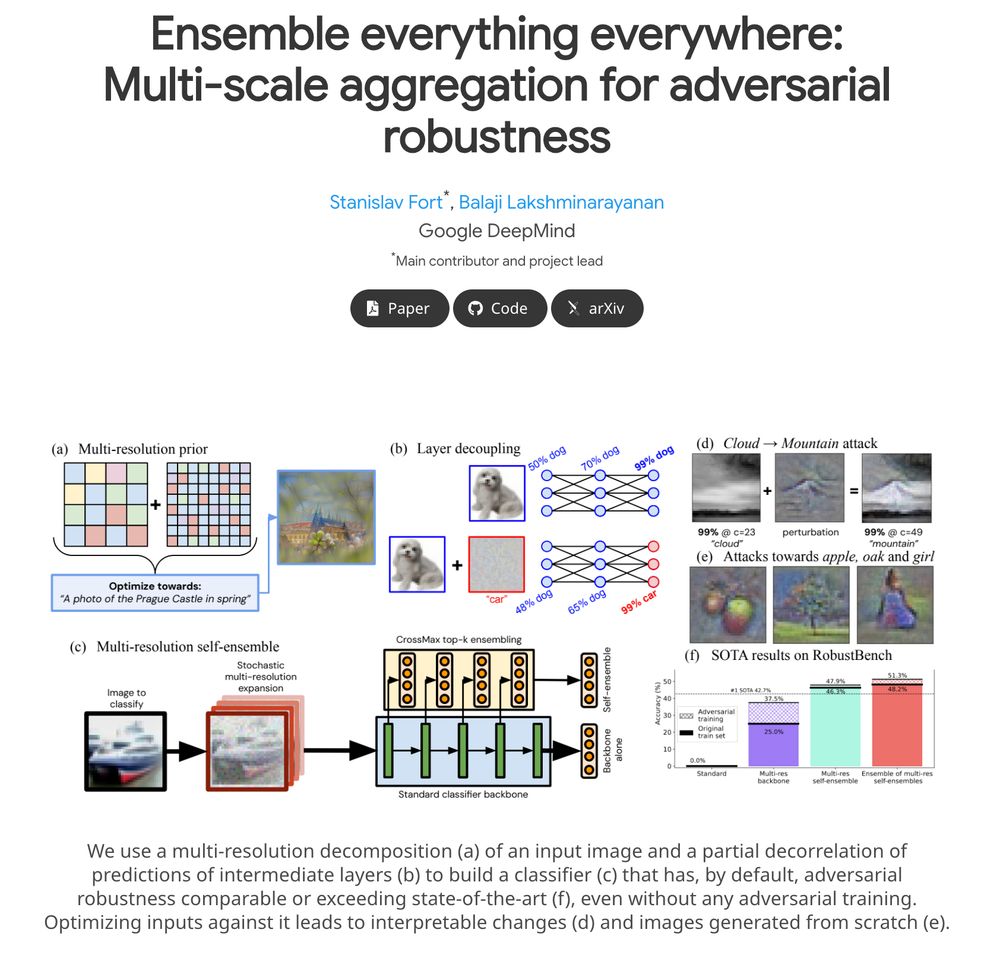

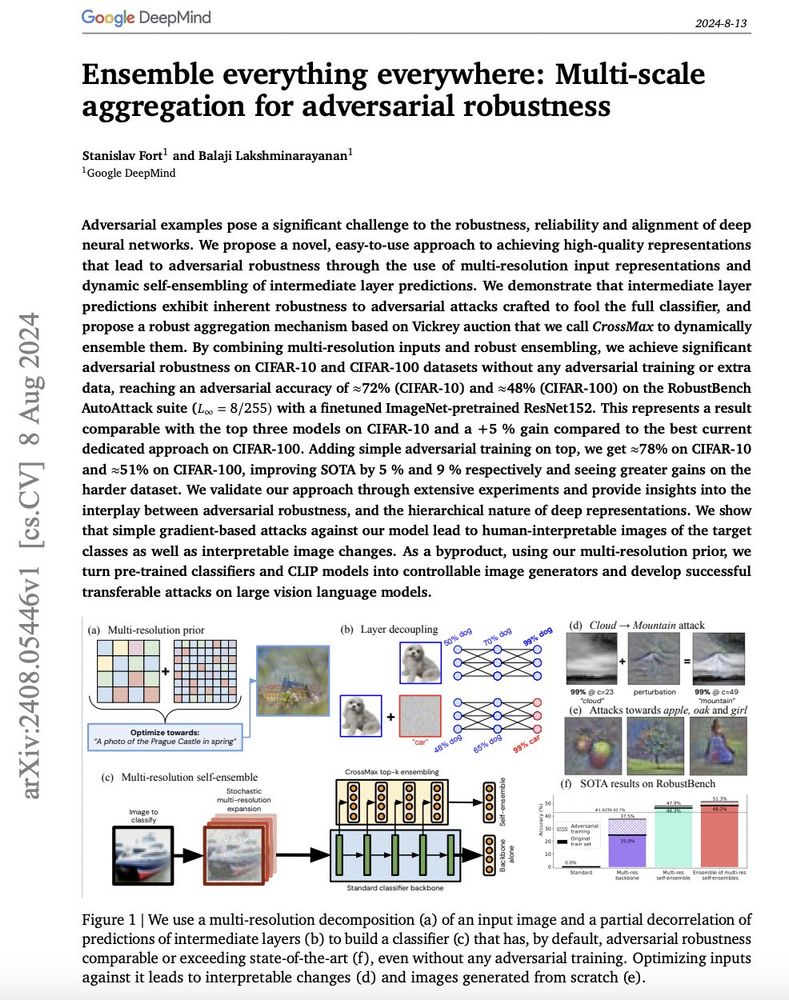

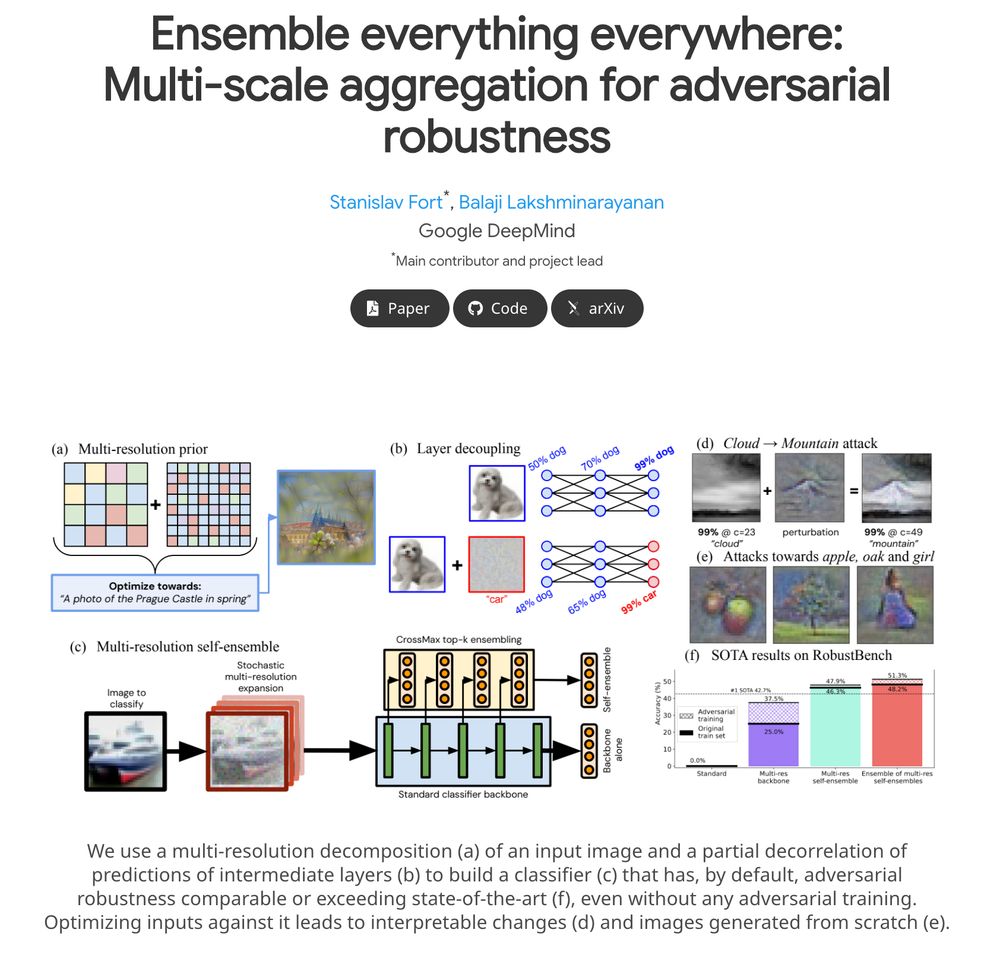

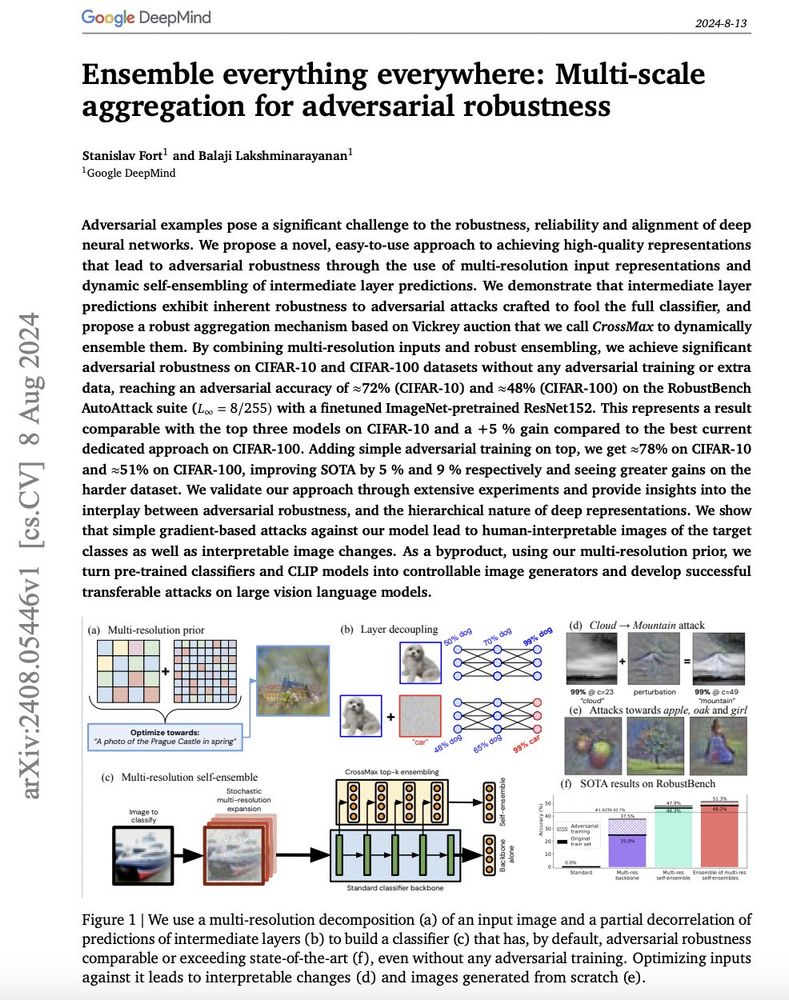

📝Ensemble everything everywhere: Multi-scale aggregation for adversarial robustness

Paper below 👇

Blog post: www.lesswrong.com/posts/oPnFzf...
