Multi-modal ML | Alignment | Culture | Evaluations & Safety | AI & Society

Web: https://www.srishti.dev/

I'm excited to share our preprint answering these questions:

"Epistemic Diversity and Knowledge Collapse in Large Language Models"

📄Paper: arxiv.org/pdf/2510.04226

💻Code: github.com/dwright37/ll...

1/10

Understanding news output and embedded biases is especially important in today's environment and it's imperative to take a holistic look at it.

Looking forward to presenting it in Suzhou!

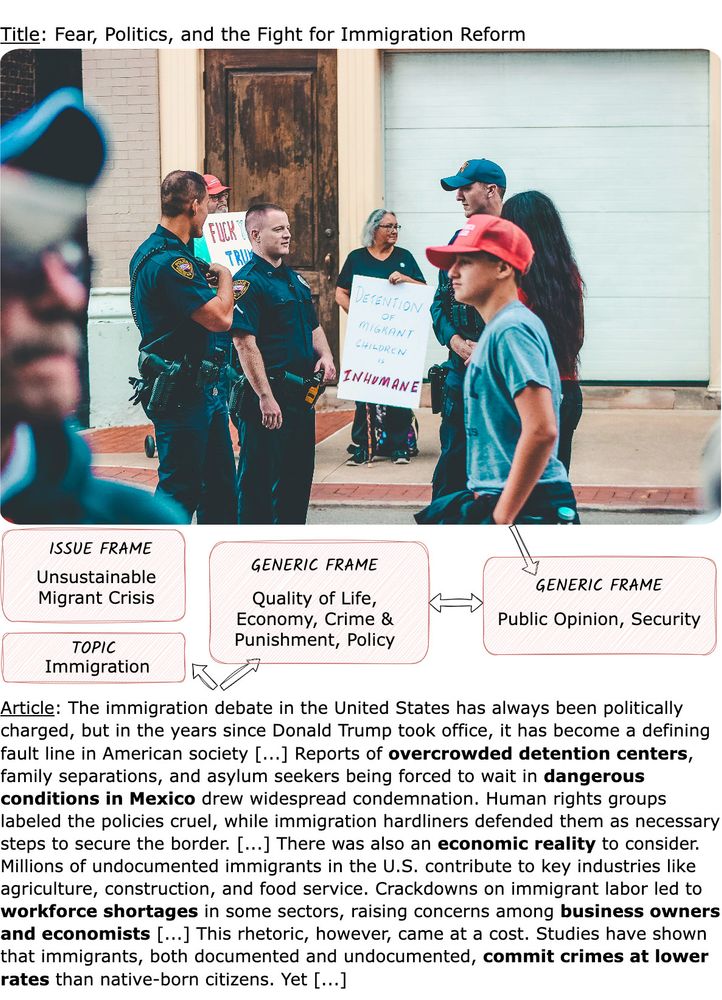

News articles often convey different things in text vs. image. Recent work in computational framing analysis has analysed the article text but the corresponding images in those articles have been overlooked.

We propose multi-modal framing analysis of news: arxiv.org/abs/2503.20960

🗒️ Add your expression of interest to join @copenlu.bsky.social here by 20 July: forms.office.com/e/HZSmgR9nXB

Selected candidates will be invited to submit a DARA fellowship application with me: daracademy.dk/fellowship/f...

Left: Tsung-Yi Lin, Guandao Yang, Katie Luo, Boyi Li; Right: Menglin Jia, Subarna Tripathi, Ph.D., Srishti, Xun Huang

Infra Goals:

🔧 Share evaluation outputs & params

📊 Query results across experiments

Perfect for 🧰 hands-on folks ready to build tools the whole community can use

Join the EvalEval Coalition here 👇

forms.gle/6fEmrqJkxidy...

sites.google.com/view/fgvc12

We are looking forward to a series of talks on semantic granularity, covering topics such as machine teaching, interpretability and much more!

Room 104 E

Schedule & details: sites.google.com/view/fgvc12

@cvprconference.bsky.social #CVPR25

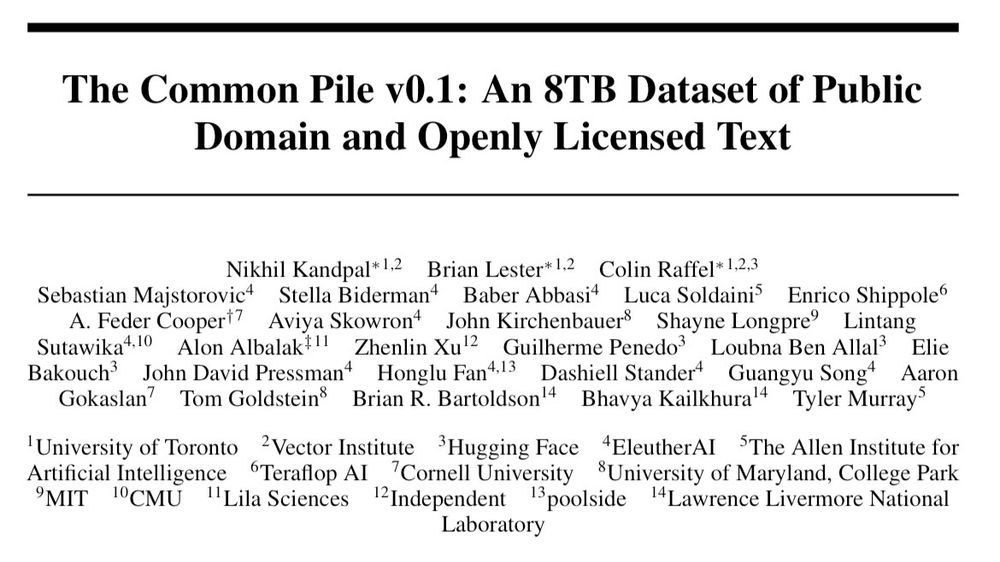

We are thrilled to announce the Common Pile v0.1, an 8TB dataset of openly licensed and public domain text. We train 7B models for 1T and 2T tokens and match the performance of similar models like LLaMA 1 & 2.

I spoke to political cartoonists, including Pulitzer-winner Mark Fiore, about how they are using AI image generators in their work. My latest for @niemanlab.org.

www.niemanlab.org/2025/06/i-do...

A position paper by me, @dbamman.bsky.social, and @ibleaman.bsky.social on cultural NLP: what we want, what we have, and how sociocultural linguistics can clarify things.

Website: naitian.org/culture-not-...

1/n

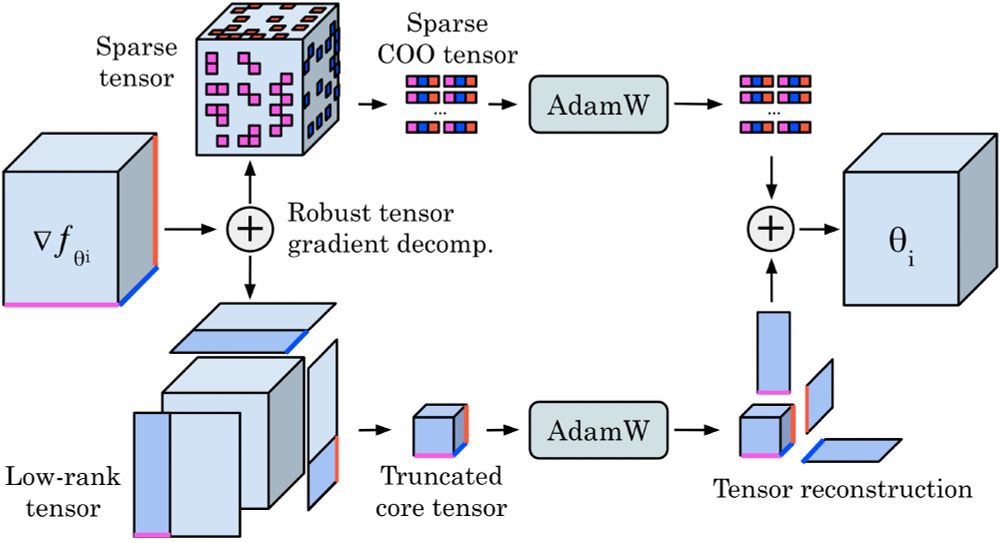

We use a robust decomposition of the gradient tensors into low-rank + sparse parts to reduce optimizer memory for Neural Operators by up to 𝟕𝟓%, while matching the performance of Adam, even on turbulent Navier–Stokes (Re 10e5).

Paper 🔗: arxiv.org/pdf/2505.22793
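
For readers unfamiliar with the idea, here is a rough sketch of what a "low-rank + sparse" split can look like on a plain 2D matrix. It is not the paper's method (which works on gradient tensors inside the optimizer); it only illustrates the general technique using a truncated SVD plus thresholding of the residual. The function name `lowrank_plus_sparse` and the parameters `rank` and `sparse_frac` are hypothetical, chosen for this example.

```python
import numpy as np

def lowrank_plus_sparse(G, rank, sparse_frac=0.05):
    """Split G into a rank-`rank` part L and a sparse part S so that G ~ L + S.

    Illustrative only: a hypothetical helper, not the paper's algorithm.
    """
    # Truncated SVD: keep the top `rank` singular triplets.
    U, s, Vt = np.linalg.svd(G, full_matrices=False)
    L = (U[:, :rank] * s[:rank]) @ Vt[:rank]
    # Keep only the largest-magnitude residual entries as the sparse part.
    R = G - L
    k = max(1, int(sparse_frac * R.size))
    thresh = np.partition(np.abs(R).ravel(), -k)[-k]
    S = np.where(np.abs(R) >= thresh, R, 0.0)
    return L, S

# Synthetic stand-in for a gradient: a rank-2 matrix plus a few large outliers.
rng = np.random.default_rng(0)
G = rng.standard_normal((64, 2)) @ rng.standard_normal((2, 32))
idx = rng.choice(G.size, size=20, replace=False)
G.flat[idx] += 10.0

L, S = lowrank_plus_sparse(G, rank=2, sparse_frac=0.02)
err_lowrank_only = np.linalg.norm(G - L)
err_with_sparse = np.linalg.norm(G - L - S)
```

The intuition for the memory saving is that the low-rank part can be stored as its thin factors and the sparse part as a short list of values with indices, rather than as a full dense array. How the paper actually builds and uses these parts is described in the linked preprint.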

Can Multimodal Retrieval Enhance Cultural Awareness in Vision-Language Models?

Excited to introduce RAVENEA, a new benchmark aimed at evaluating cultural understanding in VLMs through RAG.

arxiv.org/abs/2505.14462

More details:👇

- 3M+ visual elements from historic US newspapers — photos, maps, cartoons, OCR + metadata.

- Parquet = fast filters, easier analysis.

- Great for ML + cultural research.

👉 huggingface.co/datasets/big...

Curious how MULTIMODALITY can enhance FEW-SHOT 3D SEGMENTATION WITHOUT any additional cost? Come chat with us at the poster session — always happy to connect!🤝

🗓️ Fri 25 Apr, 3 - 5:30 pm

📍 Hall 3 + Hall 2B #504

More follow
