@uni_of_essex

GitHub: github.com/soran-ghaderi | TransformerX/torchEBM libraries

Website: https://soran-ghaderi.github.io/

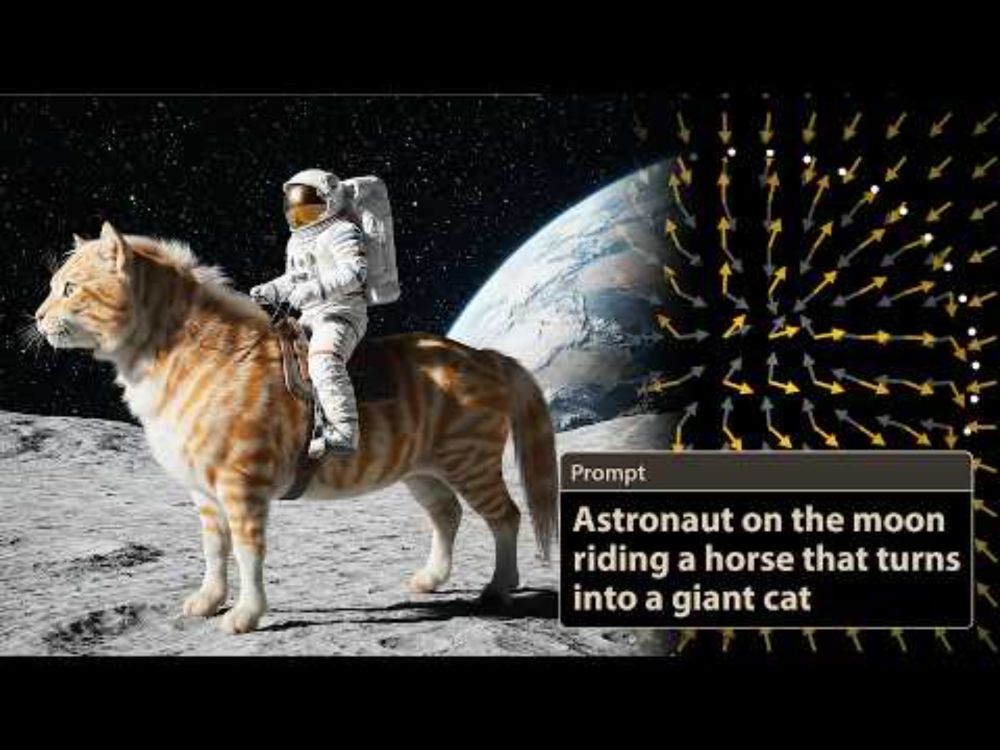

Produced by Welch Labs, this is the first in a short series of 3b1b this summer. I enjoyed providing editorial feedback throughout the last several months, and couldn't be happier with the result.

WACV'26 (R1,reg): 15 days.

WACV'26 (R1,paper): 22 days.

AAAI'26 (paper): 36 days.

3DV'26: 52 days.

WACV'26 (R2,reg): 78 days.

WACV'26 (R2,paper): 85 days.

ICLR'26 (abs): 85 days.

ICLR'26 (paper): 90 days.

That was quite popular and here is a synthesis of the responses:

Trained using TorchEBM v0.3.0!

I will be uploading more experimental animations for different configs and datasets; this one is a Gaussian mixture with 4 components.

If you plan to submit a proposal for a workshop, please read our detailed guidance in our new blog post: blog.neurips.cc/2025/04/12/g...

Read this blog post on how to train an energy-based model (EBM) on a 2D Gaussian mixture distribution using the TorchEBM library. 👇

#EBM #OpenSource #DeepLearning #GenerativeAI

📌 This is the first model trained using #TorchEBM

- The probability density (blue colors) learned by the EBM sharpens around the white circles.

- The red dots move closer to the Gaussian mixture (GM) distribution.

Link 👇

#EBM #GenerativeAI

📌 Collaboration is welcome!

Link: ...

I'll publish an intro to Hamiltonian mechanics and Monte Carlo on the #TorchEBM lib blog soon. #Hamiltonian #Sampling #EnergyBasedModels #MCMC #GenerativeAI

We got this idea after their cool work on improving Plug and Play with FM: arxiv.org/abs/2410.02423

Join us on Zoom at 9am PT / 12pm ET / 6pm CET: portal.valencelabs.com/logg

RSS'25 (abs): 54 days.

SIGGRAPH'25 (paper-md5): 60 days.

RSS'25 (paper): 61 days.

ICML'25: 67 days.

ICCV'25: 102 days.
