openai.com/index/sharin...

openai.com/index/sharin...

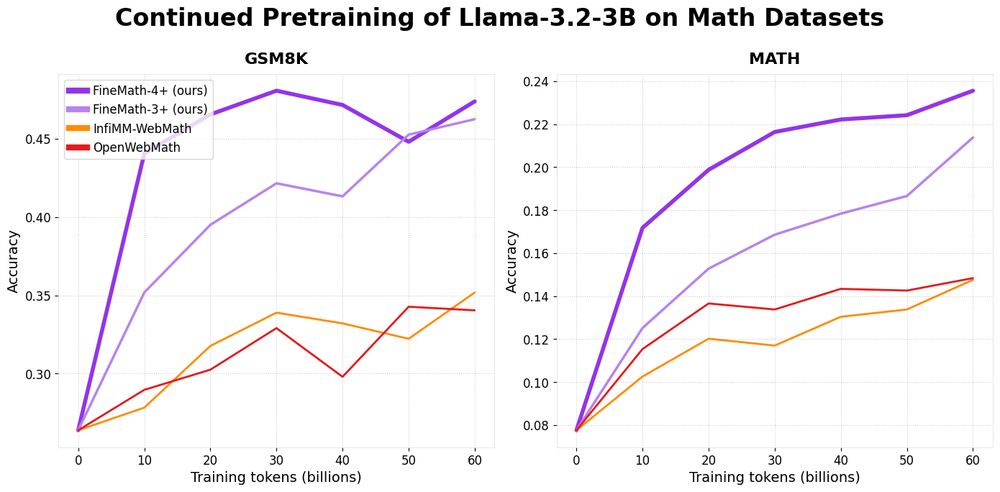

Math remains challenging for LLMs and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵

Math remains challenging for LLMs and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵