PhD student in CS + AI @usc.edu. CS undergrad and master's at ShanghaiTech. LLM reasoning, RL, AI4Science.

The trained model is transparent, revealing where reasoning abilities hide. It is also generalizable and modular, enabling transfer across datasets and models.

In SAE-Tuning, the SAE first “extracts” latent reasoning features and then guides a standard supervised fine-tuning process to “elicit” reasoning abilities.

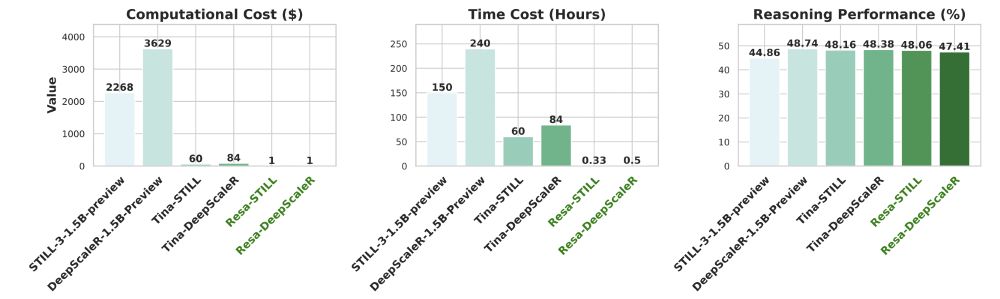

Using only 1 hour of training at $2 cost without any reasoning traces, we find a way to train 1.5B models via SAEs to score 43.33% Pass@1 on AIME24 and 90% Pass@1 on AMC23.
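
For readers unfamiliar with the metric, here is a minimal sketch of how a Pass@1 score can be computed, using the standard unbiased pass@k estimator. The function names and the tally below are hypothetical: the post does not say how many samples per problem were drawn, and 13 of 30 is just one tally that yields 43.33% on a 30-problem set such as AIME24.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        # Fewer than k incorrect samples, so any k-subset contains a correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_1(results: list[tuple[int, int]]) -> float:
    """Average pass@1 over problems; each entry is (samples, correct)."""
    return sum(pass_at_k(n, c, 1) for n, c in results) / len(results)


# Hypothetical tally (an assumption, not from the post):
# 30 problems, one sample each, 13 solved.
aime_like = [(1, 1)] * 13 + [(1, 0)] * 17
print(f"{benchmark_pass_at_1(aime_like):.2%}")  # 43.33%
```

With more than one sample per problem, Pass@1 reduces to the mean fraction of correct samples, which is why it is often reported as an average over several runs.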

Paper: arxiv.org/abs/2504.15777

Notion Blog: shangshangwang.notion.site/tina

Code: github.com/shangshang-w...

Model: huggingface.co/Tina-Yi

Training Logs: wandb.ai/upup-ashton-...

Tina's avatar is generated by GPT-4o based on KYNE's girls.

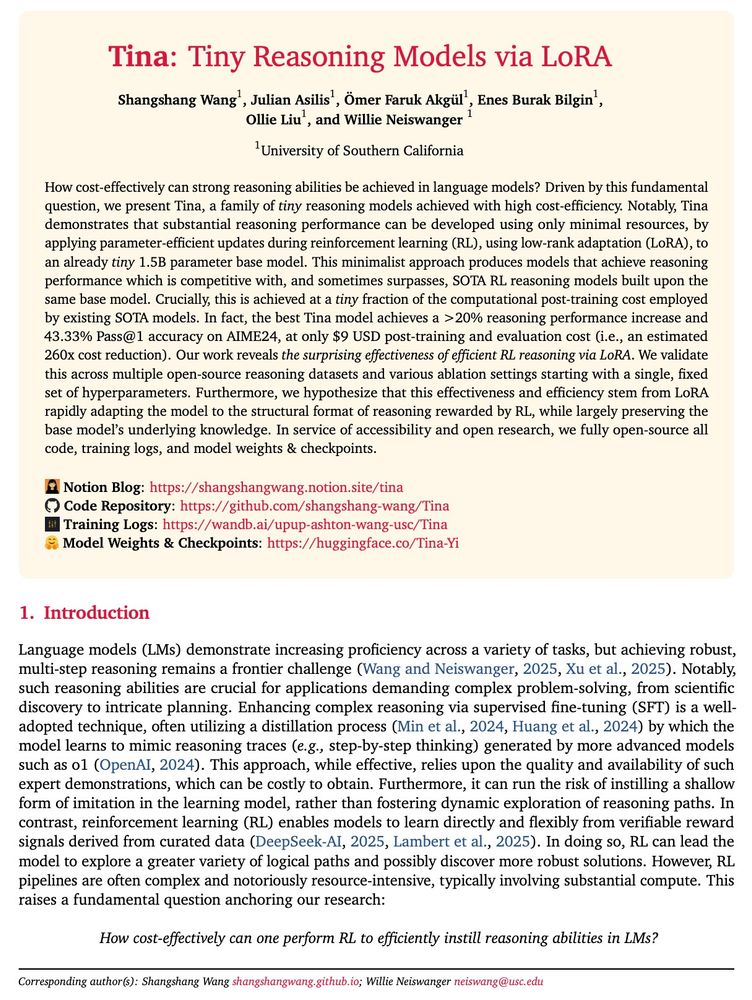

[1/9] Introducing Tina: A family of tiny reasoning models with strong performance at low cost, providing an accessible testbed for RL reasoning. 🧵

We then collect survey, evaluation, benchmark, and application papers, as well as online resources like blogs, posts, videos, code, and data.

Verifiers serve as a key component in both post-training (e.g., as reward models) and test-time compute (e.g., as signals to guide search). Our fourth section collects thoughts on various verification methods.
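
To make the two roles concrete, here is a toy sketch in which the same verifier function acts as a reward signal and as a selector for best-of-N search. Everything in it (`best_of_n`, `toy_verifier`, the candidate strings) is a hypothetical illustration, not taken from the collected resources.

```python
from typing import Callable, Sequence


def toy_verifier(answer: str) -> float:
    """Hypothetical rule-based verifier: 1.0 if the last token is '4', else 0.0."""
    tokens = answer.split()
    return 1.0 if tokens and tokens[-1] == "4" else 0.0


def best_of_n(candidates: Sequence[str], verifier: Callable[[str], float]) -> str:
    """Test-time use: return the candidate the verifier scores highest."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=verifier)


candidates = ["2 + 2 = 5", "2 + 2 = 4", "2 + 2 = 22"]

# Post-training use: the verifier's scores serve as per-sample rewards.
rewards = [toy_verifier(c) for c in candidates]

# Test-time use: the same scores guide selection among sampled answers.
chosen = best_of_n(candidates, toy_verifier)
print(rewards, chosen)
```

In practice the verifier could be a learned reward model or a programmatic checker; the calling pattern stays the same.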

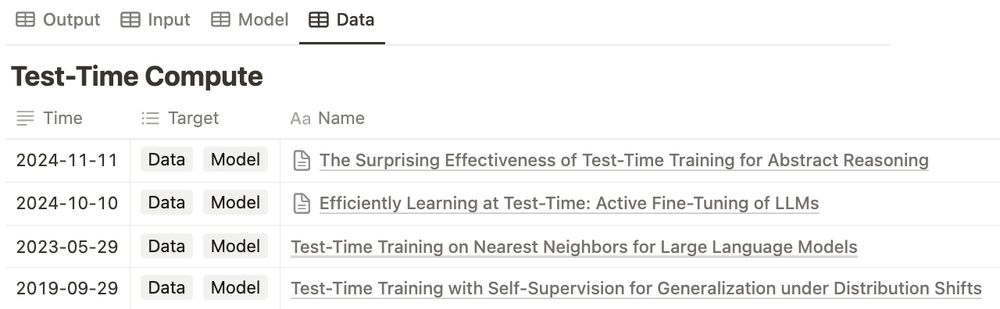

Test-time compute is an emerging field where folks are trying different methods (e.g., search) and using extra components (e.g., verifiers). Our third section classifies them based on the optimization targets for LLMs.

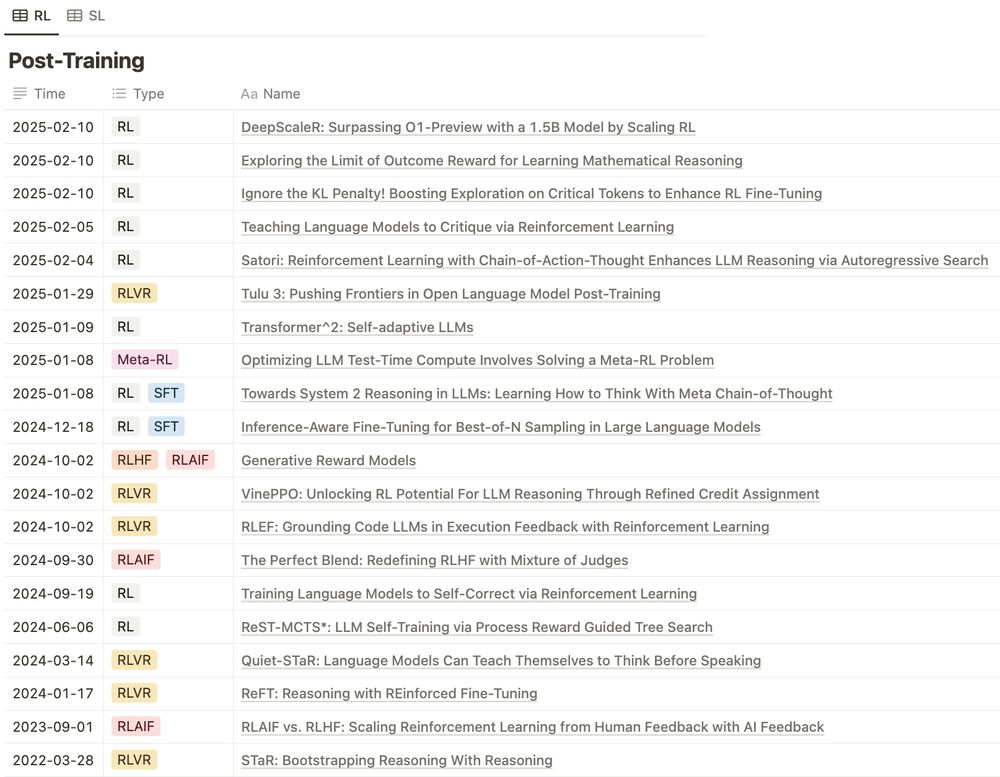

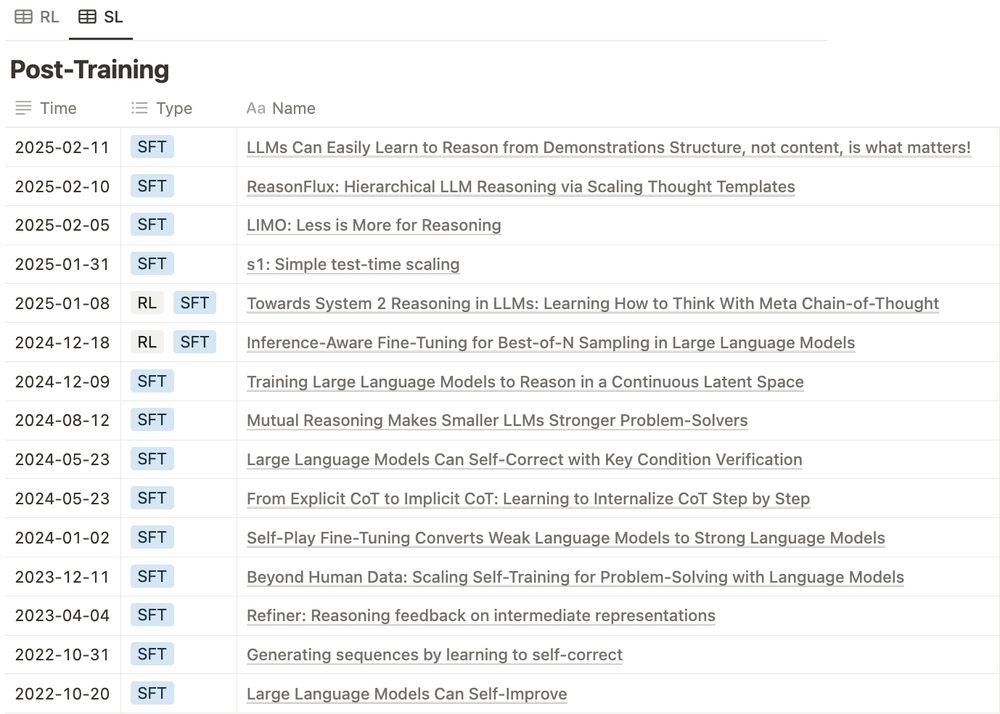

Our second section collects thoughts on post-training methods for LLM reasoning, including the currently popular RL-based methods as well as SFT-based ones.

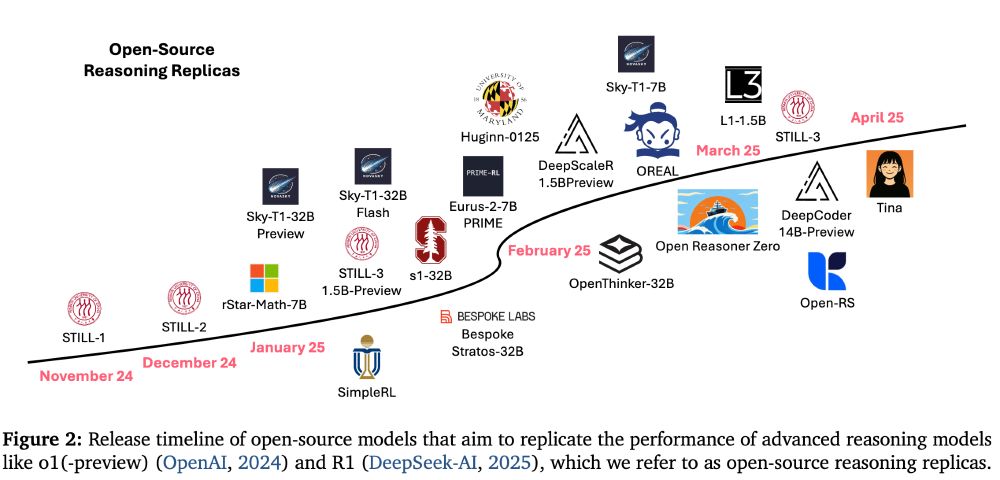

The discussion on reasoning ability started to go viral with the release of the OpenAI o-series and DeepSeek R1 models. Our first section collects thoughts on these and other SOTA reasoning models.

From OpenAI's o-series to DeepSeek R1, from post-training to test-time compute — we break it down into structured spreadsheets. 🧵
