Shahab Bakhtiari

@shahabbakht.bsky.social

|| assistant prof at University of Montreal || leading the systems neuroscience and AI lab (SNAIL: https://www.snailab.ca/) 🐌 || associate academic member of Mila (Quebec AI Institute) || #NeuroAI || vision and learning in brains and machines

Cool paper. Let’s do a journal club on it.

October 27, 2025 at 12:56 AM

It does feel a lot like that in this interview actually. He seems to be pushing a strong position for purely experience-dependent intelligence.

Though I just remembered this bit from their ‘reward is enough’ paper, which makes the notion of reward so wide it becomes almost meaningless.

October 4, 2025 at 4:26 AM

Just read through this paper. Very nice and comprehensive analysis of the IBL data.

This figure looks a lot like salt & pepper organization taken to the extreme.

September 12, 2025 at 2:51 PM

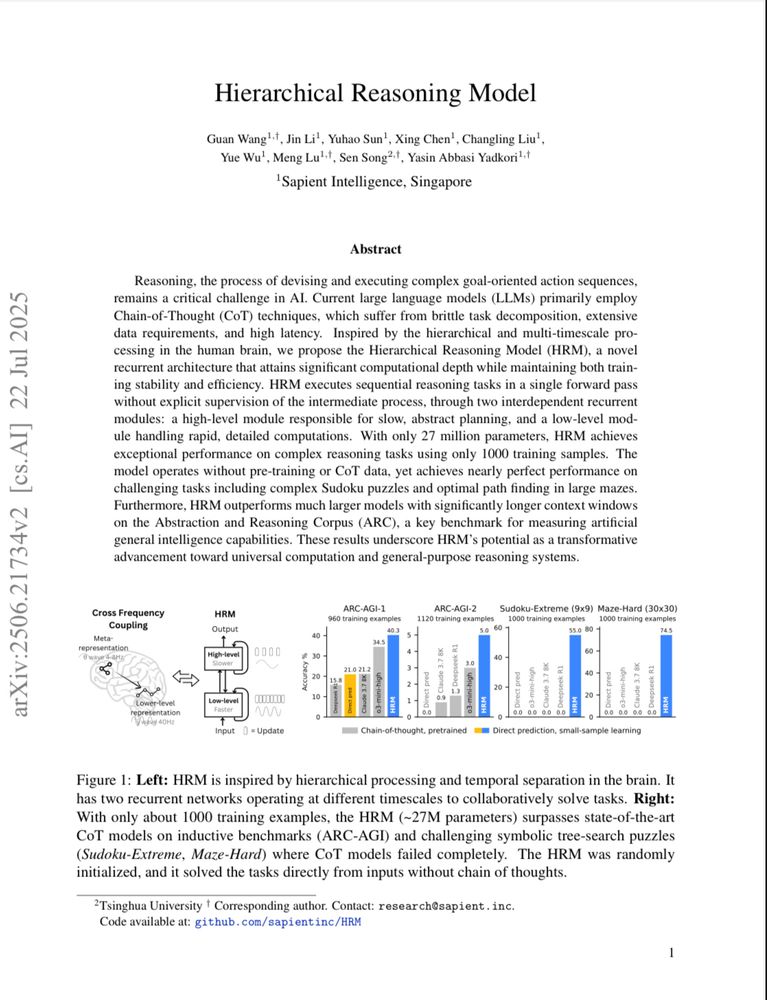

Well … the Hierarchical Reasoning Model (HRM) didn’t quite hold up under scrutiny, at least not its central claim, i.e., the role of its brain-inspired architecture: arcprize.org/blog/hrm-ana...

But … from now on, HRM is going to be my best teaching material for why AI models should be open.

August 16, 2025 at 12:23 PM

This paper is making the rounds: arxiv.org/abs/2506.21734

A tiny (27M-parameter) brain-inspired model, trained on just 1,000 samples, outperforms o3-mini-high on reasoning tasks.

#MLSky 🧠🤖

August 3, 2025 at 2:01 AM

Meta is creating another salary bubble by offering unprecedented compensation to poach AI scientists from other places, then tying their pay to performance metrics.

IMO, this is a recipe for disaster for a field that still desperately needs rigorous scientific research.

July 10, 2025 at 1:54 PM

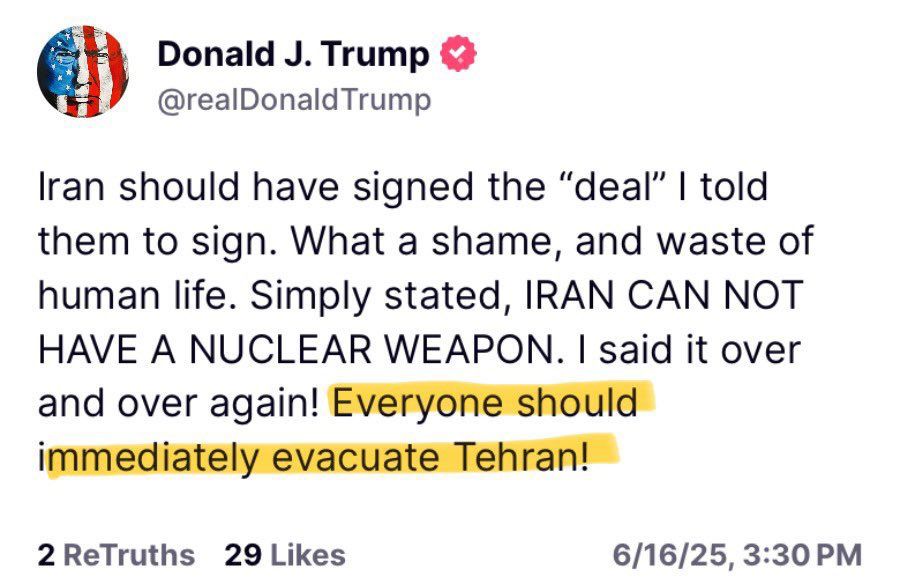

A city with a population of 15 million, and all roads leading out are blocked. This is simply a murder threat.

June 17, 2025 at 3:06 AM

So for foundation models in neuroscience: if training on large multimodal neural & behavioral data is the first component, what would serve as the reward signals for the RL component?

www.mechanize.work/blog/how-to-...

🧠🤖

June 3, 2025 at 3:11 AM

I keep going back to this 1992 paper by David Robinson: www.cambridge.org/core/journal...

April 29, 2025 at 2:24 PM

Another surprising finding (not from lesioning, though): the model trained on V1 data generalized equally well not only to V1 but also to mouse higher visual areas (HVAs), despite the supposed functional hierarchy from V1 to HVAs.

April 10, 2025 at 3:28 PM

We'll be presenting two projects at #Cosyne2025, representing two main research directions in our lab:

🧠🤖 🧠📈

1/3

March 27, 2025 at 7:13 PM

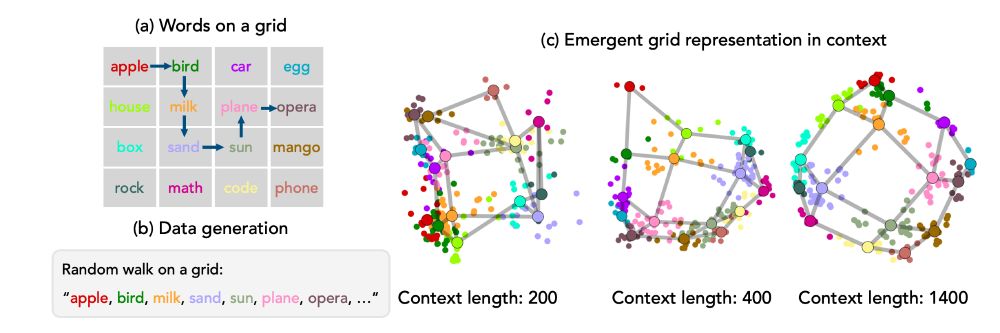

Interesting paper showing how LLMs change their representational geometry in-context to match a task structure: arxiv.org/abs/2501.00070

Here, the model’s latent representations show a grid structure matching the task.

#MLSky #NeuroAI

January 6, 2025 at 4:19 PM

Just came across this interesting blog post on the job market for new PhD grads in AI: kyunghyuncho.me/i-sensed-anxiety-and-frustration-at-neurips24

The argument feels pretty reasonable. Here is my take: (1/6)

#MLSky #NeuroAI 🧠📈

January 3, 2025 at 4:02 PM

I really like this review paper by Justin Gardner: www.nature.com/articles/s41...

I keep coming back to it whenever I’m writing about models of perception.

I especially like this quote; it took me a while to fully wrap my head around it, but I think it touches on something quite fundamental.

🧠📈

December 11, 2024 at 12:28 PM

Next semester, I’m kicking off my NeuroAI course for the first time… IN FRENCH! 🇨🇦🥖 "IA en psychologie et neuroscience" 🤖🧠

It will cover the basics of AI and its applications in neuroscience & psychology.

I’ll be sharing updates as we go, but here’s slide #2 from the first session.

December 10, 2024 at 4:35 PM

"Hanging On To The Edges: Staying in the Game" by Daniel Nettle

www.danielnettle.org.uk/wp-content/u...

(h/t someone who posted it here and now I can’t find the OP)

December 7, 2024 at 4:55 PM

"Shahab, you just never shut up, do you?" Sounds familiar 🫣

December 5, 2024 at 1:14 AM

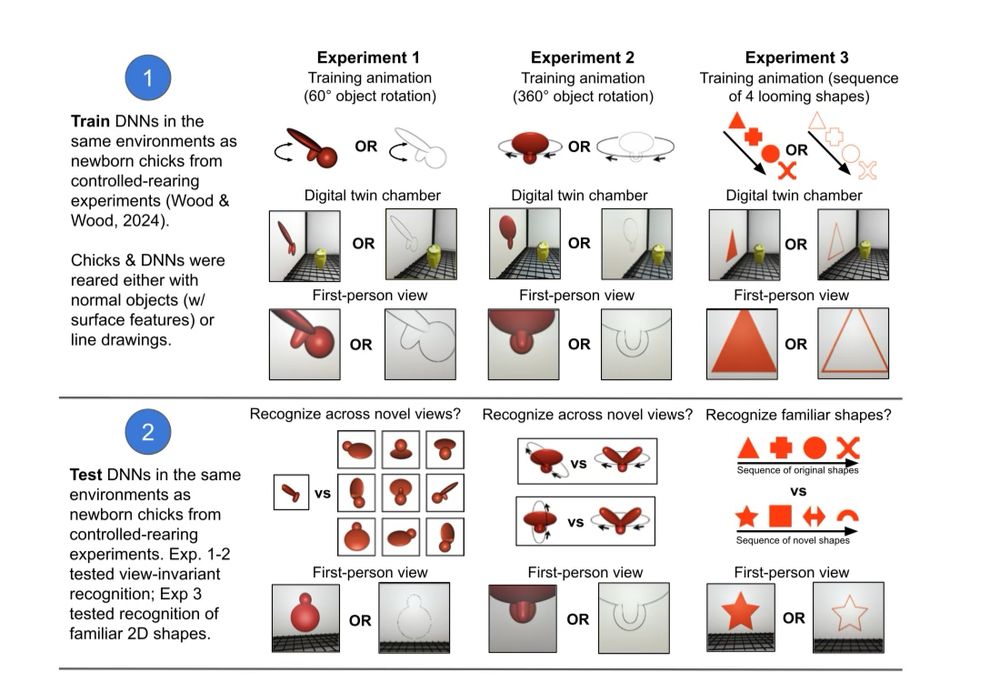

Not many papers have shown vision transformers outperforming conv nets when it comes to brain/behavior alignment.

This paper (openreview.net/forum?id=vHq...) claims that ViTs produce error patterns more similar to those of control-reared chicks than CNNs do.

#NeuroAI

November 29, 2024 at 2:06 PM

How about this one (from the V-JEPA paper)? Comparing different masking strategies is called an ablation experiment.

November 28, 2024 at 3:56 PM

This is from the Barlow Twins paper: proceedings.mlr.press/v139/zbontar...

November 28, 2024 at 3:26 PM

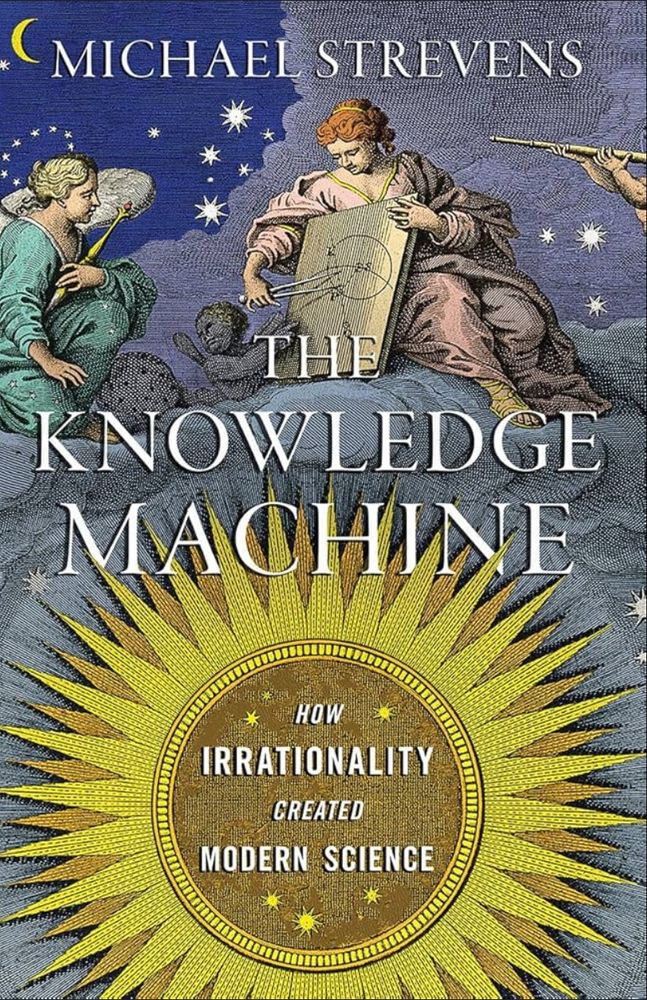

I’d say it’s not just attractiveness; it’s attractiveness shared among a group of people who also engage in *constructive* criticism based on accepted norms and frameworks. It’s a social construct.

This excellent book is quite relevant here (h/t @dlevenstein.bsky.social)

November 25, 2024 at 1:55 PM

"Given what you know about me, create an image of my life"

Impressive, especially the emphasis on the activity at the back of the brain (quite close to where area MT is).

Ok … It knows too much about me.

November 23, 2024 at 5:32 AM

Robinson’s papers on the necessity of using distributed parallel processing (aka ANNs) to model the brain (and oculomotor system specifically) also had a big influence on my eventual shift to what’s now known as #NeuroAI.

His BBS (Behavioral and Brain Sciences) paper is a must-read: www.cambridge.org/core/journal...

November 19, 2024 at 8:30 PM

For me, it wasn’t a book; it was a paper by David Robinson “A model of the smooth pursuit eye movement system.”

As an undergrad studying control theory in Iran, I found it a breath of fresh air to see these ideas applied to something other than oil & gas plants.

November 19, 2024 at 8:14 PM

French is tough, man!

November 12, 2024 at 5:02 AM
