Enjoying Bsky without understanding. Fullstack dev. Working out of NYPL when their wifi works.

"""

As instruction count increases, instruction-following quality decreases uniformly. This means that as you give the LLM more instructions, it doesn't simply ignore the newer ("further down in the file") instructions - it begins to ignore all of them uniformly

"""

Is this what they call "dickensian writing"? I can't believe this was any more decipherable in David Copperfield's time.

![The "Oh? You're Approaching Me? / JoJo Approach". As described by knowyourmeme:

The original scene occurs in Chapter 143, "DIO's World (10)"[1], of the JoJo's Bizarre Adventure: Stardust Crusaders manga, during protagonist Jotaro Kujo's confrontation with main villain Dio Brando. As Jotaro angrily approaches Dio, the latter asks, "Oh? You're approaching me? Instead of running away, you're coming right to me?" to which Jotaro responds, "I can't beat the shit out of you without getting closer." The issue was published on February 24th, 1992.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:7uen4esa33kb4mg5bf2jb5ml/bafkreidy2m2r72psjyzh2ifunpmx6axnqkjebq7h4jr7odzphpwjwny6jq@jpeg)

![A screencap of a job rejection email from Discord. The email template did not correctly insert the job role, so it has the literal text "[[MANUALLY ENTER JOB NAME]]".](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:7uen4esa33kb4mg5bf2jb5ml/bafkreigkgyjewnmur4ff3izmjs6a6fj4lusuxd6e2kqkwz2cxcdm2vk5ya@jpeg)

Here's some apps that you may want to double-check & turn off personalized ad data for:

NETFLIX: account > security & privacy > privacy & data settings > data privacy > opt out

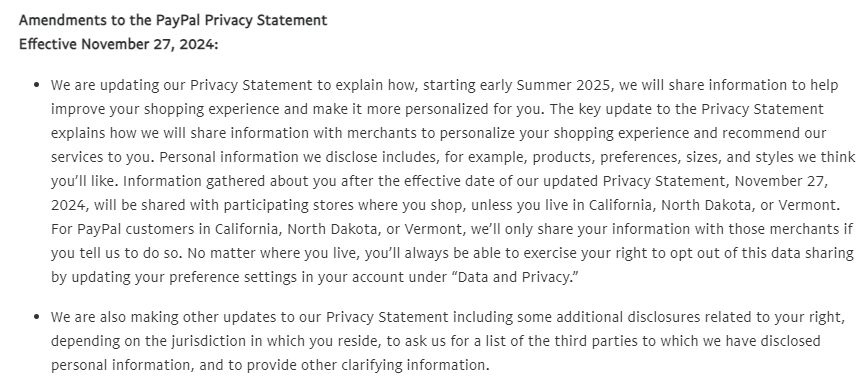

go to Settings > Data & Privacy > Manage shared info > Personalized shopping, and toggle that shit off

I have mixed feelings about Michael Lewis (the author), but this article is like 🤌😙

gift link: wapo.st/4ggsRLV

Gift link about biases in child learning studies and how to identify them

wapo.st/3WZ9HRQ
