- Bradley-Terry (batch MLE, our ground truth)

- Elo (online, chess-standard)

- Glicko-1 (online, uncertainty-aware)

- GXE (Glicko-derived expected win %)

(2/5)
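For readers less familiar with these systems, here is a minimal Python sketch of three of them under textbook assumptions. It covers a standard Elo update (K = 32, 400-point logistic scale), a batch Bradley-Terry MLE via Hunter's MM iteration, and GXE computed as the Glicko expected win % against a reference player at rating 1500 / RD 350. The reference values match the formula Pokémon Showdown is commonly described as using, but treat them as an assumption. The full Glicko-1 rating update is omitted for brevity; only its g(RD) attenuation factor is used. This is not the evaluation code behind the thread.

```python
import math

# --- Elo: online update after each game ---
def elo_expected(r_a: float, r_b: float) -> float:
    """Expected score of A vs. B under the logistic Elo model (400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))

def elo_update(r_a: float, r_b: float, score_a: float, k: float = 32.0):
    """Return updated (r_a, r_b); score_a is 1 (win), 0.5 (draw) or 0 (loss)."""
    delta = k * (score_a - elo_expected(r_a, r_b))
    return r_a + delta, r_b - delta

# --- Bradley-Terry: batch MLE over all games at once ---
def bradley_terry_mle(wins, n_iter: int = 1000, tol: float = 1e-10):
    """MM iteration (Hunter, 2004) for Bradley-Terry strengths.

    wins[i][j] = games player i won against player j. Assumes every player
    has at least one win and one loss; otherwise the MLE does not exist.
    Strengths are normalized to geometric mean 1 (only ratios are identified).
    """
    n = len(wins)
    p = [1.0] * n
    for _ in range(n_iter):
        new_p = []
        for i in range(n):
            total_wins = sum(wins[i])
            denom = sum((wins[i][j] + wins[j][i]) / (p[i] + p[j])
                        for j in range(n) if j != i)
            new_p.append(total_wins / denom)
        geo_mean = math.exp(sum(math.log(x) for x in new_p) / n)
        new_p = [x / geo_mean for x in new_p]
        converged = max(abs(a - b) for a, b in zip(new_p, p)) < tol
        p = new_p
        if converged:
            break
    return p

def bt_to_elo(strength: float, anchor: float = 1500.0) -> float:
    """Map a BT strength onto the Elo scale: p_i / (p_i + p_j) equals
    elo_expected(r_i, r_j) when p = 10 ** ((r - anchor) / 400)."""
    return anchor + 400.0 * math.log10(strength)

# --- GXE: Glicko-derived expected win % vs. a reference opponent ---
def glicko_g(rd: float) -> float:
    """Glicko-1 attenuation factor for rating deviation rd."""
    q = math.log(10) / 400.0
    return 1.0 / math.sqrt(1.0 + 3.0 * q ** 2 * rd ** 2 / math.pi ** 2)

def gxe(rating: float, rd: float, ref_rating: float = 1500.0, ref_rd: float = 350.0) -> float:
    """Expected win % against an (assumed) reference player at 1500 / RD 350."""
    g = glicko_g(math.sqrt(rd ** 2 + ref_rd ** 2))
    return 100.0 / (1.0 + 10 ** (-g * (rating - ref_rating) / 400.0))

if __name__ == "__main__":
    wins = [[0, 3, 2], [1, 0, 3], [2, 1, 0]]  # toy round-robin, 4 games per pair
    strengths = bradley_terry_mle(wins)
    print([round(bt_to_elo(s)) for s in strengths])
    print(elo_update(1500.0, 1500.0, 1.0))  # between equals, the winner gains k/2
    print(round(gxe(1700.0, 50.0), 1))
```

Because Bradley-Terry refits on all games at once, its result does not depend on game order, whereas Elo's does; that is one reason a batch MLE makes a reasonable reference point for the online systems.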

Announcing our replay archive preview: We are releasing an additional 25k games to help you train a metagame exploiter (5 million more will be released after the qualifier)

replays.pokeagentshowdown.com:8443/

(3/3)

- The RPG requires an autonomous embodied agent with perception, planning, memory, and control

- VGC and Gen 9 OU demand fast-paced opponent modeling in short games, where erroneous actions are punished

(1/3)
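A minimal sketch of how those four components might compose in one control loop. Everything here is hypothetical: `perceive`, `plan`, `act`, `is_done`, and the `Memory` class are placeholder names, and the toy corridor stands in for the game, which in practice would involve screen or RAM parsing and an LLM or RL planner.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Tuple

@dataclass
class Memory:
    """Hypothetical rolling memory: keeps only the most recent `capacity` events."""
    capacity: int = 50
    events: List[str] = field(default_factory=list)

    def add(self, event: str) -> None:
        self.events.append(event)
        del self.events[:-self.capacity]  # drop the oldest entries beyond capacity

def run_agent(
    state: Any,
    perceive: Callable[[Any], str],         # perception: raw game state -> observation
    plan: Callable[[str, List[str]], str],  # planning: observation + memory -> action
    act: Callable[[Any, str], Any],         # control: execute the action in the game
    is_done: Callable[[Any], bool],         # hypothetical goal check (e.g. a milestone)
    memory: Memory,
    max_steps: int = 100,
) -> Tuple[Any, int]:
    """Perceive -> remember -> plan -> act; returns (final_state, steps_taken)."""
    for step in range(max_steps):
        if is_done(state):
            return state, step
        obs = perceive(state)
        memory.add(f"obs:{obs}")
        action = plan(obs, memory.events)
        memory.add(f"act:{action}")
        state = act(state, action)
    return state, max_steps

if __name__ == "__main__":
    # Toy corridor: walk from x=0 to x=5.
    goal = 5
    mem = Memory(capacity=10)
    final, steps = run_agent(
        state=0,
        perceive=lambda s: f"x={s}",
        plan=lambda obs, events: "right" if int(obs.split("=")[1]) < goal else "left",
        act=lambda s, a: s + 1 if a == "right" else s - 1,
        is_done=lambda s: s == goal,
        memory=mem,
    )
    print(final, steps, len(mem.events))
```

The bounded memory is the design point worth noting: a long-horizon RPG run quickly outgrows any context window, so something has to decide what the planner still sees.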

Most people say TMLR is the only good alternative, but they remain skeptical

Jumpstart your PokéAgent Challenge submission ahead of NeurIPS!

📅 Sept 13–14

✅ Leaderboards reset Sat 10AM EDT

🎙️ Lightning talks on LLMs, RL, and Pokémon

💬 Live office hours

🏆 $2k in prizes

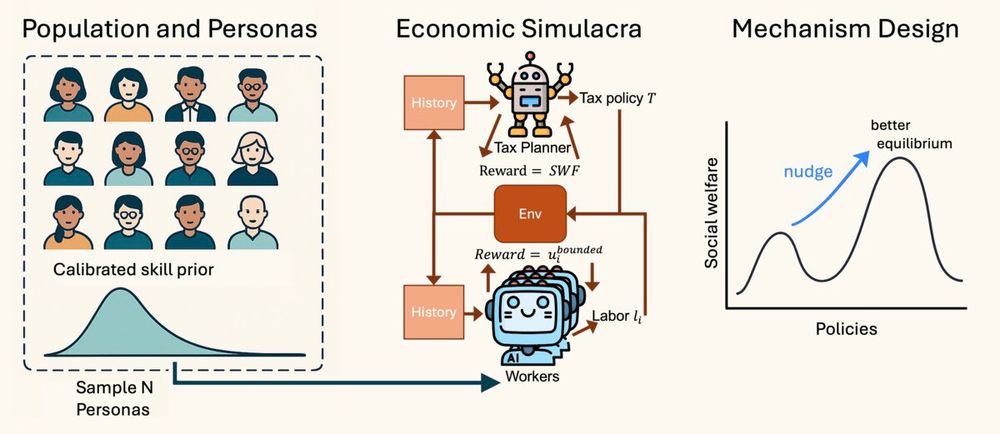

🤔 Can one agent “nudge” a synthetic civilization of Census‑grounded agents toward higher social welfare—all by optimizing utilities in‑context? Meet the LLM Economist ↓

Two tracks:

① Showdown Battling – imperfect-info, turn-based strategy

② Pokémon Emerald Speedrunning – long-horizon RPG planning

5M labeled replays • starter kit • baselines.

Bring your LLM, RL, or hybrid agent!

Key insights we’ll unpack:

• Base LLM + test-time planning

• Game-theoretic scaffolding

• Context-engineered opponent prediction

• Comparative LLM-as-judge (relative > absolute)
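To illustrate the relative-over-absolute idea, here is a sketch that ranks candidates purely from pairwise verdicts instead of asking a judge for absolute scores. The `judge` callable is a hypothetical stand-in for an LLM prompt that answers "A" or "B". Judging each pair in both orders is one common way to dampen position bias; it is an assumption here, not necessarily what the talk describes.

```python
from itertools import combinations
from typing import Callable, Dict, List, Sequence

def rank_by_pairwise_judge(
    items: Sequence[str],
    judge: Callable[[str, str], str],
) -> List[str]:
    """Rank unique candidates from round-robin pairwise verdicts.

    `judge(first, second)` is a hypothetical LLM call returning "A" if `first`
    is better and "B" otherwise. Each pair is judged in both presentation
    orders to dampen position bias; ties keep the input order.
    """
    wins: Dict[str, int] = {x: 0 for x in items}
    for a, b in combinations(items, 2):
        for first, second in ((a, b), (b, a)):
            winner = first if judge(first, second) == "A" else second
            wins[winner] += 1
    return sorted(items, key=lambda x: wins[x], reverse=True)

if __name__ == "__main__":
    hidden = {"plan_a": 0.2, "plan_b": 0.9, "plan_c": 0.5}  # toy stand-in for judge preferences
    toy_judge = lambda x, y: "A" if hidden[x] > hidden[y] else "B"
    print(rank_by_pairwise_judge(list(hidden), toy_judge))
```

A judge that always picks the first slot ends up giving every candidate the same win count, which is exactly the bias the both-orders scheme is meant to cancel. The win counts could also be fed into a Bradley-Terry fit to get graded strengths.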

Catch me Thu Jul 17, 4:30-7 PM PT👇

🚙🚙🚙

Send me a message if you're in the Bay Area and want to chat!

This should serve as an excellent benchmark for both competitive games AND ‘speedrunning’ the RPG. I hope to see the RL and LLM agent communities working together here to evaluate agents in Pokémon

More info soon👀

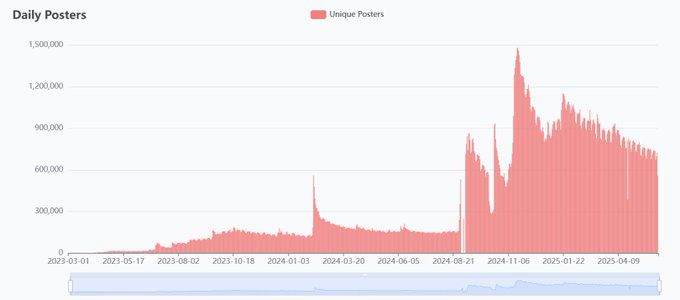

Is the study justified since bots are already rampant on Reddit?

Or does this cross ethical lines?

Our agent, powered by GPT-4, achieves a win rate of 84% against Abyssal (best rule-based bot) and a local Elo of 1268, outperforming all baselines, including other LLM-based agents and traditional methods!
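For intuition about what an 84% head-to-head win rate means on a rating scale: under the standard logistic Elo model, a win probability p implies a rating gap of 400 · log10(p / (1 - p)). This is a back-of-the-envelope conversion only. It assumes that single model and does not reproduce the reported local Elo of 1268, which comes from the authors' own pool of opponents.

```python
import math

def elo_gap_from_win_rate(p: float) -> float:
    """Rating gap implied by a head-to-head win probability p, 0 < p < 1."""
    return 400.0 * math.log10(p / (1.0 - p))

def win_rate_from_elo_gap(gap: float) -> float:
    """Inverse: expected score of the side rated `gap` points higher."""
    return 1.0 / (1.0 + 10 ** (-gap / 400.0))

if __name__ == "__main__":
    print(round(elo_gap_from_win_rate(0.84)))  # → 288
```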

Result: Expert-level play at human speed!

PokéChamp leverages an LLM for action sampling, opponent modeling and value estimation, with no domain-specific training required
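A generic depth-limited minimax sketch showing where those three LLM roles could plug in. It is not PokéChamp's implementation: `sample_actions`, `predict_opponent`, and `estimate_value` are hypothetical stubs for LLM calls, `step` is a hypothetical battle simulator, and the simultaneous-move turn is simplified by assuming the opponent answers with the reply that is worst for us.

```python
from typing import Any, Callable, List, Optional, Tuple

State = Any
Action = str

def minimax_search(
    root: State,
    depth: int,
    sample_actions: Callable[[State], List[Action]],    # LLM role 1 (stub): propose our moves
    predict_opponent: Callable[[State], List[Action]],  # LLM role 2 (stub): likely opponent moves
    estimate_value: Callable[[State], float],           # LLM role 3 (stub): score a leaf state
    step: Callable[[State, Action, Optional[Action]], State],  # hypothetical battle simulator
) -> Tuple[float, Optional[Action]]:
    """Depth-limited minimax over LLM-proposed moves; returns (value, best_action)."""

    def value(state: State, d: int) -> Tuple[float, Optional[Action]]:
        if d == 0:
            return estimate_value(state), None
        ours = sample_actions(state)
        if not ours:  # no moves proposed: fall back to the leaf estimate
            return estimate_value(state), None
        replies: List[Optional[Action]] = list(predict_opponent(state)) or [None]
        best_val, best_act = float("-inf"), None
        for a in ours:
            # Simplification: treat the simultaneous turn as if the opponent
            # answers with the reply that is worst for us.
            worst = min(value(step(state, a, b), d - 1)[0] for b in replies)
            if worst > best_val:
                best_val, best_act = worst, a
        return best_val, best_act

    return value(root, depth)

if __name__ == "__main__":
    # Toy payoff table: change in our advantage for (our move, their move).
    payoff = {("attack", "attack"): 1, ("attack", "defend"): -2,
              ("defend", "attack"): 0, ("defend", "defend"): 0}
    moves = lambda s: ["attack", "defend"]
    print(minimax_search(0, 2, moves, moves, float, lambda s, a, b: s + payoff[(a, b)]))
```

Sampling only a few candidate moves per side keeps the tree small enough that each node's LLM calls stay affordable, which is the main reason to let a model propose actions instead of enumerating every legal one.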

Introducing PokéChamp, our minimax LLM agent that reaches an Elo in the top 30%-10% of human players on Pokémon Showdown!

New paper on arXiv and code on GitHub!
