https://sebschu.com

🌐Website: rexbench.com

📄Paper: arxiv.org/abs/2506.22598

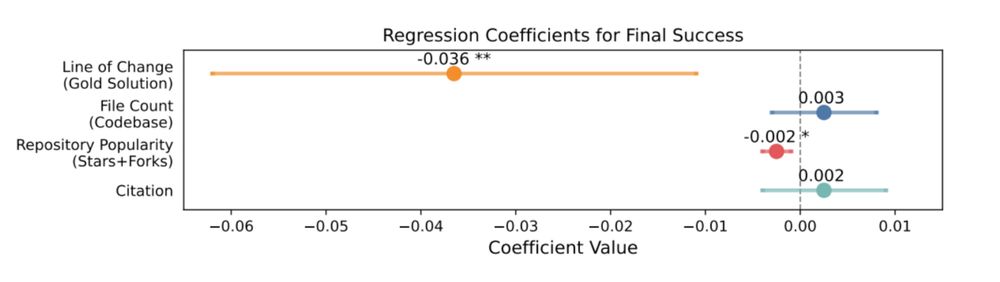

Statistically, tasks with more lines of change in the gold solution were harder, while repo size and popularity had only marginal effects. Qualitatively, agent performance aligned poorly with the difficulty perceived by human experts!

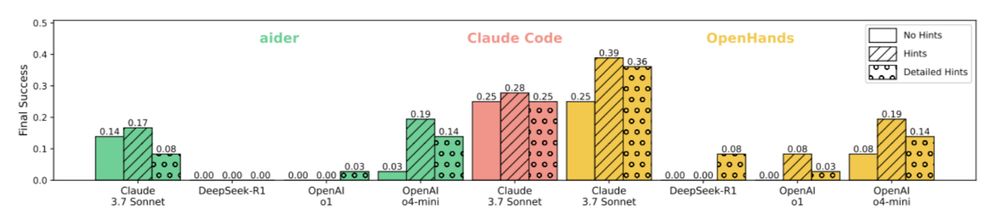

We provided two levels of human-written hints. L1: information localization (e.g., files to edit) & L2: step-by-step guidance. With hints, the best agent’s performance improves to 39%, showing that substantial human guidance is still needed.

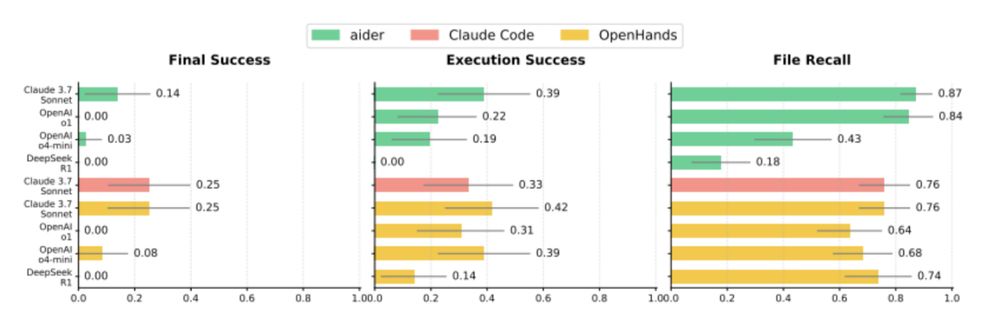

The best-performing agents (OpenHands + Claude 3.7 Sonnet and Claude Code) only had a 25% average success rate across 3 runs. But we were still impressed that the top agents achieved end-to-end success on several tasks!
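
For readers wondering how an "average success rate across 3 runs" might be computed, here is a minimal sketch. It assumes each run yields one pass/fail outcome per task and that the metric is the mean of the per-run success fractions; the function name `average_success_rate` and the outcome data are made up for illustration and are not taken from the paper.

```python
from statistics import mean


def average_success_rate(runs):
    """Mean over runs of the fraction of tasks that succeeded in each run.

    `runs` is a list of runs; each run is a non-empty list of booleans,
    one per task (True = end-to-end success). Hypothetical helper.
    """
    if not runs:
        raise ValueError("need at least one run")
    per_run = [sum(run) / len(run) for run in runs]
    return mean(per_run)


# Made-up outcomes for 4 tasks over 3 runs (illustration only, not real results).
runs = [
    [True, False, False, False],
    [False, False, True, False],
    [True, False, False, False],
]

rate = average_success_rate(runs)
print(f"{rate:.0%}")
```

Note that in this toy data a different task succeeds in the second run, so averaging over runs smooths out run-to-run variation rather than reporting a single lucky or unlucky attempt.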

The agents get papers, code, & extension hypotheses as inputs and produce code edits. The edited code is then executed.

New research builds on prior work, so understanding existing research & building upon it is a key capacity for autonomous research agents. Many research coding benchmarks focus on replication, but we wanted to target *novel* research extensions.
