It appears that state AI bills -- many of which big tech has fought tooth and nail to prevent -- are categorically regulatory capture.

t.co/Ag4J6rrejz

Thx Epoch & Bhandari et al.

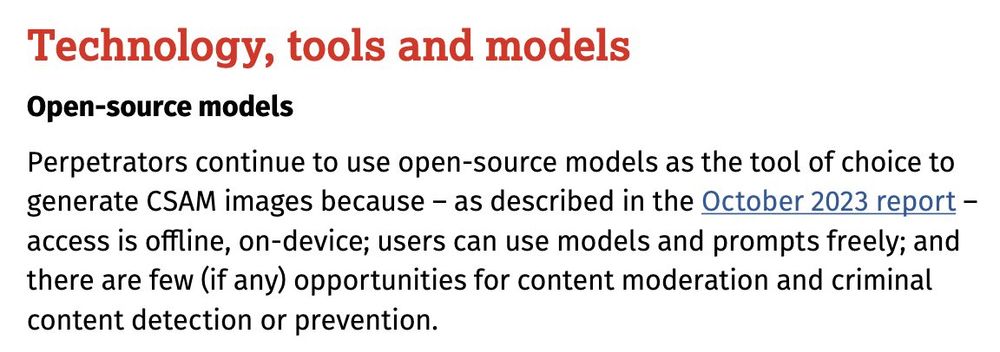

From a technical perspective, safeguarding open-weight model safety is AI safety in hard mode. But there's still a lot of progress to be made. Our new paper covers 16 open problems.

🧵🧵🧵

admin.iwf.org.uk/media/nadlc...

Thx @EpochAIResearch & Bhandari et al.

I'm increasingly persuaded that the only quantitative measures that matter anymore are usage stats & profit.

Now that Moonshot claims Kimi K2 Thinking is SOTA, it seems, uh, less than ideal that it came with zero reporting related to safety/risk.

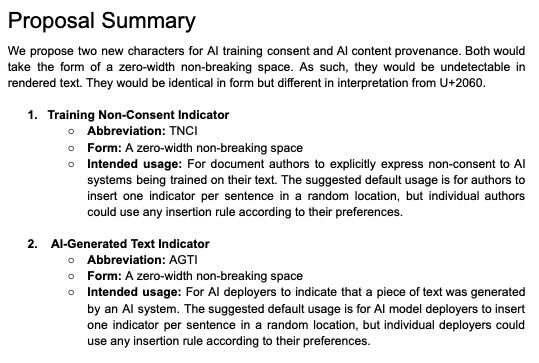

unicode.org/L2/L2025/252...

t.co/yJfp8ezU64

Of course -- that's obvious. Nobody would ever dispute that.

So then why are we saying that?

Maybe it's a little too obvious...

t.co/us8MEhMrIh

time.com/7308857/chin...

papers.ssrn.com/sol3/papers....

- I just finished my 6-month residency at UK AISI.

- I'm going back to MIT for the final year of my PhD.

- I'm on the postdoc and faculty job markets this fall!

t.co/nHMFKXF4B8
