I'm excited to be on the faculty job market this fall. I just updated my website with my CV.

stephencasper.com

It appears that state AI bills -- many of which big tech has fought tooth and nail to prevent -- are categorically regulatory capture.

But in case it makes your life easier, feel free to copy or adapt my rebuttal template linked here.

docs.google.com/document/d/1...

From a technical perspective, safeguarding open-weight model safety is AI safety in hard mode. But there's still a lot of progress to be made. Our new paper covers 16 open problems.

🧵🧵🧵

I'm increasingly persuaded that the only quantitative measures that matter anymore are usage stats & profit.

Now that Moonshot claims Kimi K2 Thinking is SOTA, it seems, uh, less than ideal that it came with zero reporting related to safety/risk.

Is it because more Chinese companies are "fast followers" who find their niche by making open models?

Is it cultural? Do Eastern/Chinese cultures value open tech more?

We’ll host speakers from political theory, economics, mechanism design, history, and hierarchical agency.

post-agi.org

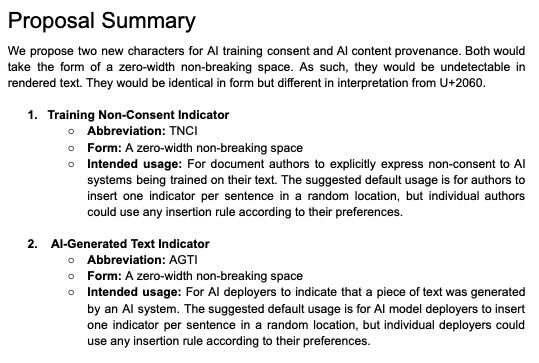

unicode.org/L2/L2025/252...

t.co/yJfp8ezU64

Of course -- that's obvious. Nobody would ever dispute that.

So then why are we saying that?

Maybe it's a little too obvious...

In a new paper with @realbrianjudge.bsky.social at #EAAMO25, we pull back the curtain on AI safety's toolkit. (1/n)

arxiv.org/pdf/2509.22872

www.nature.com/articles/d41...

We propose human agency as a new alignment target in HumanAgencyBench, made possible by AI simulation/evals. We find e.g., Claude most supports agency but also most tries to steer user values 👇 arxiv.org/abs/2509.08494

www.matsprogram.org/apply

Dark as night in the morning light.

I live high until I am ground.

I sit dry until I am drowned.

What am I?

TL;DR, I think it's a confusing reframing of fairly well studied and previously solved problems.

time.com/7308857/chin...

papers.ssrn.com/sol3/papers....

- I just finished my 6-month residency at UK AISI.

- I'm going back to MIT for the final year of my PhD.

- I'm on the postdoc and faculty job markets this fall!

t.co/nHMFKXF4B8
