chandar-lab.github.io/NovoMolGen/

github.com/chandar-lab/...

github.com/chandar-lab/...

Paper: www.arxiv.org/abs/2508.13408

Code: github.com/chandar-lab/...

Models: huggingface.co/collections/...

Consider submitting!

Hadi, in collaboration with Mathieu, Miao, and Janarthanan!

Paper: arxiv.org/abs/2503.14555

Code: github.com/chandar-lab/...

Models: huggingface.co/chandar-lab/...

Website: chandar-lab.github.io/R3D2-A-Gener.... 7/7

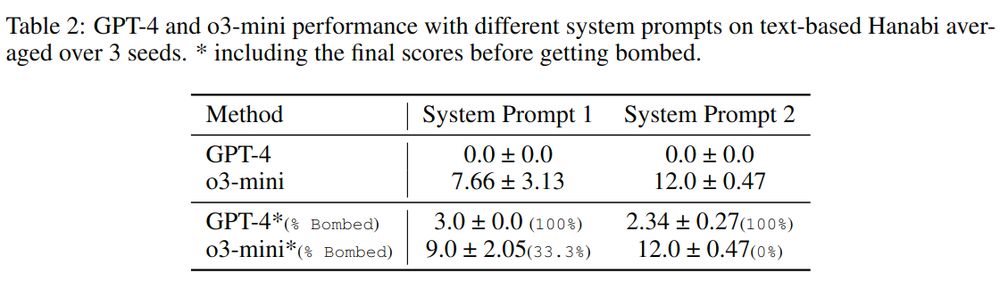

Results? Still far off—o3-mini scored only 12 out of 25 even with extensive prompting! 🚧 #LLMs 6/n

Openreview: openreview.net/forum?id=pCj...

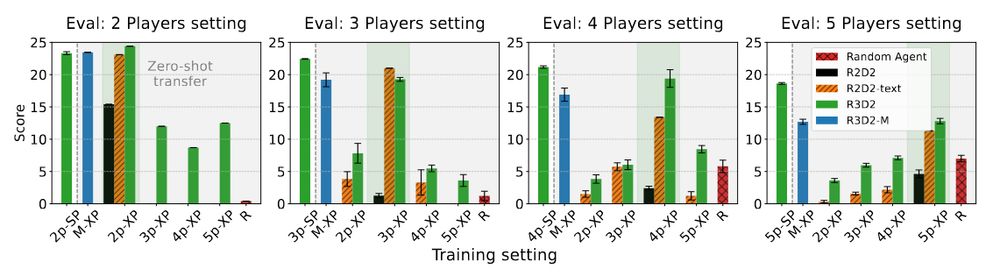

5/n
