New paper + @philipwitti.bsky.social

@gregorbachmann.bsky.social :) arxiv.org/abs/2412.15210

This is my full-time job.

www.ai-supremacy.com/subscribe?co...

🧵>>

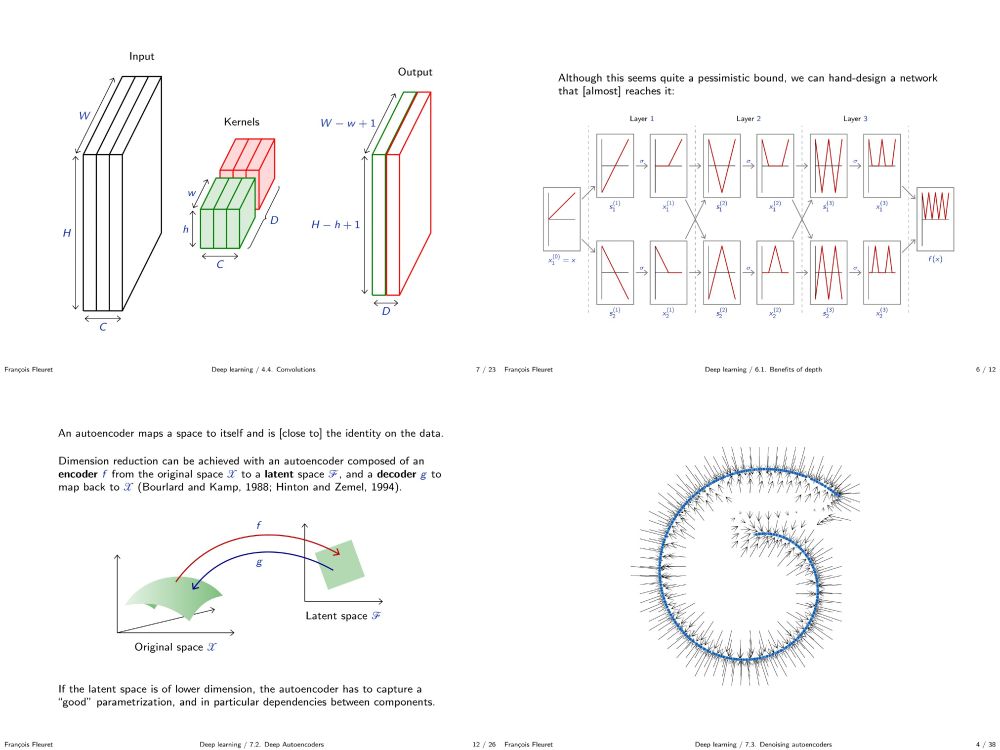

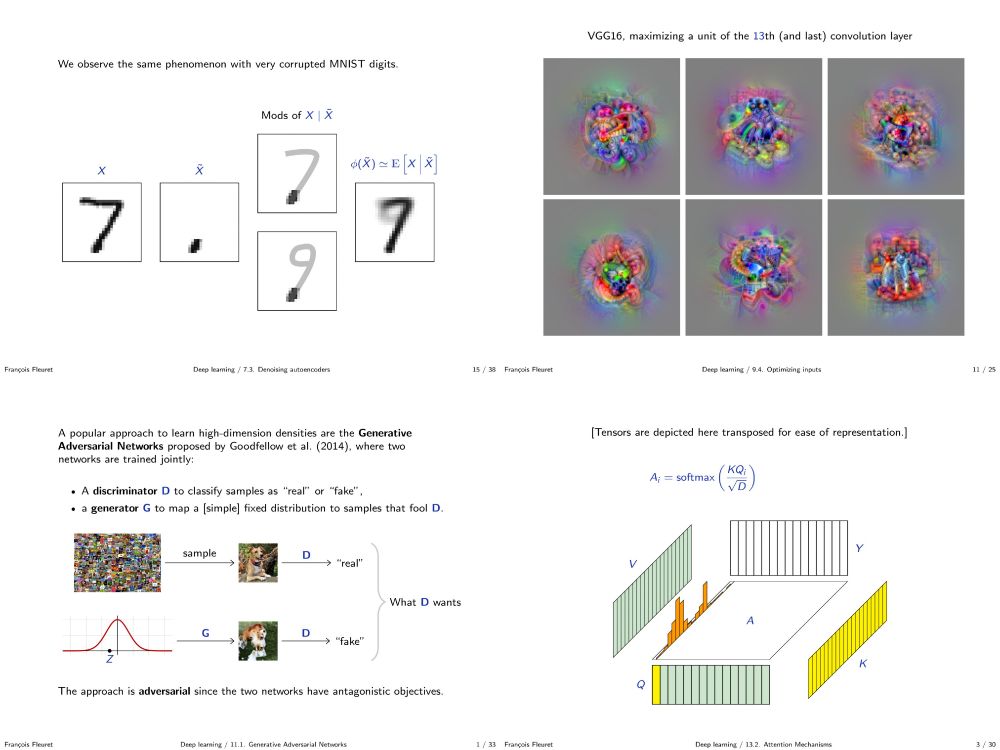

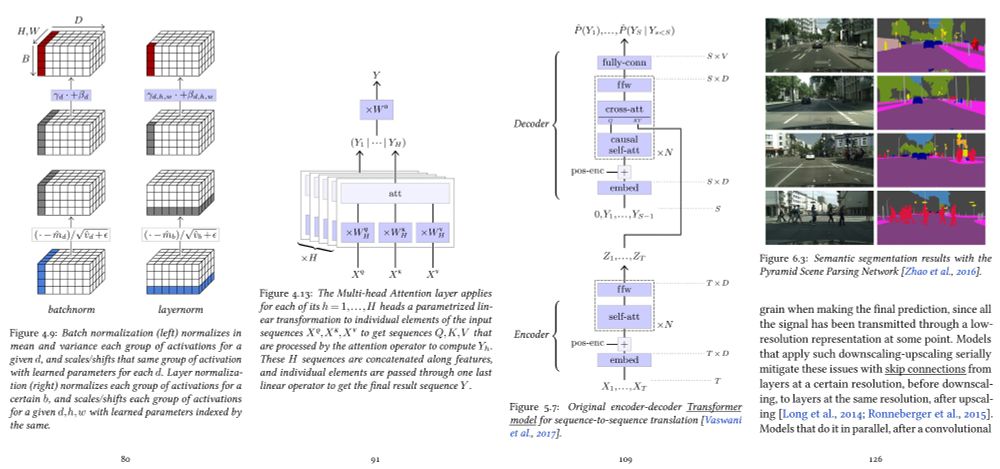

fleuret.org/dlc/

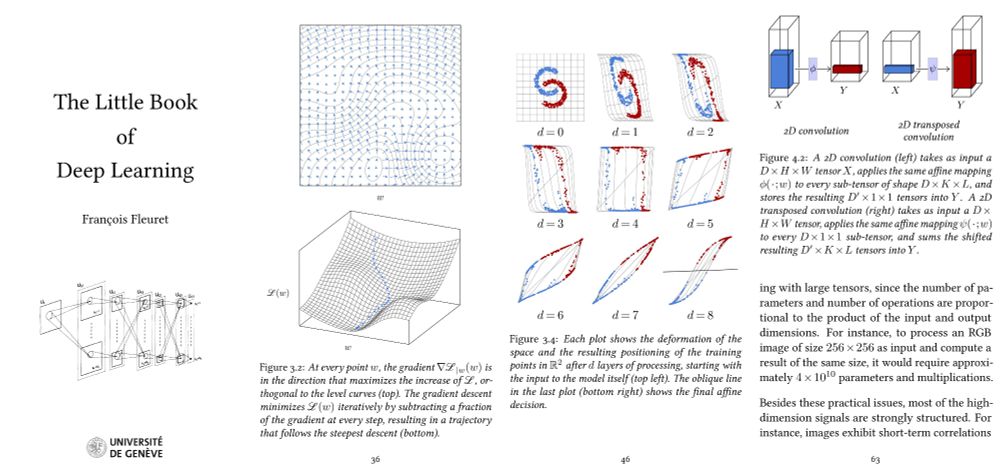

And my "Little Book of Deep Learning" is available as a phone-formatted pdf (nearing 700k downloads!)

fleuret.org/lbdl/
