Website: royf.org

1. bsky.app/profile/royf...

2. bsky.app/profile/royf...

3. bsky.app/profile/royf...

4. bsky.app/profile/royf...

5. bsky.app/profile/royf...

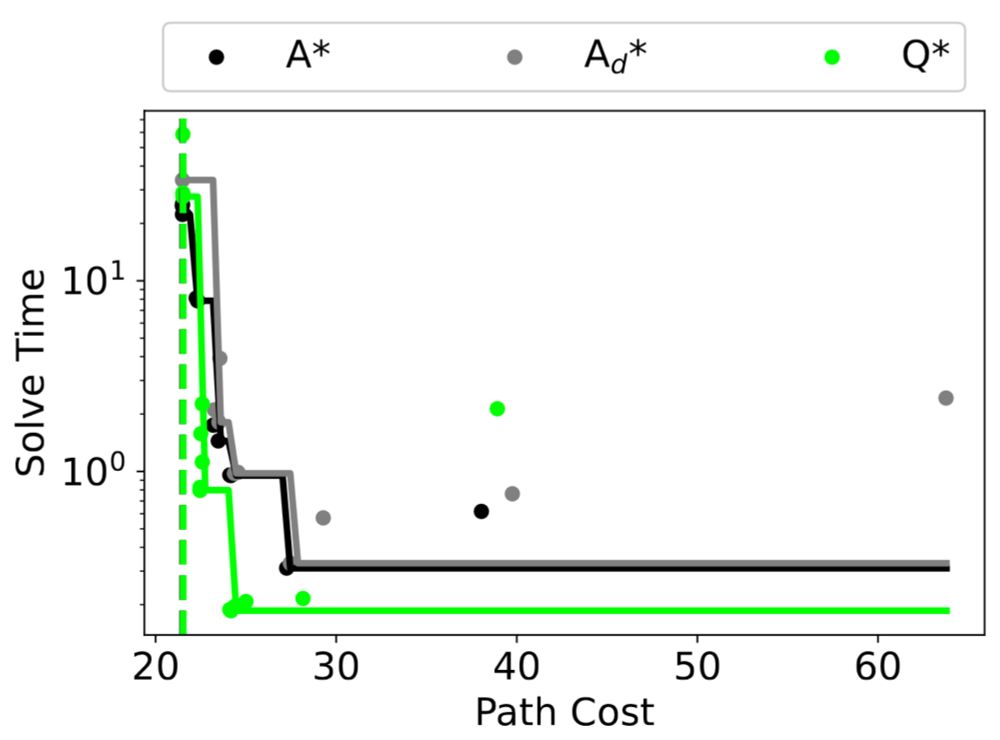

“Q* Search: Heuristic Search with Deep Q-Networks”, by Forest Agostinelli, in collaboration with Shahaf Shperberg, Alexander Shmakov, Stephen McAleer, and Pierre Baldi. PRL @ ICAPS 2024.

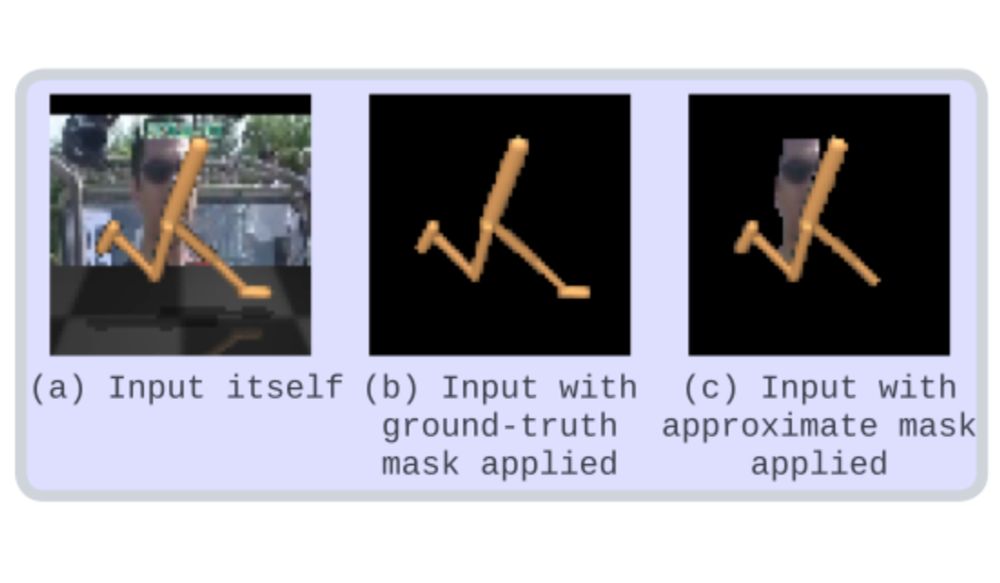

“Make the Pertinent Salient: Task-Relevant Reconstruction for Visual Control with Distraction”, by Kyungmin Kim, in collaboration with Charless Fowlkes. TAFM @ RLC 2024.
